NLP (Natural Language Processing) removes many of the technical barriers that separate business stakeholders from the testing process.

With NLP-powered tools, teams can now describe test scenarios in plain English and watch them transform into comprehensive automated test suites.

This democratization of test creation enables business analysts, product managers, and QA engineers to collaborate seamlessly without technical bottlenecks.

Understanding NLP-Based Test Automation

NLP test automation leverages Natural Language Processing to enable computers to interpret, understand, and respond to human language in the context of software testing. Unlike traditional automation that requires scripting expertise, NLP-based approaches allow testers to write test cases using everyday language.

This technology processes natural language inputs such as user stories, acceptance criteria, and test scenario descriptions, converting them into executable test cases automatically. The result is a democratized testing environment where technical and non-technical team members can participate actively in QA processes.

Core Components of NLP Test Creation

1. Requirements Analysis

NLP systems extract requirements from user stories, functional specifications, and acceptance criteria. By understanding context and intent, these systems identify test conditions, expected results, and necessary test data.

2. Test Case Generation

Once requirements are analyzed, NLP algorithms automatically generate structured test cases. This includes creating positive scenarios, negative test cases, edge cases, and boundary conditions without manual intervention.
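To make positive, negative, and boundary cases concrete, here is a minimal Python sketch that derives them from one requirement of the form "between X and Y". It assumes the range is inclusive and uses a regular expression where a real NLP tool would use a trained language model; `GeneratedCase` and `cases_from_range_requirement` are hypothetical names for illustration only.

```python
import re
from dataclasses import dataclass


@dataclass
class GeneratedCase:
    kind: str           # "positive", "boundary", or "negative"
    value: int          # input value to feed the system under test
    expect_valid: bool  # whether the system should accept the value


def cases_from_range_requirement(requirement: str) -> list[GeneratedCase]:
    """Derive test cases from a requirement like 'must be between 1 and 99'.

    Assumption: the range is inclusive at both ends.
    """
    match = re.search(r"between (-?\d+) and (-?\d+)", requirement, re.IGNORECASE)
    if match is None:
        raise ValueError(f"No numeric range found in: {requirement!r}")
    low, high = int(match.group(1)), int(match.group(2))
    return [
        GeneratedCase("positive", (low + high) // 2, True),  # typical valid value
        GeneratedCase("boundary", low, True),                # lower edge
        GeneratedCase("boundary", high, True),               # upper edge
        GeneratedCase("negative", low - 1, False),           # just below the range
        GeneratedCase("negative", high + 1, False),          # just above the range
    ]


for case in cases_from_range_requirement("Quantity must be between 1 and 99."):
    print(case)
```

For "between 1 and 99" this yields 50 as a typical valid value, 1 and 99 as valid boundaries, and 0 and 100 as invalid inputs.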

3. Contextual Understanding

Advanced NLP models maintain context across multi-turn conversations and complex scenarios. This helps generated test cases reflect intended application behavior accurately, particularly for conversational interfaces and voice-controlled applications.

How NLP Processes Test Requirements

The transformation from natural language to executable tests follows a systematic approach. First, the system performs tokenization, breaking down requirements into smaller units like words and sentences.

Next, it applies named entity recognition to identify key elements such as user roles, actions, and expected outcomes. Dependency parsing then maps the relationships between these elements, such as which role performs which action, while sentiment analysis can be applied where a requirement concerns the tone of UI text.

This combined analysis helps generated test cases cover the specified functionality, including scenarios that manual test design might overlook.
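The tokenization and entity extraction described above can be sketched in a few lines of Python. This is a simplified stand-in, not how production NLP engines work: a regular expression for the common "As a ..., I want to ... so that ..." user-story template plays the role of a trained named entity recognizer, and `STORY_PATTERN`, `tokenize`, and `parse_story` are hypothetical names.

```python
import re

# Hypothetical pattern for the user-story template
# "As a <role>, I want to <action> so that <outcome>."
# A real NLP engine would use a trained NER model instead of a fixed regex.
STORY_PATTERN = re.compile(
    r"^As an? (?P<role>.+?), I want to (?P<action>.+?) so that (?P<outcome>.+?)\.?$",
    re.IGNORECASE,
)


def tokenize(text: str) -> list[str]:
    """Split text into lowercase word tokens (a simple regex tokenizer)."""
    return re.findall(r"[a-z0-9']+", text.lower())


def parse_story(story: str) -> dict:
    """Extract the role, action, and expected outcome from a user story."""
    match = STORY_PATTERN.match(story.strip())
    if match is None:
        raise ValueError(f"Story does not follow the template: {story!r}")
    parts = match.groupdict()
    parts["tokens"] = tokenize(story)
    return parts


parsed = parse_story(
    "As a registered user, I want to reset my password so that I can regain access."
)
print(parsed["role"], "|", parsed["action"], "|", parsed["outcome"])
```

The extracted role ("registered user"), action ("reset my password"), and outcome ("I can regain access") are the raw material from which preconditions, test steps, and assertions would be built.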

Key Methodologies and Techniques

| NLP Technique | Application in Testing |
|---|---|
| Tokenization | Breaking requirements into testable components |
| Named Entity Recognition | Identifying users, actions, and system elements |
| Sentiment Analysis | Understanding expected emotional responses in UI text |
| Text Classification | Categorizing requirements and test types |
| Dependency Parsing | Mapping relationships between test steps |
| Lemmatization | Normalizing variations in requirement phrasing |
| Word Embeddings | Capturing semantic meaning for context-aware tests |

Advanced Test Generation Approaches

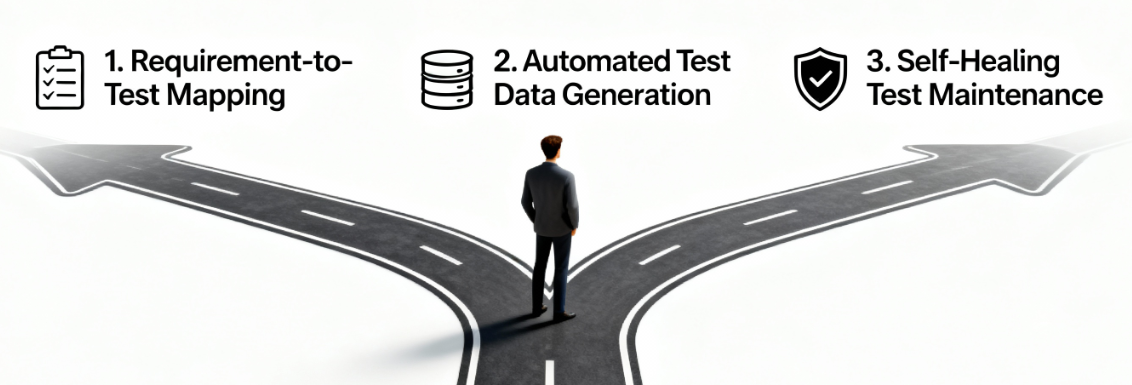

1. Requirement-to-Test Mapping

NLP systems analyze natural language requirements, link each requirement to the test cases that exercise it, and derive test coverage metrics from those links. Some studies report that NLP-based test automation achieves 92-97% accuracy in translating unstructured requirements into structured test cases.
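A requirement-to-test map and the coverage figure derived from it can be sketched as follows. The matching here is plain keyword overlap (Jaccard similarity over content words), a deliberately simple substitute for the semantic models real tools use; the stopword list, the 0.3 threshold, and the requirement and test IDs are all assumptions for illustration.

```python
import re

# Words too common to signal which requirement a test exercises (assumed list).
STOPWORDS = {"a", "an", "the", "to", "and", "as", "in", "with", "can", "must", "be", "user"}


def keywords(text: str) -> set[str]:
    """Lowercase content words with stopwords removed."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def jaccard(a: set[str], b: set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def map_requirements(requirements: dict[str, str], tests: dict[str, str],
                     threshold: float = 0.3) -> dict[str, list[str]]:
    """Link each requirement ID to the test IDs whose keyword overlap meets the threshold."""
    test_keywords = {tid: keywords(text) for tid, text in tests.items()}
    mapping = {}
    for rid, text in requirements.items():
        req_kw = keywords(text)
        mapping[rid] = [tid for tid, kw in test_keywords.items()
                        if jaccard(req_kw, kw) >= threshold]
    return mapping


def requirement_coverage(mapping: dict[str, list[str]]) -> float:
    """Fraction of requirements linked to at least one test."""
    if not mapping:
        return 0.0
    return sum(1 for tids in mapping.values() if tids) / len(mapping)


requirements = {
    "REQ-1": "User can log in with a valid password",
    "REQ-2": "User can export the report as PDF",
}
tests = {
    "T-1": "Log in with valid password succeeds",
    "T-2": "Log in with invalid password shows error",
}
mapping = map_requirements(requirements, tests)
print(mapping, requirement_coverage(mapping))
```

Here REQ-1 links to both login tests while REQ-2 links to none, so requirement coverage is 0.5 and the export requirement is flagged as untested.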

2. Automated Test Data Generation

Beyond creating test cases, NLP can generate realistic test data from text descriptions. This helps test scenarios mirror real-world use cases more closely, improving the quality of overall test coverage.
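One way to picture data generation from text descriptions is a table that maps words found in a field description to value generators. In this Python sketch the keyword table, the field names, and the generators are hypothetical; a real NLP tool would infer field types with a language model rather than by keyword lookup. A fixed random seed keeps the generated data reproducible between runs.

```python
import random
import re
import string


def _email(rng: random.Random) -> str:
    # example.com is reserved for documentation, so no real mailbox is targeted.
    return "".join(rng.choices(string.ascii_lowercase, k=8)) + "@example.com"


def _phone(rng: random.Random) -> str:
    # 555-0100 to 555-0199 are reserved for fictional use in North America.
    return f"555-01{rng.randint(0, 99):02d}"


def _adult_age(rng: random.Random) -> int:
    return rng.randint(18, 99)


# Hypothetical keyword-to-generator table; the first keyword found wins.
GENERATORS = [("email", _email), ("phone", _phone), ("age", _adult_age)]


def generate_value(description: str, rng: random.Random):
    """Pick a generator by matching whole words in the field description."""
    text = description.lower()
    for keyword, generator in GENERATORS:
        if re.search(rf"\b{keyword}\b", text):
            return generator(rng)
    raise ValueError(f"No generator for: {description!r}")


fields = {
    "contact": "A valid email address for the customer",
    "mobile": "The customer's phone number",
    "age": "Customer age in years (adult)",
}
rng = random.Random(42)  # fixed seed so the same data is produced on every run
record = {name: generate_value(desc, rng) for name, desc in fields.items()}
print(record)
```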

3. Self-Healing Test Maintenance

Modern NLP-powered platforms incorporate self-healing capabilities that automatically update test cases when application UI changes. This adaptation happens without manual intervention, significantly reducing maintenance overhead.
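The core idea behind self-healing can be shown with a toy locator: try the element ID recorded in the test, and if it no longer exists, fall back to an element whose visible label expresses the same intent, then update the stored test. In this sketch the UI is modeled as a list of dictionaries, and the synonym groups and 0.8 similarity threshold are assumptions; commercial platforms use far richer signals than a synonym list and `difflib` string similarity.

```python
from difflib import SequenceMatcher

# Hypothetical synonym groups: labels that express the same user intent.
SYNONYMS = [{"submit", "send", "confirm"}, {"cancel", "dismiss", "close"}]


def same_intent(a: str, b: str) -> bool:
    """Treat two labels as equivalent if identical, synonyms, or nearly identical."""
    a, b = a.strip().lower(), b.strip().lower()
    if a == b or any(a in group and b in group for group in SYNONYMS):
        return True
    return SequenceMatcher(None, a, b).ratio() >= 0.8  # tolerate small wording edits


def locate(ui: list[dict], step: dict) -> dict:
    """Find the step's element; if its recorded ID is gone, heal the step by intent."""
    for element in ui:
        if element["id"] == step["id"]:
            return element
    for element in ui:
        if same_intent(element["text"], step["label"]):
            print(f"Healed: {step['id']!r} -> {element['id']!r} (label {element['text']!r})")
            step["id"] = element["id"]  # update the stored test so future runs use the new ID
            return element
    raise LookupError(f"No element for step {step!r}")


# The test was recorded against a "Submit" button; the new build renamed it "Send".
step = {"action": "tap", "id": "btn-submit", "label": "Submit"}
new_ui = [{"id": "btn-send", "text": "Send"}, {"id": "btn-close", "text": "Close"}]
element = locate(new_ui, step)
```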

Intelligent Test Prioritization

NLP algorithms analyze historical test data and bug reports to prioritize test cases based on risk and likelihood of defect detection. This intelligent prioritization enables teams to focus resources on the most critical test scenarios first.

By examining patterns in past test executions, NLP systems identify which areas of the application are most prone to issues. This data-driven approach to test prioritization results in more efficient debugging cycles and faster time-to-market.
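A simple way to turn execution history into a priority order is a weighted risk score. The sketch below combines each test's historical failure rate with a count of bug reports linked to the feature it covers (the linking is where NLP matching of bug-report text would come in). The weights, the cap of 10 reports, and the test names are illustrative assumptions, not the scoring used by any particular tool.

```python
from dataclasses import dataclass


@dataclass
class HistoryRecord:
    name: str
    runs: int
    failures: int
    linked_bug_reports: int  # bug reports whose text was matched to this test's feature


def risk_score(record: HistoryRecord, w_fail: float = 0.7, w_bugs: float = 0.3,
               bug_cap: int = 10) -> float:
    """Weighted mix of historical failure rate and bug-report signal (both in 0..1)."""
    failure_rate = record.failures / record.runs if record.runs else 1.0  # never run: treat as risky
    bug_signal = min(record.linked_bug_reports, bug_cap) / bug_cap
    return w_fail * failure_rate + w_bugs * bug_signal


def prioritize(records: list[HistoryRecord]) -> list[HistoryRecord]:
    """Highest-risk tests first."""
    return sorted(records, key=risk_score, reverse=True)


history = [
    HistoryRecord("login_flow", runs=80, failures=2, linked_bug_reports=1),
    HistoryRecord("profile_edit", runs=40, failures=0, linked_bug_reports=0),
    HistoryRecord("checkout_flow", runs=50, failures=10, linked_bug_reports=6),
]
for record in prioritize(history):
    print(f"{record.name}: {risk_score(record):.3f}")
```

With these inputs, `checkout_flow` ranks first with a score of about 0.32, because it combines a 20% failure rate with the most linked bug reports.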

Benefits and Measurable Impact

Quantitative Improvements in Testing

Organizations implementing NLP-based test automation report measurable benefits across several dimensions. Some studies report a 78% improvement in test coverage for teams using NLP compared with manual testing approaches.

| Metric | NLP-Based Testing | Manual Testing |
|---|---|---|
| Test Case Accuracy | 92-97% | 75-85% |
| False Positive Rate | 8% | 12% |
| Test Consistency | 98% | 85% |
| Code Coverage Improvement | 15-20% higher | Baseline |

Efficiency and Speed Gains

1. Reduced Test Creation Time

NLP-powered platforms are reported to reduce test case generation time from approximately 24 hours to about 5 minutes per case. That is a reduction of more than 99% (5 minutes versus 1,440 minutes), allowing teams to scale testing operations without increasing headcount proportionally.

2. Faster Testing Cycles

Automation driven by NLP provides real-time feedback, enabling faster iterations. Teams report that testing cycles which previously took days can now complete in minutes.

3. Cost Reduction

Combining reinforcement learning and NLP in test automation has been reported to reduce overall testing costs by up to 46% while also improving test quality and coverage.

Enhanced Collaboration and Accessibility

NLP democratizes test creation by removing technical barriers, allowing business analysts to write test cases in plain language directly within testing tools. This eliminates the need to translate requirements into code, reducing friction in the QA process.

This accessibility improvement leads to better alignment between business goals and quality assurance efforts. When stakeholders can understand and contribute to test cases, governance over product quality strengthens significantly.

Product managers and non-technical team members can now participate actively in the testing process, creating acceptance criteria that convert directly into executable test cases.
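How plain-language acceptance criteria become executable can be illustrated with the step-definition model popularized by Cucumber and behave: each sentence is matched against registered patterns, and the matching handler runs. This self-contained Python sketch is not the mechanism of any specific NLP product; the step wording and the shopping-cart domain are hypothetical, and an NLP tool would replace the fixed regular expressions with more flexible language understanding.

```python
import re

# Registry of (pattern, handler) pairs, in the spirit of Cucumber/behave step definitions.
STEPS = []


def step(pattern: str):
    """Decorator that registers a handler for plain-language steps matching `pattern`."""
    def register(func):
        STEPS.append((re.compile(pattern, re.IGNORECASE), func))
        return func
    return register


@step(r"the cart contains (\d+) items?")
def cart_contains(ctx, count):
    ctx["cart"] = int(count)


@step(r"the user removes (\d+) items?")
def user_removes(ctx, count):
    ctx["cart"] -= int(count)


@step(r"the cart shows (\d+) items?")
def cart_shows(ctx, count):
    assert ctx["cart"] == int(count), f"expected {count} items, got {ctx['cart']}"


def run_scenario(text: str) -> dict:
    """Execute each Given/When/Then line by dispatching it to a registered step."""
    ctx = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        body = re.sub(r"^(given|when|then|and)\s+", "", line, flags=re.IGNORECASE)
        for pattern, handler in STEPS:
            match = pattern.fullmatch(body)
            if match:
                handler(ctx, *match.groups())
                break
        else:
            raise LookupError(f"No step definition for: {line!r}")
    return ctx


criteria = """
Given the cart contains 3 items
When the user removes 1 item
Then the cart shows 2 items
"""
print(run_scenario(criteria))
```

A sentence with no matching step raises `LookupError`, which surfaces gaps between the criteria people write and what the automation understands.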

Challenges and Solutions

| Challenge | Description | Solution |
|---|---|---|
| Language Complexity | Human language variability and ambiguity | Use diverse datasets covering dialects, slang, and linguistic variations |
| Context Maintenance | Difficulty maintaining context across conversations | Implement continuous testing and AI-powered context tracking |
| Ambiguity Handling | Systems struggle with multiple interpretations | Deploy AI testing with clarification mechanisms for ambiguous inputs |
| Scalability Issues | Maintaining efficiency as complexity grows | Incorporate performance testing and load management strategies |
| Domain-Specific Accuracy | Models achieve 65-75% accuracy with complex terminology | Implement standardized glossaries and style guides |

Overcoming Technical Limitations

1. Handling Real-World Input Variability

Users interact with applications in unexpected ways, which can lead NLP systems to misinterpret their input. Randomized testing simulates unpredictable user interactions to check that edge cases are handled robustly.

Organizations report that systematic edge case analysis and parameterized testing for boundary conditions achieve edge case coverage equal to or better than that of traditional scripting approaches.
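Randomized testing of language input can be as simple as applying meaning-preserving variations (case, spacing, punctuation) to known phrases and checking that the system's interpretation does not change. In this sketch, `classify_intent` is a toy keyword classifier standing in for the NLP system under test, and the set of perturbations is an assumption; the random generator is seeded so any failure can be reproduced.

```python
import random
import re


def classify_intent(text: str) -> str:
    """Toy intent classifier standing in for the NLP system under test."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    if {"reset", "password"} <= words:
        return "reset_password"
    if "logout" in words or {"log", "out"} <= words:
        return "logout"
    return "unknown"


def perturb(text: str, rng: random.Random) -> str:
    """Apply one randomly chosen variation that should not change the meaning."""
    choice = rng.randrange(4)
    if choice == 0:
        return text.upper()
    if choice == 1:
        return "  " + text.replace(" ", "   ") + "  "  # irregular spacing
    if choice == 2:
        return text + rng.choice(["!", "?", "..."])    # trailing punctuation
    return text.capitalize()


def fuzz(classify, phrases, trials=200, seed=0):
    """Return (variant, expected, actual) for each perturbation that changed the result."""
    rng = random.Random(seed)  # seeded so any failure can be reproduced
    failures = []
    for _ in range(trials):
        phrase = rng.choice(phrases)
        variant = perturb(phrase, rng)
        expected, actual = classify(phrase), classify(variant)
        if actual != expected:
            failures.append((variant, expected, actual))
    return failures


phrases = ["reset my password", "log out now"]
print(len(fuzz(classify_intent, phrases)))
```

The toy classifier normalizes case and ignores punctuation, so it survives every variation; a brittle version that looked for the exact string "reset my password" would be caught by the uppercase, spacing, and capitalization variants.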

2. Multi-Language Support

Transfer learning techniques are helping bridge language gaps in NLP testing. Some industry forecasts predict that by 2026, approximately 70% of new applications will use NLP-based testing tools, up from less than 20% in 2023.

3. Quality Assurance for NLP Tests

While NLP automates test creation, validation and review remain critical. Best practices include manual review of generated tests, domain expert consultation for accuracy, and continuous feedback loops for model improvement.

Panto AI and NLP-Based Test Creation

Revolutionizing Mobile QA with Vibe Debugging

Panto AI represents the next evolution in mobile application testing through its Vibe Debugging platform. This end-to-end solution seamlessly integrates dynamic code reviews, comprehensive security checks, and intelligent QA automation into a unified workflow.

At the heart of Panto AI’s innovation is its sophisticated use of Natural Language Processing for test creation. The platform understands requirements expressed in plain English or automatically generates test cases based on organizational context, eliminating barriers between business requirements and technical test implementation.

Natural Language Test Execution

Panto AI Execute lets quality assurance teams describe use cases in natural language for codeless mobile automation, and the AI agent navigates through the mobile app to execute each step automatically.

This NLP-driven approach transforms how teams interact with testing tools. Instead of writing complex test scripts, QA engineers simply describe what they want to test in conversational language.

The AI agent interprets these instructions, breaks down complex features into executable steps, and runs them on real devices or emulators. This capability bridges the gap between human intent and machine execution, making test automation accessible to both technical and non-technical team members.

From Execution to Automation

Once a test flow executes successfully in Panto Execute, it can be converted into fully automated tests through Panto Automate. This transformation eliminates the need for repeated manual execution while maintaining the benefits of natural language test creation.

The generated automated tests are built on a proprietary framework that’s language, OS, device, and LLM-agnostic.

Panto AI’s automation framework doesn’t rely on traditional tools like Playwright, Appium, or Selenium. Instead, it uses visual recognition, structural analysis, and contextual understanding to identify and interact with UI elements.

Self-Healing Intelligence Through NLP

One of Panto AI’s most powerful features is its self-healing capability, which leverages NLP to maintain test relevance as applications evolve.

The self-healing system re-runs affected steps, adapts the automation logic, and updates test cases accordingly. Users receive notifications about what changed and how the system adapted, maintaining transparency while eliminating manual maintenance overhead.

This intelligent adaptation is possible because Panto AI understands test intent through NLP rather than relying solely on brittle element selectors. When a “Submit” button becomes a “Send” button, traditional tests fail, but Panto AI recognizes semantic equivalence and continues execution.

Context-Aware Testing with Knowledge Base

Panto AI incorporates a Knowledge Base feature that serves as a context-aware store for organizational testing intelligence. Over time, Panto AI builds memory from the information stored there, making test execution faster and smarter.

This contextual learning ensures that generated test cases align with specific organizational patterns, business rules, and application behaviors.

Integration and Enterprise Readiness

Panto AI seamlessly integrates with CI/CD pipelines, enabling automated test execution during deployment cycles. For enterprise teams concerned about security and compliance, Panto AI offers CERT-IN compliance and on-premise deployment options.

This ensures that sensitive application data and test artifacts remain within organizational security boundaries. Combined with real-time reporting, Panto AI provides complete visibility into mobile app quality.

Future Trends and Implementation for NLP-Based Test Creation

The NLP sector is experiencing rapid growth, reflecting heavy investment in natural language technologies across industries. In the testing domain specifically, adoption is accelerating as organizations recognize the efficiency gains.

Industry reports indicate that 78% of testers already use AI to enhance productivity, and this share is expected to grow as NLP capabilities mature. The convergence of large language models, transfer learning, and domain-specific training is making NLP-based testing more accurate and versatile.

Advanced Capabilities on the Horizon

1. Zero-Code Test Orchestration

Future NLP platforms will enable product owners and stakeholders to author acceptance criteria that convert directly into executable test cases without any technical translation. This will further democratize quality assurance across organizations.

2. Unified Quality Governance

The traditional divide between technical and business teams will diminish as testing language becomes standardized through NLP. Shared vocabularies and natural language test cases will serve as both documentation and executable specifications.

3. Resilient Test Intelligence

Test assets will retain validity throughout application modernization and refactoring efforts because they capture intent rather than implementation details. This longevity transforms test creation from a recurring cost into a long-term asset.

4. Integration with Large Language Models

Large Language Models like GPT-4 are already generating test scripts by analyzing software documentation. As these models become more specialized for testing domains, their ability to understand context, identify edge cases, and suggest comprehensive test scenarios is expected to improve significantly.

Research demonstrates that mutation-guided LLM-based test generation can uncover edge cases and detect compiler errors more effectively than manual approaches. By converting natural language descriptions into actionable test insights, LLMs enhance overall application reliability.
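The "mutation-guided" part rests on mutation testing: introduce small deliberate bugs (mutants) into the code and check whether the test suite notices them. The sketch below shows only that measurement step, with a hypothetical `discount` function and hand-written mutants; in mutation-guided LLM approaches, the general idea is that surviving mutants are fed back to the model to request additional tests, a step not shown here. Integer amounts are used so float rounding does not muddy the comparisons.

```python
def discount(total: int) -> int:
    """Function under test (hypothetical): 10% off orders of 100 or more."""
    return total - total // 10 if total >= 100 else total


# Hand-written mutants: small changes of the kind a mutation tool would generate.
MUTANTS = {
    "ge_to_gt": lambda total: total - total // 10 if total > 100 else total,
    "rate_changed": lambda total: total - total // 5 if total >= 100 else total,
    "no_discount": lambda total: total,
}


def mutation_score(suite, mutants):
    """A mutant is killed if any (input, expected) pair in the suite detects it."""
    survivors = [name for name, mutant in mutants.items()
                 if all(mutant(x) == expected for x, expected in suite)]
    return 1 - len(survivors) / len(mutants), survivors


weak_suite = [(50, 50), (200, 180)]
print(mutation_score(weak_suite, MUTANTS))    # the boundary mutant survives
strong_suite = weak_suite + [(100, 90)]       # add the boundary case
print(mutation_score(strong_suite, MUTANTS))
```

The weak suite misses the `>=` to `>` change because it never tests an order of exactly 100; adding that boundary case kills every mutant.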

The combination of NLP for requirement understanding and LLMs for test generation creates a powerful synergy. Teams can describe their testing needs in plain English while AI systems handle the complexity of creating comprehensive, maintainable test suites.
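The division of labor between understanding a requirement and generating a test can be sketched as a small pipeline: build a structured prompt from the requirement, call a model, and accept the output only if it is a well-formed scenario. To avoid inventing any provider's API, the model is injected as a plain callable `llm`; `fake_llm` is a hypothetical offline stand-in, and the prompt wording and validation rules are assumptions.

```python
from typing import Callable

# Assumed prompt wording; tune it for your own model and domain.
PROMPT_TEMPLATE = (
    "You are writing acceptance tests.\n"
    "Requirement: {requirement}\n"
    "Write one scenario using only lines that start with Given, When, Then, or And."
)
ALLOWED_KEYWORDS = {"Given", "When", "Then", "And"}


def generate_scenario(requirement: str, llm: Callable[[str], str]) -> list[str]:
    """Prompt a model with the requirement and accept only a well-formed scenario."""
    prompt = PROMPT_TEMPLATE.format(requirement=requirement.strip())
    lines = [line.strip() for line in llm(prompt).splitlines() if line.strip()]
    first_words = [line.split(maxsplit=1)[0] for line in lines]
    if not lines or any(word not in ALLOWED_KEYWORDS for word in first_words):
        raise ValueError("Model output is not a Given/When/Then scenario")
    if "Then" not in first_words:
        raise ValueError("Scenario states no expected outcome (no Then step)")
    return lines


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call, so the sketch runs offline."""
    return ("Given a registered user\n"
            "When they request a password reset\n"
            "Then a reset email is sent")


for line in generate_scenario("Users can reset their password by email.", fake_llm):
    print(line)
```

Validating the model's output before it enters the suite keeps conversational filler or scenarios without an expected outcome from becoming tests.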

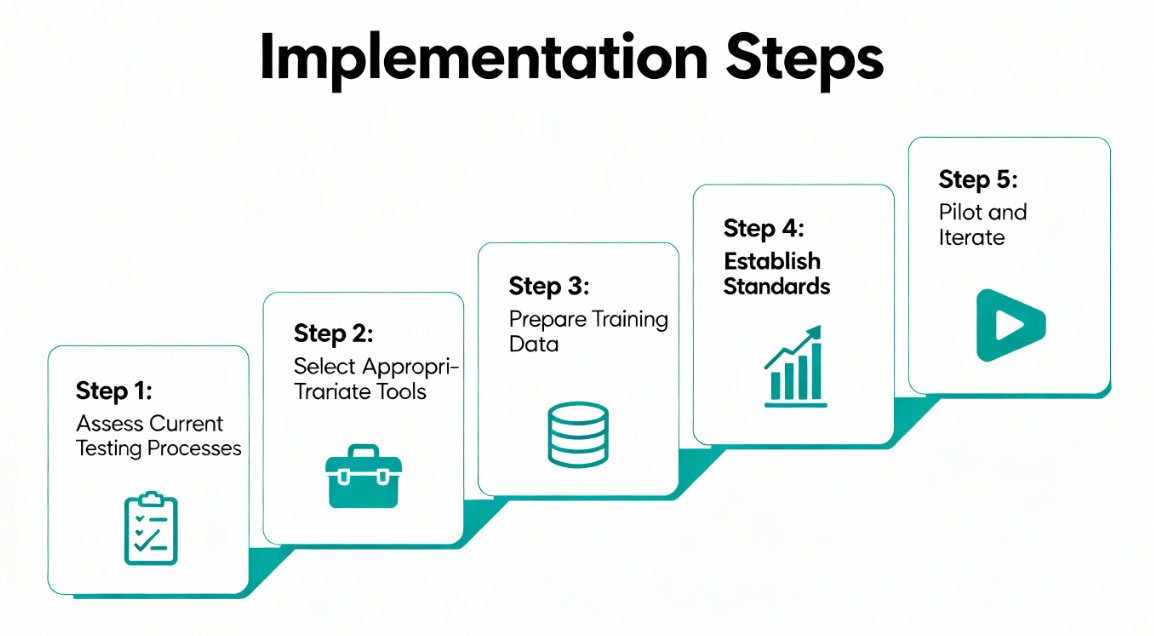

Implementation Steps

Step 1: Assess Current Testing Processes

Evaluate existing manual and automated testing workflows. Identify bottlenecks where NLP could provide immediate value, such as test case creation, maintenance, or requirement analysis.

Step 2: Select Appropriate Tools

Choose NLP-powered testing tools that align with your technology stack and organizational needs. Consider factors like mobile vs. web focus, integration capabilities, and the level of technical expertise required.

Step 3: Prepare Training Data

Compile diverse datasets including user stories, acceptance criteria, and historical test cases. Quality training data improves NLP model accuracy and reduces ambiguity in test generation.

Step 4: Establish Standards

Create style guides and standardized terminology glossaries to ensure consistent requirement documentation. This reduces language complexity and improves NLP interpretation accuracy.
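A terminology glossary can also be applied mechanically before requirements reach the NLP engine, so that "sign in", "login", and "log-in" all arrive as one canonical term. This Python sketch uses simple dictionary substitution rather than a lemmatization model; the glossary entries are hypothetical examples of what a team might standardize.

```python
import re

# Hypothetical team glossary: each variant maps to one canonical term.
GLOSSARY = {
    "sign in": "log in",
    "signin": "log in",
    "login": "log in",
    "log-in": "log in",
    "shopping basket": "cart",
    "basket": "cart",
}

# Try longer variants first so "shopping basket" is replaced before "basket".
_VARIANTS = re.compile(
    r"\b(?:" + "|".join(re.escape(v) for v in sorted(GLOSSARY, key=len, reverse=True)) + r")\b",
    re.IGNORECASE,
)


def normalize(requirement: str) -> str:
    """Replace glossary variants (case-insensitively, whole words only) with canonical terms."""
    return _VARIANTS.sub(lambda m: GLOSSARY[m.group(0).lower()], requirement)


print(normalize("Users sign in, then add items to the shopping basket."))
```

The word-boundary anchors keep the substitution from touching longer words that merely contain a variant, such as "basketball".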

Step 5: Pilot and Iterate

Start with a limited scope project to validate NLP effectiveness in your environment. Gather feedback from technical and non-technical stakeholders, then expand based on lessons learned.

Best Practices for Success

- Provide Clear Context: When describing test scenarios in natural language, include specific objectives and expected outcomes. Detailed prompts generate more relevant and accurate test cases.

- Leverage Domain-Specific Language: Use industry-relevant terminology and scenarios familiar to your organization. This improves NLP accuracy and ensures generated tests reflect actual business requirements.

- Implement Continuous Feedback: Establish feedback loops where domain experts review generated tests and provide corrections. This continuous improvement enhances model performance over time.

- Combine Automated and Manual Review: While NLP automates test generation, human review ensures critical scenarios are covered and edge cases are addressed. Balance the speed of automated generation with the judgment of human reviewers.

- Monitor and Optimize: Track accuracy, test diversity, and defect and duplication detection rates to measure NLP testing effectiveness. Use these metrics to refine prompts, update training data, and improve overall test quality.
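Two of the metrics in the list above, duplication rate and defect detection rate, are straightforward to compute. In this sketch, near-duplicate tests are detected by token overlap (Jaccard similarity) with an assumed threshold of 0.8, and the defect counts are placeholders that would come from your own issue tracking.

```python
import re
from itertools import combinations


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def duplication_rate(tests: list[str], threshold: float = 0.8) -> float:
    """Share of generated tests that near-duplicate an earlier test."""
    token_sets = [tokens(t) for t in tests]
    duplicates = {j for i, j in combinations(range(len(tests)), 2)
                  if jaccard(token_sets[i], token_sets[j]) >= threshold}
    return len(duplicates) / len(tests) if tests else 0.0


def defect_detection_rate(defects_found_by_tests: int, total_defects: int) -> float:
    """Fraction of known defects that the test suite caught."""
    return defects_found_by_tests / total_defects if total_defects else 0.0


generated = [
    "Log in with a valid password",
    "Log in with valid password",
    "Add an item to the cart",
    "Remove an item from the cart",
]
print(duplication_rate(generated), defect_detection_rate(9, 12))
```

Here the second test differs from the first only by the word "a", so one of the four tests counts as a duplicate (rate 0.25); the add and remove tests share some words but fall well below the threshold.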

Conclusion

NLP-based test creation represents a fundamental shift in how organizations approach QA. By bridging the gap between human intent and machine execution, these technologies enable faster development cycles, better test coverage, and more collaborative testing processes.

As platforms like Panto AI continue advancing the state of the art, the future of testing is increasingly accessible, intelligent, and aligned with business goals. Organizations that embrace NLP-powered testing stand to gain competitive advantages through faster time-to-market and superior product quality.