Mobile app quality is critical because users quickly abandon apps that crash or frustrate them, and many uninstall a poorly performing app within days. To prevent this, QA managers rely on testing metrics (quantitative indicators of test coverage, defects, and user experience) to monitor and improve mobile app quality.

These metrics provide clarity on where to invest time, what to fix first, and how to measure the real impact of testing efforts.

Key Metrics for Mobile QA

Effective mobile QA is built on metrics that track quality from multiple angles. The most important ones fall into five categories:

1. Performance and Reliability Metrics

These measure the app’s stability and speed across devices and conditions.

- Crash Rate: The percentage of user sessions that end in a crash.

- ANR Rate: The rate of “Application Not Responding” errors or hangs.

- App Load Time: How long it takes for the app or its features to become usable.

High crash or ANR rates correlate directly with user drop-off. Monitoring and reducing them makes for a smoother user experience. Related measures include API latency, frame rendering time, and battery or memory usage under load.
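As a rough illustration, both rates reduce to the same arithmetic: affected sessions divided by total sessions. The sketch below assumes a per-session definition, and the function name and sample counts are hypothetical. Analytics platforms may define these rates differently, for example per daily active user.

```python
def rate_percent(affected_sessions: int, total_sessions: int) -> float:
    """Share of sessions affected by an event, as a percentage."""
    if total_sessions <= 0:
        raise ValueError("total_sessions must be positive")
    return 100.0 * affected_sessions / total_sessions


# Hypothetical one-day sample, not real data.
total_sessions = 12_000
crashed_sessions = 42
anr_sessions = 18

crash_rate = rate_percent(crashed_sessions, total_sessions)
anr_rate = rate_percent(anr_sessions, total_sessions)
print(f"Crash rate: {crash_rate:.2f}%  ANR rate: {anr_rate:.2f}%")
```

Tracking the same calculation per device model or OS version usually shows where the instability is concentrated.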

2. Coverage Metrics

Coverage metrics gauge how thoroughly testing addresses the app.

- Test Case Coverage: The percentage of features or requirements tested.

- Code Coverage: The proportion of code executed during tests.

- Device and OS Coverage: How many device models and OS versions are included in testing.

- Localization Coverage: Ensuring all supported languages, regions, and currencies are tested.

Because mobile ecosystems are so fragmented, missing coverage on key devices or OS versions can lead to critical issues that only appear for certain user groups.
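One way to make device and OS coverage reflect real risk is to weight each device/OS pair by how many sessions it accounts for, rather than counting models. The sketch below is a minimal example under that assumption. The function name, device names, and session counts are all hypothetical.

```python
from typing import Dict, Set, Tuple

DeviceOS = Tuple[str, str]  # (device model, OS version)


def weighted_device_coverage(sessions_by_config: Dict[DeviceOS, int],
                             tested: Set[DeviceOS]) -> float:
    """Percentage of user sessions that run on a tested device/OS pair."""
    total = sum(sessions_by_config.values())
    if total <= 0:
        raise ValueError("no sessions recorded")
    covered = sum(count for config, count in sessions_by_config.items()
                  if config in tested)
    return 100.0 * covered / total


# Hypothetical usage data, not real market share.
sessions_by_config = {
    ("Phone A", "Android 14"): 4000,
    ("Phone B", "Android 13"): 3500,
    ("Phone C", "iOS 17"): 1500,
    ("Phone D", "iOS 16"): 1000,
}
tested = {("Phone A", "Android 14"), ("Phone C", "iOS 17")}

coverage = weighted_device_coverage(sessions_by_config, tested)
print(f"Usage-weighted device coverage: {coverage:.1f}%")
```

Here two of four configurations are tested, but they account for just over half of all sessions, and that figure is the one that matters to users.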

3. Defect Metrics

Defect metrics quantify how many bugs are found — and how serious they are.

- Defect Density: Number of defects per module or per thousand lines of code (KLOC).

- Defect Leakage: Bugs missed during QA that appear in production.

- Severity Index: Weighted measurement of how severe reported defects are.

- Fix Rate: The percentage of reported bugs resolved before release.

Tracking these helps identify weak spots in testing and development, as well as overall product stability trends.
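The four defect metrics above can be sketched as simple formulas. The definitions here are common conventions rather than the only ones: leakage is taken as production defects over all defects found, and the severity weights are an arbitrary assumption that each team would set for itself.

```python
from typing import List

# Assumed weights; teams typically choose their own scale.
SEVERITY_WEIGHTS = {"critical": 4, "major": 3, "minor": 2, "trivial": 1}


def defect_density(defects: int, kloc: float) -> float:
    """Defects per thousand lines of code."""
    return defects / kloc


def defect_leakage(found_in_production: int, found_in_qa: int) -> float:
    """Percentage of all known defects that escaped to production."""
    return 100.0 * found_in_production / (found_in_production + found_in_qa)


def severity_index(severities: List[str]) -> float:
    """Average severity weight across reported defects."""
    return sum(SEVERITY_WEIGHTS[s] for s in severities) / len(severities)


def fix_rate(fixed: int, reported: int) -> float:
    """Percentage of reported defects resolved before release."""
    return 100.0 * fixed / reported


# Hypothetical release figures.
print(defect_density(30, 12.0))
print(defect_leakage(5, 45))
print(severity_index(["critical", "major", "minor", "minor"]))
print(fix_rate(36, 40))
```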

4. User Experience Metrics

These metrics connect QA outcomes directly to how real users perceive the app.

- App Store Rating and Reviews: Reflect overall satisfaction and usability.

- User Retention Rate: Measures how many users keep using the app over time.

- Conversion Rate: Tracks how many users complete important in-app goals (like purchases or sign-ups).

If ratings drop or retention declines, it often means something in performance or UX needs immediate attention.

5. ROI and Productivity Metrics

Finally, QA needs to demonstrate impact on delivery speed and efficiency.

- Release Velocity: Measures how fast new features move from development to production.

- Cost of Quality: Compares testing costs to the costs saved by finding bugs early.

- Test Execution Rate: Ratio of executed tests to planned ones.

- Automation Coverage: Percentage of total tests that are automated.

These metrics show whether technical debt is being managed and whether QA investments are paying off through faster releases, fewer post-release incidents, and improved team productivity.
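For reporting, it can help to keep the inputs for these ratios in one record per release. The sketch below assumes a hypothetical `ReleaseSnapshot` structure and made-up numbers. The savings figure in particular is an estimate that each team has to derive for itself.

```python
from dataclasses import dataclass


@dataclass
class ReleaseSnapshot:
    planned_tests: int
    executed_tests: int
    automated_tests: int
    total_tests: int
    testing_cost: float        # spend on QA for this release
    estimated_savings: float   # estimated cost avoided by early bug detection

    def execution_rate(self) -> float:
        """Executed tests as a percentage of planned tests."""
        return 100.0 * self.executed_tests / self.planned_tests

    def automation_coverage(self) -> float:
        """Automated tests as a percentage of all tests."""
        return 100.0 * self.automated_tests / self.total_tests

    def savings_per_unit_cost(self) -> float:
        """Estimated savings returned per unit of testing spend."""
        return self.estimated_savings / self.testing_cost


# Hypothetical release, not real data.
snapshot = ReleaseSnapshot(planned_tests=400, executed_tests=380,
                           automated_tests=300, total_tests=500,
                           testing_cost=20_000.0, estimated_savings=50_000.0)
print(snapshot.execution_rate(), snapshot.automation_coverage(),
      snapshot.savings_per_unit_cost())
```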

Making Metrics Actionable

Collecting metrics is just the first step. The real power lies in using them to guide action. QA teams should ask:

- “Do our crash rates affect retention?”

- “Are we testing the most-used devices and OS versions?”

- “Which parts of the app cause the most severe issues?”

Dashboards should emphasize user-centric and risk-based insights, not just vanity numbers like “tests run.” Meaningful metrics help prioritize fixes, optimize coverage, and communicate value to product and business stakeholders.
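A question like “Do our crash rates affect retention?” can get a first, rough answer from a correlation across releases. The sketch below computes a Pearson coefficient by hand. The per-release figures are invented for illustration, and a strong correlation alone does not prove that crashes cause churn.

```python
import math
from statistics import mean
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length >= 2")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical per-release figures, not real data.
crash_rate_pct = [0.2, 0.4, 0.9, 1.5, 0.3]
day7_retention_pct = [41.0, 39.5, 35.0, 30.5, 40.0]

r = pearson(crash_rate_pct, day7_retention_pct)
print(f"Correlation between crash rate and day-7 retention: {r:.2f}")
```

A value close to -1 would suggest that releases with more crashes also retain fewer users, which makes a case for prioritizing stability work.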

Challenges in Traditional Mobile QA

Mobile QA is more complex than web testing because of device fragmentation — thousands of Android models and multiple iOS versions. Testing everything manually is nearly impossible, and automated tests often become brittle. Small UI or OS updates can break hundreds of test scripts, leading to time-consuming maintenance instead of active testing.

This constant upkeep slows down delivery. Teams may have large automated suites that look impressive on paper, but in reality, they miss critical user flows or fail on newer devices. As a result, testing becomes reactive instead of predictive, and metrics stop reflecting real user quality.

How AI-Driven QA Changes the Game

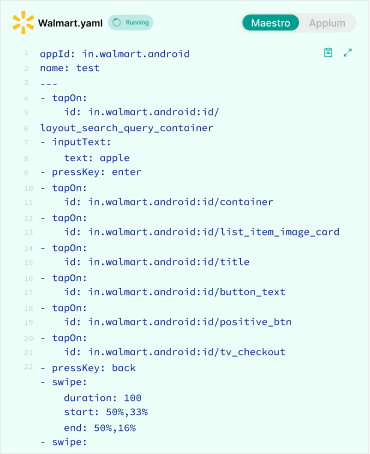

AI-powered QA testing is redefining mobile QA by automating everything from test creation to maintenance. Instead of relying on humans to write and update scripts, teams can hand that work to AI agents. A related practice, vibe debugging, uses AI agents to diagnose and fix code issues through natural language conversations rather than traditional breakpoints or manual inspection.

Here’s how AI-driven mobile QA improves traditional QA metrics:

1. Automated Test Generation

AI can generate test cases directly from natural-language requirements or user stories. This dramatically expands test coverage, including edge cases that human testers might overlook.

2. Intelligent Test Execution

AI-powered tools can execute tests on real devices or emulators at massive scale. They run parallel tests across hundreds of device and OS combinations in minutes, boosting both device coverage and test execution rate.

3. Self-Healing Automations

When a UI element changes, AI can detect it visually or contextually and automatically update the test script. This reduces test maintenance effort and keeps automation coverage consistent, even as the app evolves.
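To make the idea concrete, here is a deliberately simplified sketch of self-healing: when the recorded element ID no longer exists, the lookup falls back to the visible label and updates the stored locator. This is not how any particular tool works. Real products use richer visual and contextual signals, and the `Locator` class, field names, and screen representation here are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Locator:
    resource_id: str  # ID recorded when the test was written
    text: str         # visible label recorded at the same time


def find_element(screen: List[Dict[str, str]],
                 locator: Locator) -> Tuple[Optional[Dict[str, str]], bool]:
    """Return (element, healed). Heals the locator if only the ID changed."""
    for element in screen:
        if element.get("resource_id") == locator.resource_id:
            return element, False
    # Fallback: accept a unique match on the visible label.
    matches = [e for e in screen if e.get("text") == locator.text]
    if len(matches) == 1:
        locator.resource_id = matches[0]["resource_id"]  # persist the fix
        return matches[0], True
    return None, False  # ambiguous or missing: leave for a human to review


# The pay button's ID was renamed in a new build (hypothetical screen dump).
screen = [
    {"resource_id": "btn_cancel", "text": "Cancel"},
    {"resource_id": "btn_pay_v2", "text": "Pay now"},
]
pay_button = Locator(resource_id="btn_pay", text="Pay now")
element, healed = find_element(screen, pay_button)
print(healed, pay_button.resource_id)
```

Refusing to heal when the fallback is ambiguous is a deliberate choice in this sketch: a wrong guess would hide a real regression.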

4. Smart Test Prioritization

AI analyzes code changes, historical failures, and risk factors to prioritize test runs. Critical paths like payments or authentication are tested first, ensuring faster detection of major issues.
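A hand-written scoring rule gives a feel for what such prioritization does, even though AI-based tools would learn the weighting from data. In the sketch below, the `CandidateTest` fields, the weights, and the test names are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CandidateTest:
    name: str
    critical_path: bool          # e.g. payments or authentication
    touches_changed_code: bool   # covers code modified in this build
    recent_failure_rate: float   # share of recent runs that failed, 0.0 to 1.0


def risk_score(test: CandidateTest) -> float:
    """Higher score means run earlier. Weights are arbitrary assumptions."""
    return (3.0 * test.critical_path
            + 2.0 * test.touches_changed_code
            + 1.0 * test.recent_failure_rate)


def prioritize(tests: List[CandidateTest]) -> List[CandidateTest]:
    """Order tests from highest to lowest risk score."""
    return sorted(tests, key=risk_score, reverse=True)


# Hypothetical suite.
suite = [
    CandidateTest("settings_theme", False, False, 0.05),
    CandidateTest("profile_avatar", False, True, 0.30),
    CandidateTest("login", True, False, 0.00),
    CandidateTest("checkout_payment", True, True, 0.10),
]
ordered = [t.name for t in prioritize(suite)]
print(ordered)
```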

5. Predictive Analytics

AI correlates test failures, code changes, and crash reports to predict where future defects might occur. These insights make metrics like defect density and leakage rate more meaningful — and actionable.

Together, these capabilities make QA faster, smarter, and more reliable — improving both the efficiency metrics (like time to execute tests) and quality metrics (like user satisfaction and defect rates).

Manual vs. AI-Driven Mobile QA: Which Finds More Bugs, Faster?

The effectiveness of a mobile QA strategy isn’t just about catching bugs; it’s about how quickly, accurately, and consistently those bugs are found across a fragmented device landscape. Manual testing has long been the backbone of quality assurance, but it struggles to keep pace with the speed and complexity of modern app development.

AI-driven mobile QA, on the other hand, leverages automation, reinforcement learning, and self-healing mechanisms to deliver deeper coverage, faster feedback, and smarter defect detection. The comparison below highlights how AI-driven QA outperforms manual testing across key dimensions like speed, accuracy, scalability, and cost-efficiency.

Table: Manual QA vs. AI-Driven QA

| Dimension | Manual QA | AI-Driven QA |
|---|---|---|
| Speed of defect detection | Slow and sequential. Testers must write, execute, and review test cases manually, often finding bugs late in the cycle. | Fast and continuous. AI-driven tests run automatically on every build, catching issues in real time with significantly shorter execution times. |
| Test coverage (code, device, scenario) | Limited by human capacity. QA teams can only test a small set of devices and scenarios due to time and resource limits. | Broad and systematic. AI generates and executes hundreds of tests in parallel across vast device and OS combinations, greatly improving coverage. |
| Scalability (devices & OS versions) | Poor scalability. Testing more devices or OS versions requires additional testers and physical hardware. | Highly scalable. Cloud-based AI testing runs on multiple devices and OS versions in parallel without extra human effort. |
| Accuracy in UI regressions | Manual testers can overlook small visual or layout shifts, leading to inconsistent regression checks. | AI visual testing compares screens pixel-by-pixel, detecting subtle UI regressions consistently and accurately. |
| Handling of edge-case flows | Depends on tester creativity and time; rare or complex user paths are often skipped or missed. | AI explores and generates diverse test scenarios, including edge cases, based on data patterns and user behavior analysis. |
| Maintenance effort (UI changes) | Very high. UI or workflow updates require manual rework of test steps, slowing down teams. | Low. AI tests self-heal when elements change, automatically adjusting locators and steps with minimal human input. |
| Time to execute full test suites | Long. Manual execution can take hours or days, delaying releases. | Short. AI-driven suites run in minutes using parallel execution across environments. |
| Frequency & reliability of runs | Infrequent and inconsistent. Manual runs depend on tester availability and can vary in accuracy. | Frequent and reliable. AI executes consistent tests automatically in continuous integration environments. |
| Cost-effectiveness over time | High ongoing costs as testing scales linearly with app complexity and team size. | High ROI. Initial setup costs are offset by long-term savings in time, labor, and reduced post-release defects. |

The Future: Panto AI’s Upcoming Mobile QA Agent

One of the most exciting developments in this space is Panto AI’s upcoming AI-driven QA agent for mobile testing. Panto aims to make the entire QA process so seamless that a bot itself can navigate through new features, execute tests on real devices, and create test reports automatically.

The Panto AI agent will be designed to:

- Understand requirements in natural language or even generate test cases automatically based on organizational context.

- Execute those tests on both real devices and emulators at scale.

- Automate backend test runs across device matrices within seconds.

- Self-heal broken automations when UI elements change — remapping user journeys and providing feedback autonomously.

With this kind of intelligence, testing will shift from being a repetitive task to an adaptive, self-learning process. Unlike traditional QA teams, teams using Panto will be able to focus on strategy and innovation while the AI-driven mobile QA agent handles execution, maintenance, and reporting.

Testing metrics are the backbone of mobile QA, helping teams measure everything from reliability to user satisfaction. But as mobile ecosystems grow more complex, maintaining those metrics manually becomes unsustainable.

AI-driven mobile QA, together with practices like vibe debugging, offers a new way forward: one that’s faster, more adaptive, and truly scalable. With upcoming innovations, we’re moving toward a future where testing is not just automated, but autonomous: intelligent systems that understand, execute, and optimize quality at every stage of the release cycle.