The software testing landscape is undergoing a seismic shift. Traditional QA, once heralded as the solution to software quality challenges, now struggles to keep pace with modern development velocity. The way forward lies not in working harder but in working smarter, through AI-powered testing.

AI-powered testing represents more than just an incremental improvement over conventional automation. By leveraging machine learning algorithms, natural language processing, and predictive analytics, AI-driven test automation delivers unprecedented efficiency, accuracy, and adaptability that manual and traditional automated approaches simply cannot match.

The market validates this transformation. The global AI-powered software testing tool market reached $3.4 billion in 2025 and is projected to reach $6.4 billion by 2035, a compound annual growth rate of 6.6%. More telling than market size, however, is adoption velocity: AI testing adoption more than doubled, from 7% in 2023 to 16% in 2025, and 68% of surveyed teams now use AI-driven solutions for critical QA activities.

The AI Testing Revolution: Core Capabilities Transforming QA

Self-Healing Test Automation: The End of Maintenance Nightmares

Traditional automated tests break constantly. A simple button rename, UI restructuring, or element relocation sends test suites into failure cascades, demanding immediate manual intervention. Teams spend more time maintaining tests than creating new ones—a problem so pervasive that maintenance costs often exceed the original automation investment.

Self-healing test automation removes much of this bottleneck. Using AI-powered algorithms, self-healing frameworks detect application changes in real time and update test scripts automatically. When an element identifier changes, the AI analyzes the UI, identifies alternative matching elements through pattern recognition, and updates the locator dynamically, all while the test continues executing.
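To make the fallback step concrete, here is a minimal sketch, assuming UI elements are represented as plain dictionaries of attributes and that a locator stores the attributes last seen for its target. The attribute weights, the 0.6 threshold, and the names `similarity` and `find_element` are hypothetical. Commercial self-healing tools use their own signals and models; this sketch substitutes simple string similarity from Python's `difflib` for machine learning.

```python
from difflib import SequenceMatcher

# Hypothetical weights: how much each attribute counts toward a match.
WEIGHTS = {"id": 0.4, "text": 0.3, "css_class": 0.2, "tag": 0.1}


def similarity(a: dict, b: dict) -> float:
    """Weighted string similarity (0..1) across the weighted attributes."""
    return sum(
        weight * SequenceMatcher(None, a.get(attr, ""), b.get(attr, "")).ratio()
        for attr, weight in WEIGHTS.items()
    )


def find_element(dom: list, locator: dict, threshold: float = 0.6):
    """Return (element, healed): try the exact id first, then the closest match."""
    for element in dom:
        if "id" in locator and element.get("id") == locator["id"]:
            return element, False  # primary locator still works, no healing
    if not dom:
        return None, False
    best = max(dom, key=lambda el: similarity(locator, el))
    if similarity(locator, best) >= threshold:
        return best, True  # caller should store best's attributes as the new locator
    return None, False  # nothing similar enough: report a genuine failure


# The button's id was renamed, but its text, class, and tag are unchanged.
locator = {"id": "submit-btn", "text": "Submit", "css_class": "btn primary", "tag": "button"}
dom = [
    {"id": "nav-home", "text": "Home", "css_class": "nav-link", "tag": "a"},
    {"id": "submit-button", "text": "Submit", "css_class": "btn primary", "tag": "button"},
]
element, healed = find_element(dom, locator)
print(element["id"], healed)  # submit-button True
```

The threshold is the key design choice: set too low, the matcher "heals" onto the wrong element and hides a real regression; set too high, it falls back to ordinary test failures.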

The impact is dramatic. Organizations implementing self-healing automation report 80-94% reductions in test maintenance effort. In one case, an insurance company projected $340,000 in annual automation savings but instead faced $480,000 in maintenance costs with traditional tools; after adopting self-healing capabilities, it cut maintenance hours by 94%. Self-healing doesn’t just save time; it fundamentally changes the economics of test automation.

Self-healing systems operate through sophisticated workflows that combine real-time monitoring, error detection, AI-driven matching techniques, and continuous optimization through machine learning. As these systems process more test executions, they learn from patterns in element changes and failures, becoming increasingly accurate at predicting and adapting to modifications. The result is QA automation that remains effective even in rapidly evolving application environments.
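The learning step can be illustrated with something much simpler than a trained model: count how often each element attribute survived past UI changes, and turn those counts into the weights a similarity-based fallback matcher would use. This is a minimal sketch under that assumption; the attribute names, the smoothing constant, and `learn_weights` are hypothetical, and production systems may learn far richer patterns.

```python
from collections import Counter

ATTRIBUTES = ("id", "text", "css_class", "tag")


def learn_weights(history: list, smoothing: float = 1.0) -> dict:
    """Turn observed (before, after) element changes into attribute weights.

    Attributes that usually survive UI changes get more weight; attributes that
    change often (such as generated ids) get less. The weights sum to 1.
    """
    stable = Counter()
    for before, after in history:
        for attr in ATTRIBUTES:
            if before.get(attr) == after.get(attr):
                stable[attr] += 1
    # Additive smoothing keeps an attribute that never survived from reaching zero.
    raw = {attr: stable[attr] + smoothing for attr in ATTRIBUTES}
    total = sum(raw.values())
    return {attr: value / total for attr, value in raw.items()}


# Three observed changes: ids changed every time, tags and text never did.
history = [
    ({"id": "btn-1", "text": "Pay", "css_class": "btn", "tag": "button"},
     {"id": "btn-7", "text": "Pay", "css_class": "btn", "tag": "button"}),
    ({"id": "q-12", "text": "Search", "css_class": "input", "tag": "input"},
     {"id": "q-40", "text": "Search", "css_class": "input lg", "tag": "input"}),
    ({"id": "ok", "text": "OK", "css_class": "btn", "tag": "button"},
     {"id": "confirm", "text": "OK", "css_class": "btn", "tag": "button"}),
]
weights = learn_weights(history)
print({attr: round(w, 3) for attr, w in weights.items()})
# {'id': 0.083, 'text': 0.333, 'css_class': 0.25, 'tag': 0.333}
```

As more executions are recorded, the counts shift toward whichever attributes prove stable in that particular application, which is the "becoming increasingly accurate" effect in miniature.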

AI-Driven Test Case Generation: From Hours to Seconds

Creating comprehensive test cases manually demands significant time and expertise. Testers must anticipate edge cases, consider user behavior patterns, and ensure adequate coverage—all while racing against aggressive release schedules. AI test case generation transforms this labor-intensive process into an automated, intelligent workflow.

Leveraging natural language processing and machine learning, AI systems analyze requirements documents, user stories, and application behavior to generate test scenarios dynamically. These AI-generated test cases aren’t simple replicas of manual tests—they actively identify edge cases and negative scenarios that human testers frequently overlook, significantly enhancing test coverage.

The efficiency gains are staggering. AI test case generation reduces creation time by over 90%, converting hours or days of manual effort into seconds-long automated processes. Teams can produce detailed test cases with clearly defined steps, preconditions, and expected results ready for immediate execution in both manual and automated testing workflows.
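The tools described above derive cases from requirements with NLP and machine learning models, which a short example cannot reproduce. As a stand-in, the sketch below uses classic boundary-value analysis, a deterministic technique with no AI involved, to show the output shape the paragraph describes: each case carries a title, preconditions, steps, and an expected result, including negative cases. The sign-up form, field name, and length limits are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class GeneratedCase:
    title: str
    preconditions: list
    steps: list
    expected: str


def boundary_cases(field_name: str, min_len: int, max_len: int) -> list:
    """Build positive and negative length tests just inside and outside the limits."""
    cases = []
    for length in sorted({min_len - 1, min_len, max_len, max_len + 1}):
        if length < 0:
            continue  # a negative length cannot be typed
        valid = min_len <= length <= max_len
        cases.append(GeneratedCase(
            title=f"{field_name} with {length} characters ({'valid' if valid else 'invalid'})",
            preconditions=["User is on the sign-up form"],
            steps=[f"Enter {length} characters into the {field_name} field", "Submit the form"],
            expected="Form is accepted" if valid else f"Validation error shown for {field_name}",
        ))
    return cases


for case in boundary_cases("username", 3, 20):
    print(case.title, "->", case.expected)
```

An AI generator would add value on top of this by inferring the limits and scenarios from the requirements text instead of needing them spelled out.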

More importantly, AI-generated test suites can achieve broader coverage. By analyzing historical data, code complexity, and user interaction patterns, AI identifies testing gaps that manual approaches miss. Broader coverage, in turn, means fewer defects escape to production and software becomes more reliable.

Intelligent Defect Prediction: Catching Bugs Before They Happen

Reactive testing waits for defects to manifest before addressing them. Predictive testing, powered by AI analytics, forecasts where defects will occur before they impact production environments. This shift from detection to prediction represents one of AI’s most transformative contributions to quality assurance.

AI defect prediction models leverage machine learning techniques—regression analysis, decision trees, and neural networks—to analyze historical test data and identify code areas prone to failures. These models examine indicators like code churn, developer onboarding and activity, defect density, and test failure trends to predict high-risk modules requiring focused testing attention.
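Of the techniques listed, regression analysis is the easiest to show in a few lines. The sketch below fits a logistic regression with plain gradient descent on a tiny synthetic dataset built from the kinds of indicators mentioned: code churn, developer activity, defect density, and test failure trends. It assumes the features are already scaled to 0..1; the data, learning rate, epoch count, and function names are hypothetical, and a real model needs far more history plus proper validation.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(X, y, lr=0.5, epochs=2000):
    """Fit logistic-regression weights and bias with batch gradient descent."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            # Gradient of the log-loss is (prediction - label) * feature.
            error = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += error * xi[j]
            grad_b += error
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def defect_risk(w, b, x) -> float:
    """Predicted probability that a module is defect-prone."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Synthetic history, features pre-scaled to 0..1:
# [code churn, active developers, past defect density, recent test-failure rate]
X = [
    [0.9, 0.8, 0.7, 0.6], [0.8, 0.6, 0.9, 0.7], [0.7, 0.9, 0.6, 0.8],  # had defects
    [0.1, 0.2, 0.1, 0.0], [0.2, 0.1, 0.0, 0.1], [0.3, 0.2, 0.2, 0.1],  # stayed clean
]
y = [1, 1, 1, 0, 0, 0]
w, b = train_logistic(X, y)
print(round(defect_risk(w, b, [0.85, 0.7, 0.8, 0.7]), 2))   # high-churn module
print(round(defect_risk(w, b, [0.15, 0.1, 0.1, 0.05]), 2))  # quiet module
```

The output is a probability rather than a yes/no verdict, which is what lets QA teams rank modules by risk instead of treating every module the same.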

Reported accuracy for AI defect prediction is high. Neural network models have been reported to reach 94.2% accuracy with a 92.5% F-score in identifying defect-prone code, while Random Forest models reach 92.5% accuracy and are easier to explain. Organizations implementing predictive analytics report 30% improvements in bug detection efficiency and 25% reductions in post-release defects.
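Accuracy and F-score measure different things, which is why results are often reported with both. Accuracy counts every correct call, including the easy "this module is clean" predictions, while the F-score (the harmonic mean of precision and recall) looks only at how well the defect-prone class is found. The confusion-matrix counts below are hypothetical, not taken from the results cited above; they show how the two numbers can diverge when most modules are clean.

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Compute accuracy, precision, recall, and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical: 200 modules, 45 truly defect-prone; the model flags 50, 40 correctly.
metrics = classification_metrics(tp=40, fp=10, fn=5, tn=145)
print({name: round(value, 3) for name, value in metrics.items()})
# {'accuracy': 0.925, 'precision': 0.8, 'recall': 0.889, 'f1': 0.842}
```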

Beyond accuracy, predictive analytics enables strategic resource allocation. By identifying high-risk areas proactively, QA teams can concentrate testing where it matters most; teams report 15% gains in developer velocity alongside 25% fewer defects. This data-driven approach turns quality assurance from exhaustive testing into targeted, risk-based validation.
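Risk-based validation can be framed as choosing which tests to run within a fixed time budget. The sketch below assumes each test already has a risk score (for example, from a defect-prediction model) and an estimated runtime, then greedily picks the tests that cover the most risk per minute. Greedy selection is a common heuristic for this knapsack-style problem, not an optimal solver; the test names, scores, and 20-minute budget are hypothetical.

```python
def prioritize(tests: list, budget_minutes: float) -> list:
    """Pick tests with the most risk per minute until the time budget is used up."""
    ranked = sorted(tests, key=lambda t: t["risk"] / t["minutes"], reverse=True)
    selected, used = [], 0.0
    for test in ranked:
        if used + test["minutes"] <= budget_minutes:
            selected.append(test["name"])
            used += test["minutes"]
    return selected


# Hypothetical risk scores (0..1) and runtimes in minutes.
tests = [
    {"name": "checkout_regression", "risk": 0.9, "minutes": 12},
    {"name": "login_smoke", "risk": 0.6, "minutes": 3},
    {"name": "profile_settings", "risk": 0.2, "minutes": 8},
    {"name": "search_filters", "risk": 0.5, "minutes": 5},
]
print(prioritize(tests, budget_minutes=20))
# ['login_smoke', 'search_filters', 'checkout_regression']
```

The low-risk `profile_settings` suite is deferred rather than deleted; it can still run in a nightly job where time is less constrained.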

Visual Testing with AI: Beyond Pixel Comparisons

User interfaces define user experience, yet traditional visual testing relies on rigid pixel-to-pixel comparisons that generate excessive false positives and miss contextual inconsistencies. AI-powered visual testing transcends these limitations through computer vision and machine learning that understand visual content contextually, much like human perception.

AI visual testing systems employ self-learning algorithms that continuously improve based on past test results. Rather than flagging every minor pixel difference, these intelligent systems detect stylistic changes, layout shifts, and functional visual defects that actually impact user experience. Smart localization detection identifies visual issues in multi-language versions, while real-time feedback provides instant alerts during test execution.

The benefits extend beyond accuracy. AI visual testing tools reduce visual noise by filtering out insignificant changes, so testers can focus on the differences that actually affect UI consistency and functionality. In some advanced platforms, a Visual Review Agent streamlines the review process, enabling teams to approve visual changes three times faster.
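The noise-filtering idea can be sketched without any machine learning: instead of failing on every pixel that differs, count only pixels that change by more than a tolerance, and flag the screenshot only when enough of them change. This is a simple thresholded diff, a baseline rather than the computer-vision models described above, and the grayscale list-of-rows image format, tolerance, and area threshold are hypothetical. A strict pixel-exact comparison would flag both candidates in the example; this one flags only the real defect.

```python
def visual_diff(baseline, candidate, pixel_tolerance=16, area_threshold=0.01):
    """Compare two grayscale images given as lists of rows of 0..255 values.

    A pixel counts as changed only if it differs by more than pixel_tolerance;
    the screenshot is flagged only if the changed share exceeds area_threshold.
    Returns (flagged, changed_fraction).
    """
    same_shape = len(baseline) == len(candidate) and all(
        len(row_a) == len(row_b) for row_a, row_b in zip(baseline, candidate)
    )
    if not same_shape:
        return True, 1.0  # a size change always deserves a human look
    total = sum(len(row) for row in baseline)
    if total == 0:
        return False, 0.0
    changed = sum(
        1
        for row_a, row_b in zip(baseline, candidate)
        for a, b in zip(row_a, row_b)
        if abs(a - b) > pixel_tolerance
    )
    fraction = changed / total
    return fraction > area_threshold, fraction


baseline = [[255] * 100 for _ in range(100)]
# Anti-aliasing-style noise: scattered pixels shift slightly in brightness.
noisy = [[250 if (x + y) % 7 == 0 else 255 for x in range(100)] for y in range(100)]
# A real defect: a 20x20 block rendered black.
broken = [[0 if x < 20 and y < 20 else 255 for x in range(100)] for y in range(100)]
print(visual_diff(baseline, noisy))   # (False, 0.0)
print(visual_diff(baseline, broken))  # (True, 0.04)
```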

Cross-browser and cross-device validation ensures consistent experiences across platforms without manual inspection overhead. Self-healing capabilities adapt to minor UI changes automatically, reducing the need for constant test script updates. Combined with regression testing that automatically detects visual defects after each update, AI visual testing helps teams keep interfaces consistent at DevOps velocity.

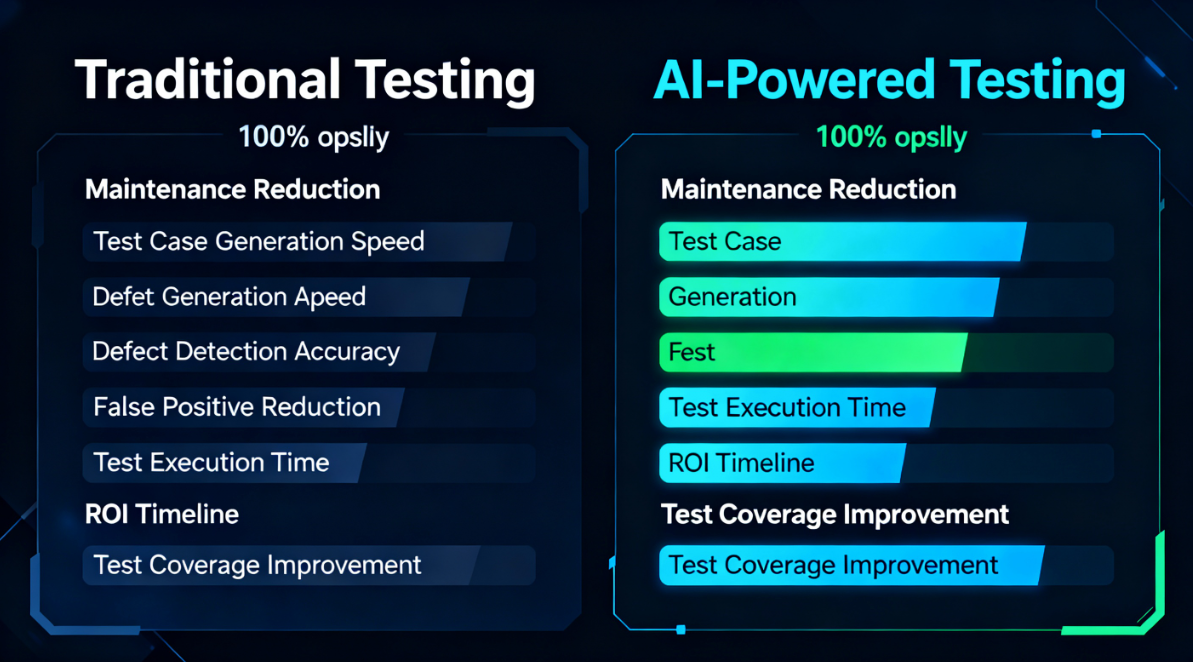

Traditional vs AI-Powered Testing: The Performance Gap

The quantitative differences between traditional and AI-powered testing approaches reveal why adoption accelerates across the industry:

| Metric | Traditional Testing | AI-Powered Testing |
|---|---|---|
| Test Maintenance Effort | Manual updates required | 80-94% reduction |
| Test Case Generation Speed | Hours to days per test | Seconds to minutes |
| Defect Detection Accuracy | 60-70% | 92.5-94.2% |
| False Positive Rate | High (20-30%) | Up to 86% reduction |
| Test Execution Time | Baseline | 40-67% faster |
| ROI Timeline | 12+ months | 3-6 months |
| Test Coverage Improvement | Limited by manual effort | 30-50% increase |

These metrics show why organizations are rapidly moving to AI-driven approaches. The efficiency gains are not marginal; they are transformative. Teams that replace 50% or more of manual testing with codeless AI QA automation report marked improvements in release velocity, defect detection, and testing cost efficiency.

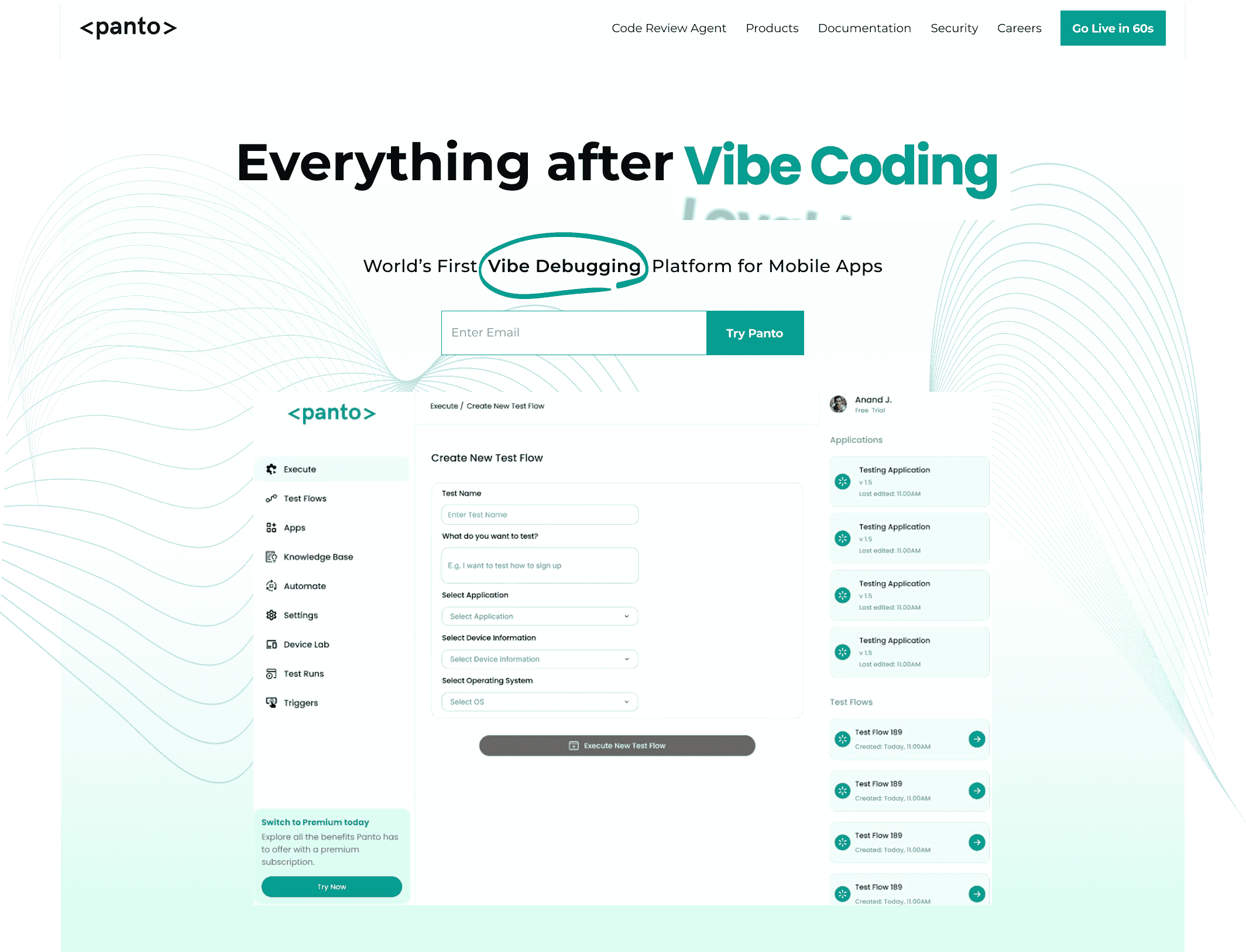

Panto AI: Pioneering the Future with End-to-End AI QA Agents

As quality assurance evolves toward intelligent, autonomous testing, Panto AI emerges at the forefront with the world’s first vibe debugging platform for mobile applications. Combining dynamic code reviews, comprehensive code security, and AI-powered QA automation, Panto AI delivers an integrated solution that addresses the complete testing lifecycle—from pull request analysis to production monitoring.

Panto AI’s end-to-end QA agent represents a quantum leap beyond traditional testing tools. The platform understands requirements in natural language and automatically generates test cases tailored to organizational context. Teams can describe test scenarios conversationally, such as “verify login flow works with social authentication” or “test checkout process with promo codes”, and Panto’s AI agent navigates through the application step by step, executing tests on real devices or emulators.

What distinguishes Panto AI is self-healing automation that adapts when applications change. When the UI is updated, the agent detects the modifications, re-executes the affected test flows, updates the automation scripts, and notifies the team, largely eliminating manual QA and script maintenance. The proprietary framework is language-, OS-, device-, and testing-tool-agnostic, and supports deterministic script generation for Appium, Maestro, and custom formats.
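The idea of deterministic script generation can be illustrated for one of the named targets, Maestro: the same abstract steps should always render to the same flow file. This sketch is not Panto AI's implementation, which is not described here; it is a hypothetical illustration that emits Maestro's `appId` header and its `launchApp`, `tapOn`, `inputText`, and `assertVisible` commands. The app id, the step format, and the `to_maestro_flow` helper are assumptions.

```python
import json
from typing import List, Optional, Tuple

# Maestro commands this sketch knows how to emit (a deliberately small subset).
SUPPORTED = {"launchApp", "tapOn", "inputText", "assertVisible"}


def to_maestro_flow(app_id: str, steps: List[Tuple[str, Optional[str]]]) -> str:
    """Render abstract test steps as a Maestro YAML flow.

    The output depends only on the input, so regenerating a flow from the
    same steps always yields an identical script.
    """
    lines = [f"appId: {app_id}", "---"]
    for command, argument in steps:
        if command not in SUPPORTED:
            raise ValueError(f"unsupported step: {command}")
        if argument is None:
            lines.append(f"- {command}")
        else:
            # json.dumps produces a double-quoted, escaped string that YAML also accepts.
            lines.append(f"- {command}: {json.dumps(argument)}")
    return "\n".join(lines) + "\n"


flow = to_maestro_flow("com.example.shop", [
    ("launchApp", None),
    ("tapOn", "Log in"),
    ("inputText", "user@example.com"),
    ("assertVisible", "Welcome"),
])
print(flow)
```

Determinism matters because a regenerated script can then be diffed against the previous version, so a self-healing update shows up as a small, reviewable change.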

Beyond execution, Panto AI provides comprehensive debugging reports with logs, videos, and screenshots delivered directly to Slack or centralized dashboards. Integration with CI/CD pipelines enables automated test triggering during deployments, while the platform’s knowledge base continuously learns from test executions, making subsequent runs faster and more intelligent. By unifying code review, security scanning, and codeless QA automation under a single AI-driven platform, Panto AI delivers the seamless quality feedback loop modern development teams require to ship confidently at velocity.

Implementing AI-Powered Testing: Strategic Considerations

Successful AI testing adoption requires thoughtful planning and incremental implementation. Organizations should begin by assessing their current QA maturity and identifying the specific pain points where AI delivers the most impact, such as test maintenance overhead, inadequate coverage, or slow defect detection.

Pilot projects provide valuable validation. Start with high-value use cases like regression testing or visual validation where AI benefits manifest quickly and measurably. Select AI-powered tools that integrate seamlessly with existing development workflows, version control systems, and CI/CD pipelines to minimize adoption friction.

Data quality determines AI effectiveness. Ensure comprehensive collection of test results, defect patterns, and application behavior data that machine learning models require for accurate predictions. Establish data validation mechanisms and consistent labeling protocols to maintain training data integrity.
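A data validation mechanism can start as a function that rejects malformed or inconsistently labeled records before they reach a training set. The sketch below assumes test results arrive as dictionaries; the field names, allowed statuses, and defect-label vocabulary are hypothetical and would come from a team's own labeling protocol.

```python
ALLOWED_STATUSES = {"passed", "failed", "skipped"}
ALLOWED_DEFECT_LABELS = {"none", "ui", "logic", "performance", "flaky"}
REQUIRED_FIELDS = ("test_id", "status", "duration_s", "defect_label")


def validate_record(record: dict) -> list:
    """Return a list of problems; an empty list means the record is usable."""
    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if name not in record]
    if problems:
        return problems  # no point checking values of an incomplete record
    if record["status"] not in ALLOWED_STATUSES:
        problems.append(f"unknown status: {record['status']}")
    if record["defect_label"] not in ALLOWED_DEFECT_LABELS:
        problems.append(f"unknown defect label: {record['defect_label']}")
    if not isinstance(record["duration_s"], (int, float)) or record["duration_s"] < 0:
        problems.append("duration_s must be a non-negative number")
    # Consistency rule: a passing test should not carry a defect label.
    if record["status"] == "passed" and record["defect_label"] != "none":
        problems.append("passed test cannot carry a defect label")
    return problems


print(validate_record({"test_id": "T1", "status": "passed", "duration_s": 1.2, "defect_label": "none"}))
print(validate_record({"test_id": "T2", "status": "FAILED", "duration_s": -1, "defect_label": "ui"}))
```

Case-sensitive checks such as rejecting `FAILED` are deliberate: inconsistent spellings of the same label are exactly the kind of noise that degrades a model trained on this data.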

Cultural readiness matters as much as technical capability. AI augments testers rather than replacing them, enabling focus on strategic activities like exploratory testing, test strategy, and business alignment. Invest in upskilling teams on AI tool capabilities, interpreting AI-generated insights, and leveraging predictive analytics for decision-making.

Measure impact continuously through meaningful metrics: defect detection rates, test maintenance hours, test execution velocity, and ultimately, production incident reduction. Track ROI quantitatively to demonstrate value to stakeholders and justify continued investment in intelligent testing infrastructure.
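Tracking ROI quantitatively can start with a simple payback calculation: how many months of net savings it takes to recover the upfront cost. The function name and all figures in the example are hypothetical, not results from the studies cited in this article, and the model deliberately ignores harder-to-price benefits such as fewer production incidents.

```python
def payback_months(upfront_cost, monthly_tool_cost, hours_saved_per_month, hourly_rate):
    """Months until cumulative net savings cover the upfront cost (inf if never)."""
    net_monthly_saving = hours_saved_per_month * hourly_rate - monthly_tool_cost
    if net_monthly_saving <= 0:
        return float("inf")  # the tool costs more per month than it saves
    return upfront_cost / net_monthly_saving


# Hypothetical team: 200 maintenance hours/month cut by 80% -> 160 hours saved.
months = payback_months(upfront_cost=30_000, monthly_tool_cost=2_000,
                        hours_saved_per_month=160, hourly_rate=60)
print(round(months, 1))  # 3.9
```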

The Future of Quality Assurance

AI-powered testing isn’t merely a trend—it represents the inevitable evolution of software quality assurance. As applications grow more complex, release cycles accelerate, and user expectations intensify, manual and traditional automated testing approaches reach fundamental limitations. AI transcends these boundaries through capabilities that learn, adapt, and predict in ways human testers and rule-based automation cannot.

The organizations thriving in 2025 and beyond aren’t those testing harder; they’re those testing smarter through artificial intelligence. Self-healing automation cuts maintenance overhead. AI-driven test generation broadens coverage. Predictive analytics identifies risks before they manifest. Visual AI validates interfaces contextually. Together, these capabilities form a quality assurance approach that scales with modern software development velocity.

The question facing development teams isn’t whether to adopt AI-powered testing, but how quickly they can implement it to remain competitive. With reported ROI timelines of 3-6 months, 80-94% maintenance reductions, and 30% improvements in defect detection, AI testing delivers measurable value quickly. Platforms like Panto AI show that integrated, end-to-end AI QA solutions are available today, ready to transform how teams deliver reliable software at unprecedented speed.

The future of debugging is intelligent, adaptive, and autonomous. Organizations embracing AI-powered testing position themselves not just to survive the accelerating pace of software development, but to lead it. The tools exist. The benefits are proven. The time to act is now.

Panto AI is built on the belief that open source drives global innovation. The platform is completely free for open-source projects, offering unlimited pull request reviews to help developers maintain high-quality, reliable code.

Whether it’s a small personal project or a widely used library, Panto AI helps teams deliver bug-free and production-ready code at no cost.