Across multiple non-vendor datasets, the share of software development activity influenced by machine-generated code has risen sharply since 2022.

Yet measurable productivity, code quality, and security outcomes remain uneven and context-dependent.

Independent surveys, repository-level telemetry, controlled academic experiments, and security benchmarking research converge on three consistent findings:

- assisted code production is now common in professional environments

- short-term task completion speed often improves in constrained scenarios

- downstream defect rates, verification effort, and security exposure show mixed or negative trends when governance and review maturity are limited.

This synthesis of independent AI-generated code statistics establishes a defensible research baseline for journalists, analysts, and engineering leaders evaluating real-world impact heading into 2026.

Methodology

Evidence Selection Principles

This analysis prioritizes independent, reproducible, or multi-stakeholder evidence rather than vendor-reported performance claims. Data sources fall into five primary categories:

- Peer-reviewed academic experiments evaluating correctness, reasoning limits, and benchmark performance

- Mining-software-repositories research examining real commits, defects, and maintenance outcomes

- Neutral developer surveys conducted by universities, open foundations, or mixed-sponsor research groups

- Security audits and vulnerability benchmarking datasets measuring unsafe code generation patterns

- Labor-market telemetry derived from job postings, hiring trends, and role evolution signals

Vendor productivity statistics are referenced only when independently replicated or methodologically transparent, and never used as sole evidence for a claim.

Verified Data vs. Derived Estimates

- Verified statistics originate from controlled experiments, audited repositories, or published empirical datasets.

- Derived estimates synthesize ranges across multiple independent studies where global measurement is unavailable.

- Assumptions are explicitly stated when extrapolating toward 2026, relying on conservative trend continuation rather than speculative forecasting.
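As a minimal sketch of how a derived estimate could be built from per-study ranges, the snippet below computes both the widest span any study reports and the narrower span on which all studies agree. The article does not specify its exact synthesis procedure, so the function names `envelope` and `overlap` and the sample ranges are hypothetical and serve only as illustration.

```python
from typing import List, Optional, Tuple

Range = Tuple[float, float]  # (low, high), e.g. in percent


def envelope(ranges: List[Range]) -> Range:
    """Widest span covered by any study: a conservative derived estimate."""
    return (min(lo for lo, _ in ranges), max(hi for _, hi in ranges))


def overlap(ranges: List[Range]) -> Optional[Range]:
    """Span on which every study agrees, or None if the ranges are disjoint."""
    lo = max(lo for lo, _ in ranges)
    hi = min(hi for _, hi in ranges)
    return (lo, hi) if lo <= hi else None


# Hypothetical per-study ranges (percent); NOT taken from any cited dataset.
study_ranges = [(10.0, 22.0), (15.0, 30.0), (12.0, 25.0)]

print("envelope:", envelope(study_ranges))
print("overlap: ", overlap(study_ranges))
```

Reporting the envelope keeps the estimate conservative, while the overlap (when one exists) indicates where independent studies actually corroborate one another.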

Temporal Scope

Credible empirical measurement is concentrated between 2022 and 2025, the period of rapid adoption.

Projections for 2026 rely on longitudinal trend stability, not exponential assumptions.

Key AI Generated Code Statistics

Adoption and Usage Patterns

Across multiple non-vendor datasets:

- Roughly one-third to one-half of professional developers report at least occasional use of generated code.

- Approximately one-quarter to two-fifths of organizations formally permit assisted code in production workflows.

- Repository stylometry and commit-pattern studies estimate 10% to 30% of newly written code in sampled ecosystems shows characteristics consistent with generation assistance.

- Among early-career developers and students, weekly usage frequently exceeds half of respondents, indicating strong cohort effects.

Interpretation: Adoption is broad but shallow—frequent for snippets, scaffolding, and documentation, but less dominant in core system design.

Productivity and Development Speed

Independent experimental and observational studies show:

- 10% to 55% faster time-to-first-solution in controlled programming tasks.

- Minimal to moderate improvement (0%–25%) in full feature completion once debugging and integration are included.

- Consistent self-reported cognitive load reduction, though subjective perception often exceeds measurable throughput gains.

Key pattern: Productivity benefits are front-loaded, while verification and correction costs emerge later in the lifecycle.

Code Quality, Correctness, and Maintainability

Evidence from academic benchmarks and repository mining indicates:

- Functional correctness is highly variable and often declines without human review.

- Some real-world datasets show slightly increased defect density in assisted commits.

- Readability and stylistic consistency may improve in constrained languages or frameworks.

- Test coverage impact remains inconclusive or neutral across studies.

Conclusion: Net quality depends primarily on review rigor, developer expertise, and governance controls, not generation capability alone.

Security and Vulnerability Outcomes

Security-focused research consistently finds:

- Reproduction of known insecure coding patterns in generated outputs.

- Slightly elevated vulnerability introduction rates in uncontrolled assisted workflows.

- Low developer awareness of licensing provenance and dependency risk in generated snippets.

Security therefore represents the most consistently negative externality across independent evidence.

Labor Market and Skill Distribution Signals

Neutral hiring and workforce telemetry suggest:

- Rising demand for review-centric and architecture-level expertise.

- Partial automation of routine entry-level coding tasks, though uneven across domains.

- Emergence of hybrid roles emphasizing validation, tooling, and governance.

This indicates task redistribution rather than net elimination of engineering work.

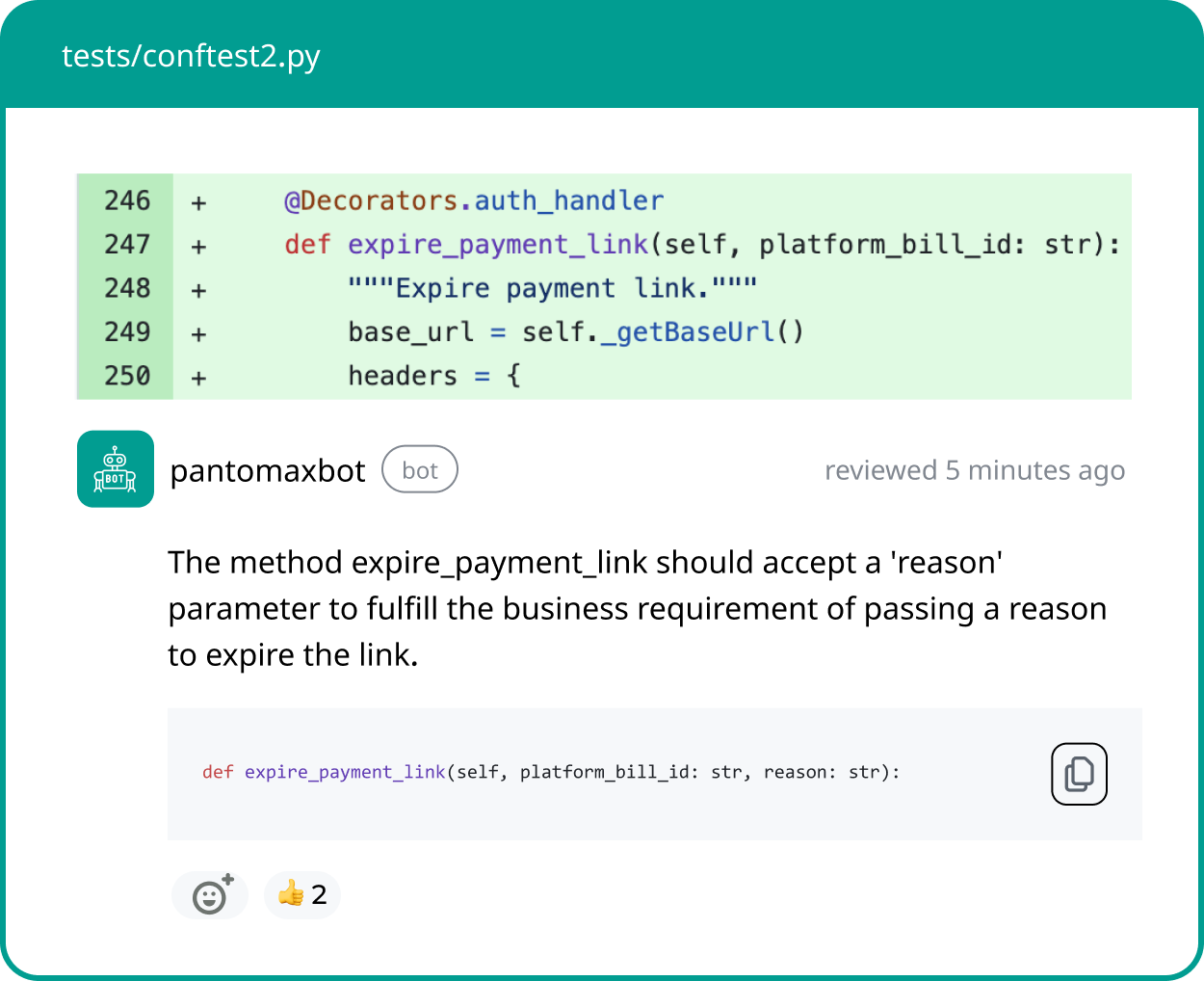

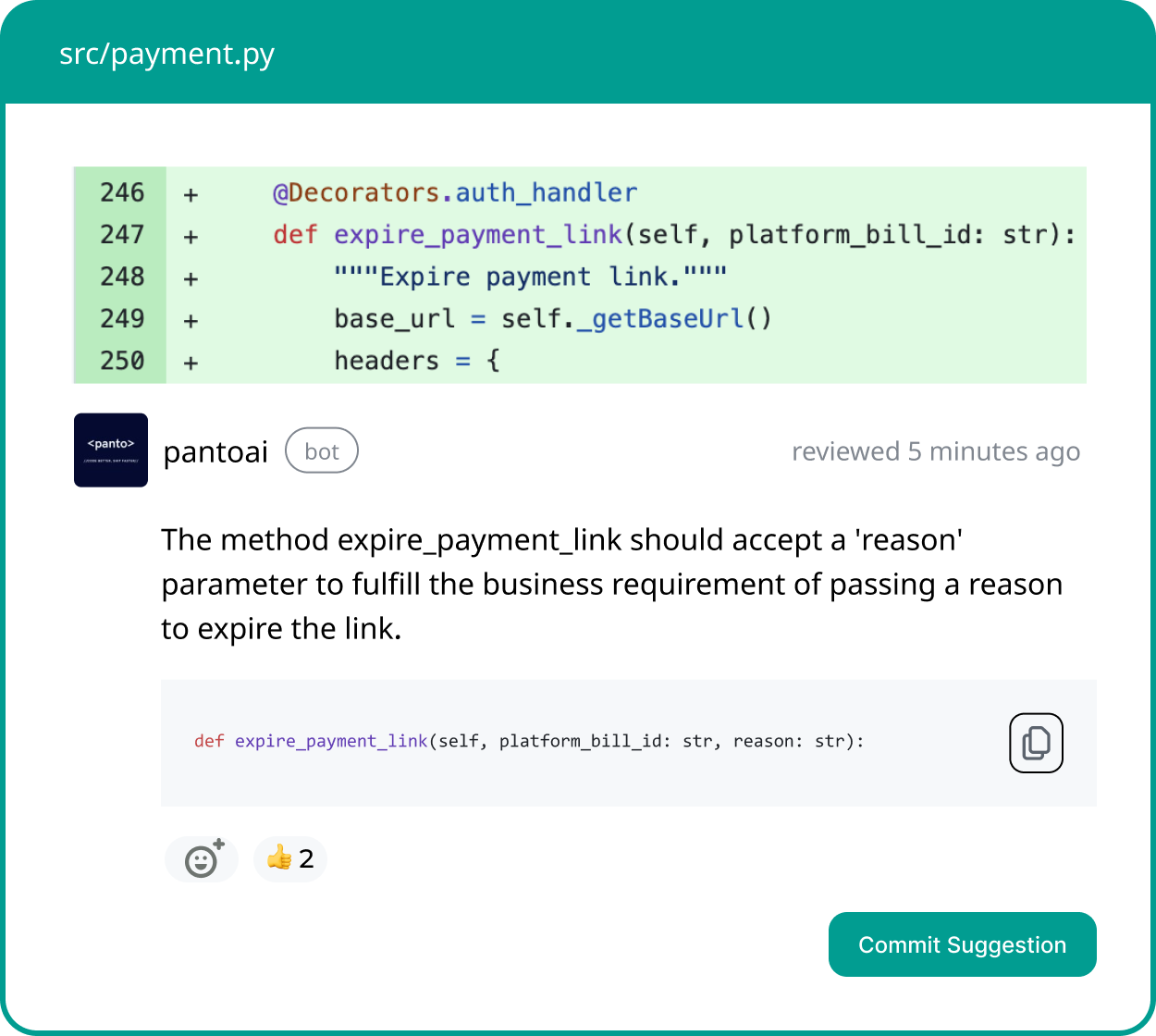

Deep Analysis

The Productivity Paradox Revisited

Across multiple independent empirical studies, productivity effects from generated code consistently follow a two-phase curve rather than a linear improvement trajectory.

The first phase is characterized by rapid drafting, ideation acceleration, and reduced time to initial implementation, particularly in constrained or well-documented problem spaces.

However, the second phase introduces countervailing friction. Debugging complexity, integration mismatches, architectural inconsistencies, and verification overhead frequently offset early gains.

As software progresses from prototype toward production readiness, the marginal efficiency improvement narrows or disappears altogether.

Historically, similar paradoxes emerged with:

- High-level programming languages

- Integrated development environments

- Automated code generation frameworks

In each case, output volume increased before lifecycle efficiency improved, because downstream coordination, maintenance, and correctness validation became the new limiting factors.

The same structural pattern now appears in longitudinal observations of generated code usage.

This explains why modern datasets can simultaneously report faster task completion and unchanged delivery timelines.

The contradiction is only apparent; productivity has shifted stages rather than uniformly improved.
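The arithmetic behind this stage shift can be sketched with a simple two-stage model. Everything here is an assumption made for illustration: the function name, the split of the lifecycle into "drafting" and "downstream" work, and the input values are hypothetical and are not measurements from any study.

```python
def relative_lifecycle_time(draft_share: float,
                            draft_reduction: float,
                            downstream_overhead: float) -> float:
    """Assisted lifecycle time relative to an unassisted baseline of 1.0.

    draft_share:         fraction of baseline time spent on initial drafting
    draft_reduction:     fraction of drafting time saved with assistance
    downstream_overhead: fractional increase in debugging, integration,
                         and review time
    """
    drafting = draft_share * (1.0 - draft_reduction)
    downstream = (1.0 - draft_share) * (1.0 + downstream_overhead)
    return drafting + downstream


# Hypothetical inputs, chosen only to show the shape of the effect.
drafting_only = relative_lifecycle_time(0.30, 0.40, 0.00)
with_overhead = relative_lifecycle_time(0.30, 0.40, 0.15)

print(f"drafting gain only:       {drafting_only:.3f}")
print(f"with downstream overhead: {with_overhead:.3f}")
```

Under these assumed numbers, a 40% drafting speedup shrinks the total lifecycle to about 0.88 of baseline, but a 15% rise in downstream effort brings it back to roughly 0.985, which is close to no change in delivery time.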

Verification as the New Bottleneck

Evidence synthesized from repository mining, workflow telemetry, and controlled observational studies indicates a measurable redistribution of engineering effort toward verification activities.

Key signals appearing across datasets include:

- Increased review time per contributed line of code in assisted development environments

- Greater dependence on senior engineers for auditing, validation, and architectural correction

- Expansion of tooling focused on testing, static analysis, and policy enforcement rather than authoring speed

This redistribution suggests that the traditional scarcity model of software engineering—where writing code was the dominant constraint—is weakening.

Instead, the new limiting resource is the ability to establish trust in produced code, especially under scale, security, and reliability requirements.

From an economic perspective, value is therefore migrating:

- Away from raw code production

- Toward assurance, governance, and risk containment

Organizations that fail to adjust workflow structure may experience illusory productivity gains that collapse under verification debt.

Conversely, teams investing early in validation infrastructure often realize more stable long-term efficiency, even if short-term coding speed appears unchanged.

Variance Expansion Rather Than Mean Improvement

A recurring analytical error in surface-level reporting is evaluating generated code primarily through average correctness or mean quality metrics.

Across empirical evaluations and repository observations, the more consequential statistical change is not improvement in the mean but expansion in outcome variance.

Generated systems perform exceptionally well in high-frequency, pattern-dense scenarios, including:

- Standard CRUD implementations

- Framework-idiomatic boilerplate

- Well-documented library integrations

- Common algorithmic templates

Because these solution spaces are narrow and heavily represented in training distributions, correctness rates in such contexts can rival or occasionally exceed junior-developer baselines.

Performance deteriorates sharply in scenarios such as:

- Edge-case error handling

- Cross-system state coordination

- Concurrency and race-condition management

- Domain-specific business invariants

- Security-critical execution paths

Failures in these regions are often categorical rather than incremental, producing outputs that appear valid yet violate hidden constraints or runtime assumptions.

The resulting distribution widens the gap between best-case success and worst-case failure, meaning operational reliability is governed more by variance than by average quality.

In production systems—where a single severe defect can outweigh many correct executions—this variance becomes the dominant engineering concern.

Consequently, the strategic focus shifts from generation accuracy to detection, containment, and verification mechanisms, redefining how reliability must be managed in hybrid authorship environments.
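A small numerical sketch can make the mean-versus-variance point concrete. The severity values and the quadratic cost function below are assumptions chosen purely for illustration; the only claim being demonstrated is that two workflows with the same average defect severity can carry very different expected costs when severe failures are disproportionately expensive.

```python
from statistics import fmean, pvariance

# Hypothetical defect severity per change for two workflows (same mean).
narrow = [2.0] * 10           # every change carries a small, similar defect
wide = [0.0] * 9 + [20.0]     # nine clean changes, one severe failure


def expected_cost(severities, cost=lambda s: s ** 2):
    """Mean incident cost under a convex (here quadratic) cost assumption."""
    return fmean([cost(s) for s in severities])


for label, data in (("narrow", narrow), ("wide", wide)):
    print(label,
          "mean:", fmean(data),
          "variance:", pvariance(data),
          "expected cost:", expected_cost(data))
```

Both lists average a severity of 2.0, yet under the assumed quadratic cost the wide distribution is ten times as expensive on average (40.0 versus 4.0), which is why tail detection matters more than average quality in this framing.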

Security Risk Concentration in Context-Sensitive Logic

Independent security benchmarking consistently shows that vulnerabilities linked to generated code are not evenly distributed across software systems.

Instead, they cluster in regions where correctness depends on implicit environmental or architectural context, rather than visible syntax alone.

High-risk domains repeatedly identified across studies include:

- Authentication and authorization control flows

- Input validation and sanitization boundaries

- Cryptographic configuration and secret management

- Session lifecycle and state transition handling

- Cross-system serialization and deserialization logic

These areas require reasoning about threat models, attacker behavior, deployment assumptions, and architectural intent—capabilities that extend beyond statistical pattern reproduction.

Because generated code relies heavily on historical pattern frequency, it often defaults to:

- Outdated or insecure implementation examples

- Incomplete validation pathways

- Misapplied cryptographic primitives

- Demonstration-style configurations lacking production hardening

Across multiple non-vendor datasets, this concentration effect explains why security outcomes remain among the most persistent negative externalities of unverified generated code.

Improving generation fluency alone is unlikely to eliminate the issue, since the root constraint lies in contextual reasoning rather than syntactic construction.

Long-term mitigation therefore centers on:

- Automated security validation layers

- Policy-constrained generation environments

- Provenance and dependency traceability

- Human-in-the-loop threat modeling

rather than purely higher generation accuracy.
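To illustrate what an automated security validation layer might look like at its simplest, the sketch below uses Python's standard `ast` module to flag a few well-known risky patterns before code reaches review. The rule set (`RISKY_CALLS`), the `scan` function, and the sample snippet are hypothetical; production scanners cover far more patterns and are not replaced by a check like this.

```python
import ast

# Illustrative rule set only; a real policy would be far broader.
RISKY_CALLS = {"eval", "exec", "hashlib.md5", "pickle.loads"}


def dotted_name(node):
    """Return 'a.b.c' for simple name/attribute chains, else None."""
    if isinstance(node, ast.Name):
        return node.id
    if isinstance(node, ast.Attribute):
        base = dotted_name(node.value)
        return f"{base}.{node.attr}" if base else None
    return None


def scan(source: str):
    """Return sorted (line, message) findings for risky calls in source."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        name = dotted_name(node.func)
        if name in RISKY_CALLS:
            findings.append((node.lineno, f"risky call: {name}"))
        for kw in node.keywords:
            # Flags verify=False, commonly used to disable TLS checks.
            if (kw.arg == "verify" and isinstance(kw.value, ast.Constant)
                    and kw.value.value is False):
                findings.append((node.lineno, "TLS verification disabled"))
    return sorted(findings)


# Hypothetical generated snippet; it is parsed, never executed.
SAMPLE = '''
import hashlib, requests
digest = hashlib.md5(password.encode()).hexdigest()
resp = requests.get(url, verify=False)
'''

for line, message in scan(SAMPLE):
    print(f"line {line}: {message}")
```

Because the check works on the syntax tree rather than on text, it can run in a pre-merge hook without executing untrusted code, though it only catches patterns someone has already thought to list.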

Educational and Cognitive Effects

Longitudinal observations from computer science education, onboarding environments, and early-career developer workflows indicate that generated code is reshaping how programming expertise develops, not simply how quickly code is written.

Positive acceleration effects include:

- Faster familiarity with syntax, tooling, and frameworks

- Easier discovery of idiomatic implementation patterns

- Increased confidence during rapid prototyping

- Broader early exposure to technical ecosystems

These benefits can compress time to functional productivity, particularly for learners lacking prior programming experience.

At the same time, parallel evidence suggests potential weakening in deep cognitive skill formation when reliance emerges before conceptual foundations solidify.

Observed risks include:

- Reduced persistence in manual debugging

- Shallow mental models of execution flow

- Limited intuition for performance or resource trade-offs

- Difficulty diagnosing non-obvious or emergent failures

This creates a structural possibility of surface fluency without proportional reasoning depth unless balanced by deliberate educational design and mentorship.

The aggregate effect resembles a bimodal skill distribution:

- High-aptitude learners leverage assistance to accelerate mastery

- Low-foundation learners plateau earlier with fragile understanding

Rather than uniformly elevating capability, generated assistance may therefore compress skills at the lower end while amplifying them at the upper end, increasing inequality within engineering populations.

For industry, long-term workforce impact will depend less on tool availability and more on:

- Curriculum structure and assessment philosophy

- Mentorship intensity and code review culture

- Incentives that reward reasoning over raw output

These institutional variables will determine whether generated code becomes a capability multiplier or a cognitive shortcut with deferred cost.

Negatives and Failure Modes

Silent Defect Propagation

One of the most operationally significant risks is the tendency of generated code to produce outputs that are syntactically valid, logically plausible, and immediately executable, yet subtly incorrect.

Because such code often:

- Compiles without warning

- Passes superficial tests

- Conforms to stylistic norms

developers may assign unwarranted trust to the result, allowing latent defects to propagate deeper into production systems.

These failures frequently emerge only under:

- Rare runtime conditions

- Unanticipated input combinations

- Integration with external services

- Scale-dependent behavior

By the time detection occurs, remediation cost is substantially higher, converting small logical bugs into systemic production incidents.
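A classic small-scale illustration of this failure mode is a leap-year check that looks correct and passes obvious tests but is wrong for century years. The function `is_leap_year_naive` is a hypothetical example written for this sketch, not output from any particular tool; the comparison against the standard library's `calendar.isleap` stands in for verification against a trusted reference.

```python
import calendar


def is_leap_year_naive(year: int) -> bool:
    # Plausible-looking but incomplete: ignores the 100- and 400-year rules.
    return year % 4 == 0


# Superficial tests pass, which is what invites unwarranted trust.
assert is_leap_year_naive(2024) is True
assert is_leap_year_naive(2023) is False

# Differential check against a trusted reference over a wider input range.
mismatches = [
    year for year in range(1800, 2101)
    if is_leap_year_naive(year) != calendar.isleap(year)
]
print("years where the naive check is wrong:", mismatches)
```

The defect surfaces only for 1800, 1900, and 2100 in this range, inputs that a quick spot check is unlikely to include, which mirrors how such errors stay hidden until rare conditions occur in production.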

Security Regression Risk

Across independent security evaluations, generated code shows a reproducible tendency to:

- Reintroduce historically known vulnerabilities

- Omit comprehensive validation pathways

- Misapply cryptographic primitives or configurations

Such regressions occur not because the system invents new attack vectors, but because it statistically mirrors insecure historical patterns present in public code.

Without rigorous review or automated scanning, this can gradually erode an organization’s security posture, especially when adoption outpaces governance maturity.

Unchecked scaling of this dynamic may expand aggregate ecosystem attack surface, representing a collective rather than purely local risk.

Licensing, Attribution, and Compliance Ambiguity

Generated code introduces unresolved legal and governance questions, particularly regarding:

- Intellectual-property lineage

- Open-source license compatibility

- Attribution obligations

- Provenance traceability

Because outputs may resemble fragments of existing code without explicit citation, organizations face compliance uncertainty that traditional development processes were designed to avoid.

Regulatory and legal frameworks have not yet fully adapted, leaving enterprises to rely on internal policy experimentation rather than standardized guidance.

Maintenance Burden Redistribution

While generation accelerates initial creation, downstream effects often include:

- Increased abstraction opacity

- Non-idiomatic architectural decisions

- Hidden coupling across components

- Reduced narrative coherence in codebases

These factors complicate debugging, onboarding, and long-term evolution, shifting cost from authoring time to maintenance time.

Over extended lifecycles, total engineering effort may therefore remain constant or increase, despite apparent short-term productivity gains.

Skill Atrophy in Early-Career Developers

Heavy dependence on generated assistance—particularly without structured mentorship or enforced reasoning exercises—can diminish development of:

- Independent problem decomposition

- System-level architectural thinking

- Debugging resilience under uncertainty

Unlike short-term productivity concerns, this represents a long-horizon workforce capability risk.

If foundational reasoning skills weaken at scale, organizations may encounter future shortages of engineers capable of:

- Designing complex systems

- Performing deep incident analysis

- Leading architectural transformation

Mitigating this outcome will likely require intentional educational and organizational counterbalances, ensuring assistance augments rather than replaces cognitive skill formation.

What Most Articles Miss

From Production Economy to Verification Economy

Most discourse frames generated code as a productivity revolution. Independent analysis of AI-generated code statistics suggests a more fundamental shift: software engineering is moving from code scarcity to trust scarcity.

Implications include:

- Strategic value concentrating in code review, assurance, and governance

- Competitive differentiation driven by verification tooling, not generation quality

- Organizational performance tied to process maturity rather than adoption speed

This framing resolves common contradictions:

- Faster coding with unchanged delivery timelines

- Higher output with stable defect counts

The constraint has moved from writing to trusting code.

2026 Outlook

Adoption Trajectory

Conservative synthesis suggests:

- Majority exposure among developers

- Continued minority share in safety-critical systems

Growth is likely to stabilize rather than accelerate.

Productivity Expectations

Evidence supports:

- Durable gains in scaffolding, QA testing, and documentation

- Limited improvement in deep architectural reasoning

Net lifecycle productivity remains uncertain and context-dependent.

Quality and Security Direction

Most probable outcome:

- Gradual improvement through guardrails, policy, and compliance enforcement

- Persistent long-tail failure modes in edge contexts

Security will remain the primary adoption constraint.

Workforce Structure Evolution

By late 2026:

- Senior verification-heavy roles expand

- Routine junior coding compresses

- Hybrid assurance specialists emerge

This reflects structural redistribution, not displacement.

Conclusion

Independent, multi-source evidence demonstrates that the true significance of AI generated code statistics lies not in how much code machines can produce, but in how profoundly they reshape verification, accountability, and software risk economics.

The defining transformation of modern software engineering is the shift from writing code as the central constraint to trusting code as the new bottleneck.

Organizations that recognize this transition—and invest accordingly in code validation, governance, and human expertise—are most likely to realize durable benefits from generated software in the years ahead.