AI coding assistants are now mainstream developer tools. By 2025, a majority of professional developers use AI in their daily work, and controlled experiments show large task-level speedups. Yet organizational results are inconsistent.

Many teams report faster coding but little improvement in delivery velocity or business outcomes.

This article synthesizes independent surveys, controlled experiments, enterprise analytics, and security research to answer a core question:

Does AI coding actually improve productivity at the organizational level, or does it only make individual developers feel faster?

Definition: AI Coding Productivity

AI coding productivity refers to the measurable impact of AI-assisted development tools on software delivery.

It includes task completion speed, code quality, review effort, security outcomes, and end-to-end delivery metrics such as lead time and change failure rate.

Critically, productivity is not synonymous with typing speed or lines of code. True productivity is the rate at which high-quality software creates business value.

Methods and Evidence Base

This analysis weights independent and audited sources over vendor claims. Evidence includes:

- Large developer surveys measuring adoption and sentiment

- Controlled experiments and randomized trials

- Engineering analytics from instrumented enterprise teams

- Independent security research on AI-generated code

Where direct measurement is unavailable, conservative triangulation is used and explicitly labeled.

Adoption in 2025: AI Is No Longer Optional

AI coding tools moved from novelty to default.

- Approximately 84 percent of developers report using or planning to use AI tools.

- 51 percent of professional developers use them daily.

- Adoption is highest for frontend, scripting, and test generation tasks.

Usage intensity varies, but the direction is clear: AI assistance is now part of the standard developer workflow.

Key takeaway: Adoption is no longer the differentiator. Outcomes are.

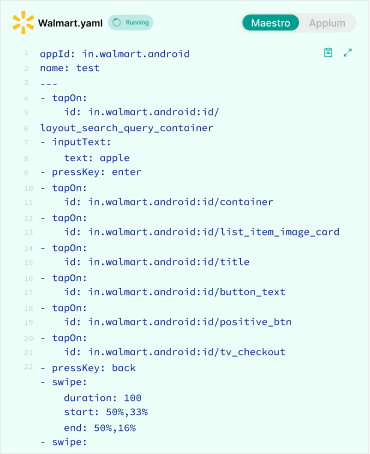

What Controlled Experiments Actually Prove

Controlled lab experiments provide the cleanest causal evidence.

One widely cited experiment found developers completed a representative coding task 55 percent faster with AI assistance. Similar experiments replicate large speedups for scoped tasks.

Important constraints:

- Tasks are short and well defined

- Integration, review, and deployment are excluded

- Results measure task speed, not system throughput

Conclusion: AI reliably reduces low-level friction such as syntax recall, boilerplate generation, and API lookup.

Field Studies: Why Organizational Gains Are Inconsistent

When AI tools are deployed across real teams, results diverge.

Enterprise analytics and randomized trials show:

- Some teams improve throughput

- Many teams see negligible change

- A subset experiences quality regressions that offset speed gains

Why This Happens

- Measurement mismatch: teams track perceived speed instead of delivery metrics.

- Bottleneck migration: faster coding shifts load to reviews, QA testing, and integration.

- Rework costs: AI-generated code can introduce subtle defects that increase downstream work.
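The bottleneck effect can be made concrete with a small Amdahl's-law style calculation. The sketch below is illustrative only: the function name, the 30 percent coding share, and the reading of "55 percent faster" as 55 percent less coding time are assumptions, not figures from the studies discussed here.

```python
def end_to_end_speedup(coding_share: float, coding_time_reduction: float) -> float:
    """Overall lead-time speedup when only the coding stage gets faster.

    coding_share: fraction of total lead time spent writing code (0 to 1).
    coding_time_reduction: fraction of coding time removed by AI (0 up to, not including, 1).
    """
    if not 0.0 <= coding_share <= 1.0:
        raise ValueError("coding_share must be between 0 and 1")
    if not 0.0 <= coding_time_reduction < 1.0:
        raise ValueError("coding_time_reduction must be in [0, 1)")
    # Review, QA, and integration time is unchanged; only the coding share shrinks.
    remaining = (1.0 - coding_share) + coding_share * (1.0 - coding_time_reduction)
    return 1.0 / remaining


# Hypothetical team: coding is 30% of lead time, AI removes 55% of coding time.
speedup = end_to_end_speedup(coding_share=0.30, coding_time_reduction=0.55)
print(f"end-to-end speedup: {speedup:.2f}x")
```

Under these assumed numbers, a large task-level gain shrinks to a modest end-to-end gain, because the unchanged stages dominate total lead time. Any added review or rework time would reduce it further.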

Security and Quality Tradeoffs

Independent security research highlights real risks:

- AI suggestions may reproduce insecure patterns

- Validation logic and error handling are often incomplete

- Code is committed faster than security review capacity grows

Net effect: verification burden increases until tooling and governance mature.

Key takeaway: AI accelerates both value creation and risk creation.

Perception vs Reality: The Productivity Paradox

Surveys show developers feel more productive and satisfied when using AI tools. However, organizational metrics often lag.

This perception gap matters:

- Leaders may overinvest based on sentiment

- Teams may optimize for speed instead of outcomes

Without instrumentation, perceived productivity becomes a misleading signal.

The Metrics That Actually Determine AI Coding ROI

Stop using raw output metrics such as lines of code. Use a balanced set of measures instead.

| Metric Category | Recommended Measures | Why It Matters |
| --- | --- | --- |
| Flow | Lead time, deployment frequency | Captures delivery speed |
| Quality | Post-release defects, security findings | Measures hidden cost |
| Review | PR size, review time | Shows reviewer load |
| Experience | Time in flow, blocker resolution | Indicates sustainability |
| Business | Time to market, revenue impact | Ties work to value |

Key takeaway: AI improves productivity only when these metrics move together.
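As a minimal sketch of how the flow and quality measures might be computed, the Python below derives median lead time, deployment frequency, and change failure rate from a list of deployment records. The `Deployment` record, its field names, and the `delivery_metrics` function are hypothetical; in practice the data would come from a team's own CI/CD and incident tooling.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass(frozen=True)
class Deployment:
    committed_at: datetime  # first commit of the change (assumed field)
    deployed_at: datetime   # when the change reached production (assumed field)
    caused_failure: bool    # True if it needed a rollback or hotfix (assumed field)


def delivery_metrics(deployments: list[Deployment], period_days: int) -> dict[str, float]:
    """Summarize flow and quality for one observation period."""
    if not deployments:
        raise ValueError("need at least one deployment")
    if period_days <= 0:
        raise ValueError("period_days must be positive")
    lead_hours = [
        (d.deployed_at - d.committed_at).total_seconds() / 3600 for d in deployments
    ]
    failures = sum(d.caused_failure for d in deployments)
    return {
        "median_lead_time_hours": median(lead_hours),
        "deployments_per_week": len(deployments) / (period_days / 7),
        "change_failure_rate": failures / len(deployments),
    }


# Illustrative data only: three deployments in one week, one of which failed.
start = datetime(2025, 1, 6)
sample = [
    Deployment(start, start + timedelta(hours=24), caused_failure=False),
    Deployment(start, start + timedelta(hours=48), caused_failure=True),
    Deployment(start, start + timedelta(hours=72), caused_failure=False),
]
print(delivery_metrics(sample, period_days=7))
```

The median is used for lead time so that a single slow change does not dominate the summary. That is a design choice for this sketch, not a requirement.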

Comparison: Individual Speed vs Organizational Throughput

| Dimension | Individual Productivity | Organizational Productivity |
| --- | --- | --- |
| Primary driver | Coding speed | Flow efficiency |
| AI impact | High | Conditional |
| Failure mode | Overconfidence | Bottleneck amplification |
| Success factor | Task assistance | Process redesign |

Governance Practices That Capture Net Value

Organizations that realize gains implement controls:

- Automated testing gates with higher assertion coverage

- Security and secret scans tuned for AI failure patterns

- PR size caps and paired reviews for AI-heavy changes

- Prompt templates favoring minimal, secure outputs

- Training on AI failure modes

- Incentives aligned to code quality, not output volume
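One of these controls, the PR size cap with paired review for AI-heavy changes, could be enforced by a simple pre-merge check. The sketch below is a hypothetical policy: the `PullRequest` fields, the 400-line cap, and the two-reviewer rule are assumptions chosen for illustration, and the `ai_assisted` flag is assumed to be set by the author or by tooling.

```python
from dataclasses import dataclass

MAX_LINES_CHANGED = 400        # assumed cap; tune per team
MIN_REVIEWERS_AI_ASSISTED = 2  # assumed "paired review" threshold


@dataclass(frozen=True)
class PullRequest:
    lines_changed: int
    ai_assisted: bool  # hypothetical label supplied by the author or tooling
    reviewers: int


def review_gate(pr: PullRequest) -> list[str]:
    """Return a list of policy violations; an empty list means the PR may proceed."""
    problems: list[str] = []
    if pr.lines_changed > MAX_LINES_CHANGED:
        problems.append(
            f"PR changes {pr.lines_changed} lines; cap is {MAX_LINES_CHANGED}"
        )
    if pr.ai_assisted and pr.reviewers < MIN_REVIEWERS_AI_ASSISTED:
        problems.append(
            f"AI-assisted PR has {pr.reviewers} reviewer(s); "
            f"{MIN_REVIEWERS_AI_ASSISTED} required"
        )
    return problems


# Illustrative usage: a large AI-assisted change with a single reviewer.
for problem in review_gate(PullRequest(lines_changed=900, ai_assisted=True, reviewers=1)):
    print(problem)
```

Returning every violation, rather than failing on the first, lets the author fix the PR in one pass.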

Role-Specific Effects

- Junior developers gain the most speed but require oversight

- Mid-level developers benefit in integration and debugging

- Senior engineers gain indirectly through leverage and review efficiency

This shift changes role expectations and hiring signals.

Forecast to 2026

Evidence-based expectations:

- Tool quality improves, reducing verification overhead

- Measurement maturity becomes the differentiator

- Organizations reward judgment and systems thinking over raw output

Risk remains for teams that adopt tools without governance.

Key Takeaways

- AI coding tools increase individual speed reliably

- Organizational gains require process and metric changes

- Security and code quality costs are real and measurable

- Measurement, not adoption, determines ROI

Conclusion

AI coding assistants are now standard tools. Controlled experiments prove task-level speedups. Field evidence shows that without changes in measurement and governance, those gains rarely translate into business outcomes.

Organizations that instrument delivery metrics, strengthen review and security practices, and retrain teams are best positioned to convert AI-driven speed into durable productivity in 2026.

Suggested Next Step

Before expanding AI usage, baseline delivery and quality metrics for one quarter, then pilot AI tools with explicit governance and review constraints.
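A baseline-versus-pilot comparison along these lines could be sketched as follows. The metric names, the 5 percent tolerance, and the success rule (at least one improvement and no regressions) are assumptions made for illustration, not a prescribed method.

```python
# Metrics where a lower value is the desired direction (assumed names).
LOWER_IS_BETTER = {"median_lead_time_hours", "change_failure_rate", "median_review_hours"}


def compare_to_baseline(
    baseline: dict[str, float], pilot: dict[str, float], tolerance: float = 0.05
) -> dict[str, str]:
    """Label each baseline metric as 'improved', 'flat', or 'regressed' in the pilot."""
    verdicts: dict[str, str] = {}
    for name, base in baseline.items():
        if name not in pilot:
            raise KeyError(f"pilot is missing metric {name!r}")
        if base == 0:
            raise ValueError(f"baseline for {name!r} is zero; relative change is undefined")
        change = (pilot[name] - base) / base
        if name in LOWER_IS_BETTER:
            change = -change  # flip the sign so positive always means better
        if change > tolerance:
            verdicts[name] = "improved"
        elif change < -tolerance:
            verdicts[name] = "regressed"
        else:
            verdicts[name] = "flat"
    return verdicts


def pilot_succeeded(verdicts: dict[str, str]) -> bool:
    """Assumed rule: something improved and nothing regressed."""
    values = list(verdicts.values())
    return "improved" in values and "regressed" not in values


# Illustrative numbers only: faster delivery, but a higher change failure rate.
baseline = {"median_lead_time_hours": 50.0, "deployments_per_week": 4.0, "change_failure_rate": 0.10}
pilot = {"median_lead_time_hours": 40.0, "deployments_per_week": 4.1, "change_failure_rate": 0.14}
verdicts = compare_to_baseline(baseline, pilot)
print(verdicts, pilot_succeeded(verdicts))
```

Requiring that no metric regresses means a speed gain bought with a quality loss does not count as a successful pilot.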