AI coding assistants have moved decisively from experimentation to standard practice. By 2026, they are no longer “emerging tools” but core components of modern software development workflows.

For engineering leaders, CTOs, and senior developers evaluating AI-assisted development, raw adoption numbers are not enough. What matters is how these tools affect productivity, code review and quality, trust, and enterprise outcomes at scale.

This article consolidates the most reliable AI coding assistant statistics from global developer surveys, enterprise adoption data, and productivity studies to answer three critical questions:

- How widely are AI coding assistants used today?

- What measurable productivity and delivery gains do they provide?

- Where do trust, quality, and code governance challenges still limit ROI?

What Is an AI Coding Assistant?

An AI coding assistant is a developer tool that uses large language models (LLMs) to generate, autocomplete, refactor, or explain code inside an IDE or code editor.

Examples include GitHub Copilot, Amazon CodeWhisperer (now part of Amazon Q Developer), Tabnine, Replit AI, and similar tools integrated into VS Code, JetBrains IDEs, and cloud development environments.

These tools are designed to:

- Accelerate code authoring

- Reduce repetitive work

- Support learning and onboarding

- Assist with debugging and documentation

Key AI Coding Assistant Statistics (2026 Snapshot)

At a glance:

- 80–85% of developers now use AI coding assistants regularly

- 78% report productivity improvements

- Developers save ~3.6 hours per week on average

- 90% of Fortune 100 companies use AI coding tools

- Only ~33% fully trust AI-generated code

- AI-generated code shows 1.7× more defects without proper code review

These numbers reveal a clear pattern: adoption and productivity gains are strong, but trust and quality remain the gating factors for enterprise value.

Developer Adoption and Usage

AI Coding Assistants Are Now Mainstream

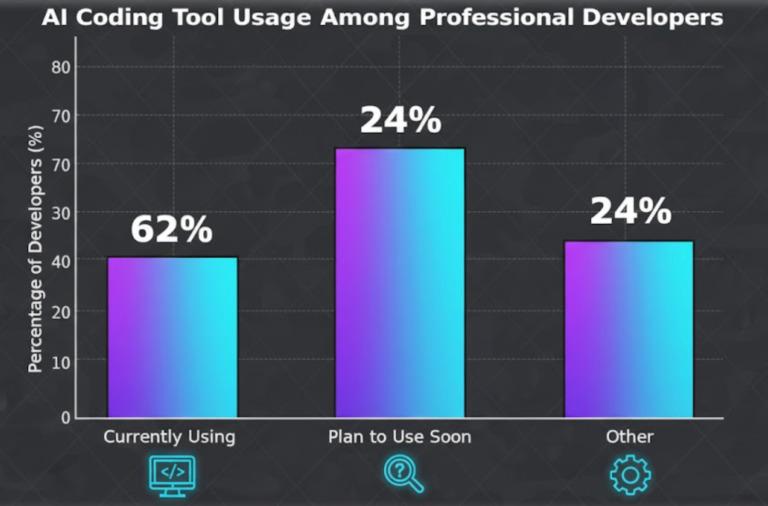

Global developer surveys consistently show that AI coding assistants are no longer optional tools:

- JetBrains (2025):

- Stack Overflow Developer Survey (2025):

  - ~84% of respondents use or plan to use AI tools

  - Among professional developers, ~51% use AI tools daily

Only about 15% of developers worldwide have not adopted any AI coding assistant. These surveys each cover tens of thousands of developers in 177 to 194 countries, so the adoption figures reflect usage worldwide rather than in a handful of markets.

Multi-Tool Usage Is Common

AI coding is not dominated by a single tool:

- 59% of developers use three or more AI coding tools weekly

- Commonly combined tools include Copilot, CodeWhisperer, Tabnine, Replit, and IDE-native assistants

Younger developers and full-stack engineers show the highest adoption rates, often using AI for:

- Learning unfamiliar languages or frameworks

- Rapid prototyping

- Boilerplate generation

Productivity Impact and Developer Satisfaction

Measured Productivity Gains

AI coding assistants deliver consistent, quantifiable efficiency improvements:

- 78% of developers say AI tools improve productivity

- Average time saved: ~3.6 hours per developer per week

  - Daily users: ~4.1 hours saved per week

  - Weekly users: ~3.5 hours saved per week
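For capacity planning, it can help to express these savings as a share of working time. The Python sketch below does that arithmetic under one assumption that is not part of the survey data: a 40-hour working week (the `ASSUMED_WORK_WEEK_HOURS` constant). On that basis, the ~3.6-hour average is about 9% of a week, and the daily-user figure is a little over 10%.

```python
# Assumption (not from the survey): a standard 40-hour working week.
ASSUMED_WORK_WEEK_HOURS = 40

# Self-reported weekly time savings quoted above.
hours_saved_per_week = {
    "average": 3.6,
    "daily users": 4.1,
    "weekly users": 3.5,
}

# Express each saving as a fraction of the assumed working week.
share_of_week = {
    group: hours / ASSUMED_WORK_WEEK_HOURS
    for group, hours in hours_saved_per_week.items()
}

for group, share in share_of_week.items():
    print(f"{group}: {share:.1%} of a {ASSUMED_WORK_WEEK_HOURS}-hour week")
```

Because the inputs are self-reported, the result is an estimate of freed time, not a measured change in delivered output.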

Analytics-based studies reinforce self-reported gains. DX Insight data from 51,000+ developers shows:

- Daily AI users merge ~60% more pull requests than light users, reducing merge time significantly

- Median output:

  - Daily AI users: ~2.3 PRs/week

  - Light users: ~1.4–1.8 PRs/week
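How large the “~60% more” gap looks depends on which light-user median it is measured against. A quick Python check of the medians quoted above (the helper name `percent_more` is illustrative) shows roughly 64% more PRs against 1.4 PRs/week but only about 28% more against 1.8 PRs/week, so the headline figure is consistent with the low end of the light-user range.

```python
# Median merged PRs per week, as quoted from the DX Insight data above.
DAILY_AI_USERS = 2.3
LIGHT_USERS_RANGE = (1.4, 1.8)


def percent_more(a: float, b: float) -> float:
    """Return how much larger a is than b, as a fraction of b."""
    return a / b - 1


uplifts = [percent_more(DAILY_AI_USERS, light) for light in LIGHT_USERS_RANGE]

for light, uplift in zip(LIGHT_USERS_RANGE, uplifts):
    print(f"vs {light} PRs/week: {uplift:.0%} more")
```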

Speed vs. Delivery Reality

While individual developers work faster, organizational gains depend on process maturity. Teams see the largest benefits when AI usage is paired with:

- AI-assisted code review

- Automated testing

- Clear governance for AI-generated code

Without these adaptations, faster code creation can simply shift bottlenecks downstream.

Developer Experience

Beyond throughput:

- 57% of developers say AI makes their work more enjoyable

- Only ~20% report increased burnout

This suggests that, when used responsibly, AI assistants more often reduce cognitive load than add to it.

Trust and Code Quality Trade-Offs

The Trust Gap Remains Significant

Despite productivity gains, developer trust in AI-generated code remains limited:

- Only ~33% of developers fully trust AI outputs

- 46–76% express partial or full mistrust, depending on how the question is asked

- In Stack Overflow’s survey:

  - 46% actively distrust AI results

  - 76% say they do not fully trust AI-generated code

This skepticism directly influences how teams integrate AI into production workflows.

Code Quality and Security Concerns

Empirical studies validate these concerns:

- Compared with human-written code, AI-generated code contains:

  - ~1.7× more defects overall

  - Up to 2.7× more security vulnerabilities

- 45% of developers report that debugging AI-generated code takes longer than writing the code themselves

- 66% struggle with AI outputs that are “almost correct” but flawed

As a result, most teams require manual review of AI-generated code, which erodes net efficiency gains unless that review is handled systematically.

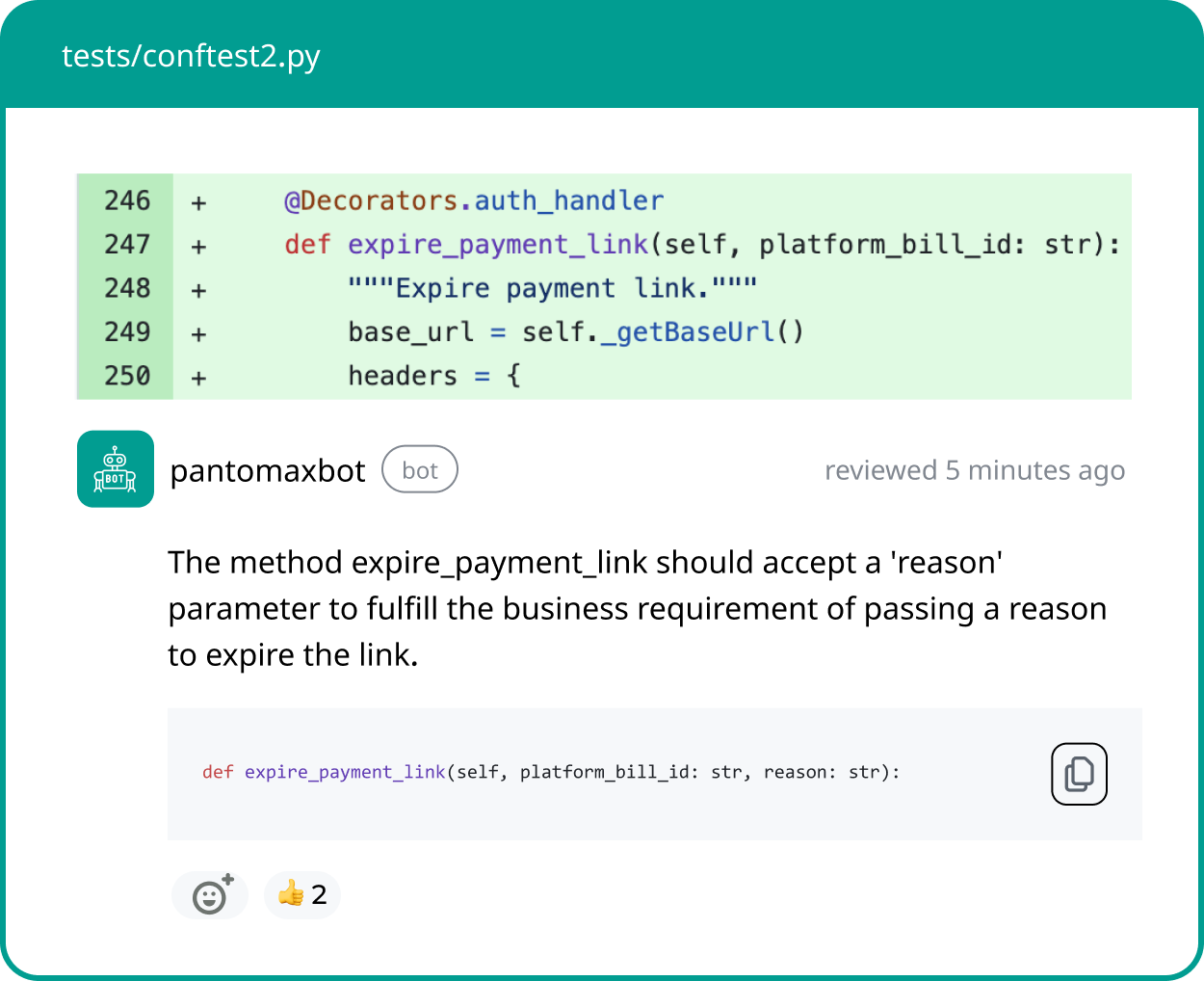

Mitigation Strategies That Work

Data shows that quality improves when AI use is embedded in a governance process:

- Teams using AI-assisted code review report 35% higher quality improvements

- Successful organizations treat AI as a first-draft generator, not a final authority
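To make “AI as a first draft, not a final authority” concrete, here is a minimal Python sketch of a merge gate. Everything in it is hypothetical: the `PullRequest` fields and the `merge_allowed` function are illustrative names, not any Git host’s API. The rules themselves (passing tests for every change, plus at least one human approval and a passing security scan for AI-assisted changes) are assumptions about what such a policy might require.

```python
from dataclasses import dataclass


@dataclass
class PullRequest:
    # Hypothetical fields; in practice these would come from your Git host and CI.
    ai_assisted: bool
    human_approvals: int
    tests_passed: bool
    security_scan_passed: bool


def merge_allowed(pr: PullRequest) -> tuple[bool, list[str]]:
    """Return whether the PR may merge, plus the reasons it may not."""
    reasons = []
    if not pr.tests_passed:
        reasons.append("automated tests must pass")
    if pr.ai_assisted:
        # Treat AI output as a first draft: a human must sign off on it.
        if pr.human_approvals < 1:
            reasons.append("AI-assisted changes need at least one human approval")
        if not pr.security_scan_passed:
            reasons.append("AI-assisted changes need a passing security scan")
    return (not reasons, reasons)


ok, why = merge_allowed(
    PullRequest(ai_assisted=True, human_approvals=0,
                tests_passed=True, security_scan_passed=True)
)
print(ok, why)  # False ['AI-assisted changes need at least one human approval']
```

Keeping the rules in one pure function makes the policy easy to test and audit; a real deployment would more likely express the same rules through the Git host’s branch protection settings.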

Enterprise and Industry Adoption

AI Coding at Enterprise Scale

Enterprise uptake is both deep and broad:

- GitHub Copilot:

  - 20+ million users (mid-2025)

  - Used by 90% of Fortune 100 companies

  - Enterprise deployments growing ~75% quarter over quarter in 2025

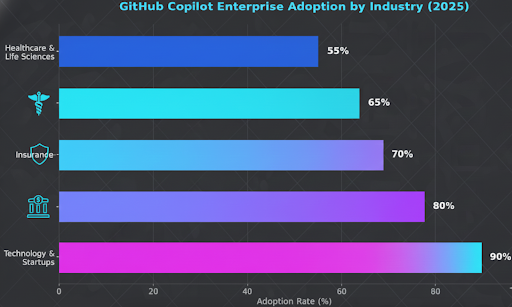

Reported adoption rates by industry:

- ~90% of technology firms

- ~80% of banking and finance teams

- ~70% of insurance organizations

- ~50–65% of retail and healthcare enterprises

Overall, 91% of engineering organizations have adopted at least one AI coding tool.

Why Enterprises Adopt Despite Trust Issues

Enterprises accept AI trade-offs because:

- Developer productivity gains are material

- Talent shortages persist

- Time-to-market pressures continue to increase

However, adoption is increasingly paired with:

- Security reviews

- Policy enforcement

- Model governance controls

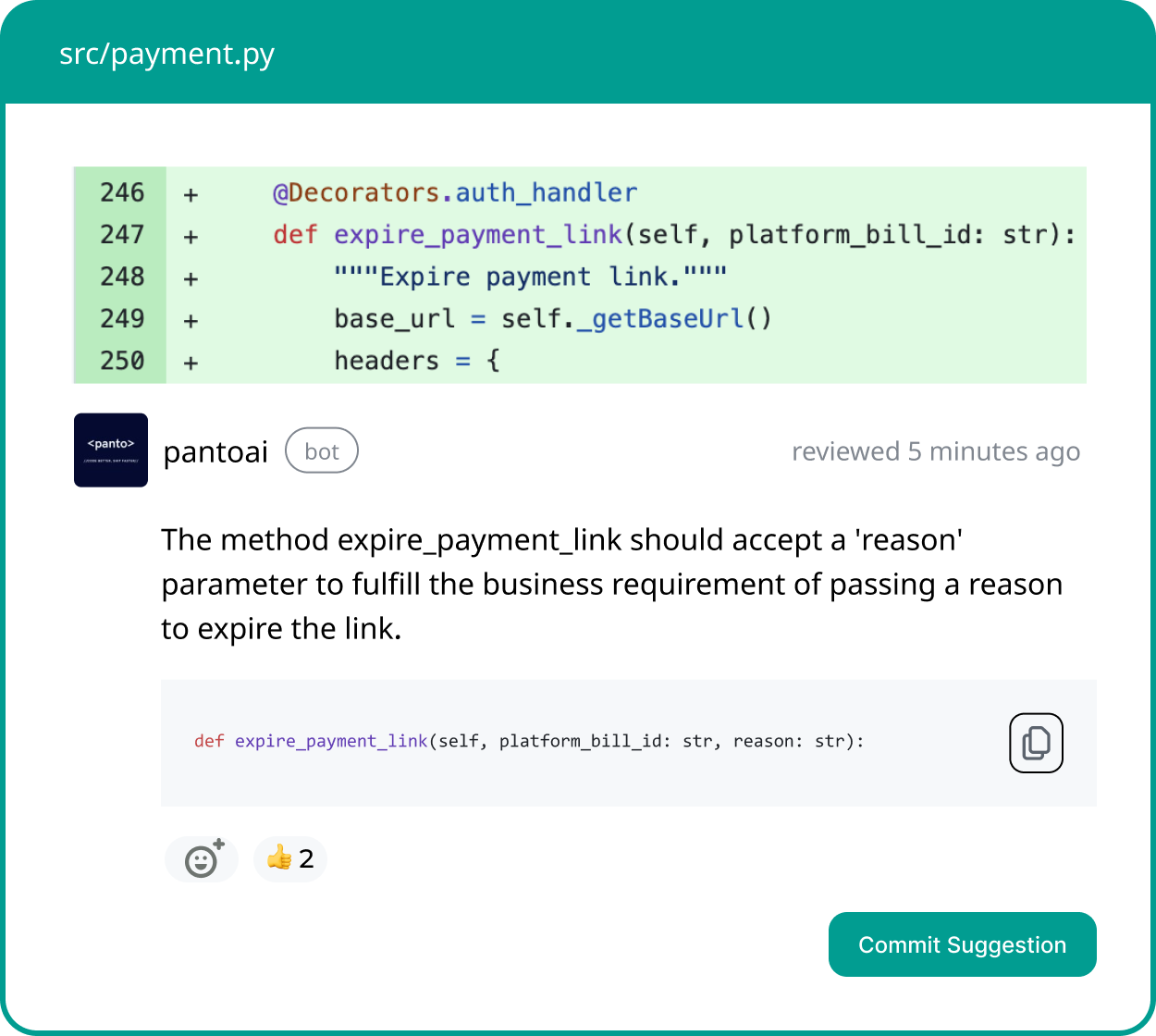


Market Size and Growth Forecasts

AI Coding Assistant Market Outlook

Conservative, coding-specific forecasts show steady growth:

- $3.7–3.9B (2024–25)

- $6–6.6B by 2035

- CAGR: ~5–5.5%

Broader AI assistant market forecasts are more aggressive:

- $3.35B (2025) → $21.11B (2030)

- CAGR: ~44%

While coding assistants alone grow more moderately, they remain a durable, expanding segment of the developer tools ecosystem.
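Both growth rates can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The Python sketch below applies it to the quoted figures. For the coding-specific forecast it assumes that the $3.9B figure is the 2025 base and that $6.6B is the 2035 value. The source gives only ranges, so that pairing is an assumption.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Broader AI assistant market: $3.35B (2025) -> $21.11B (2030).
broad = cagr(3.35, 21.11, 2030 - 2025)

# Coding-specific market, ASSUMING $3.9B is the 2025 base and $6.6B the 2035 value.
coding = cagr(3.9, 6.6, 2035 - 2025)

print(f"Broader AI assistant market CAGR: {broad:.1%}")
print(f"Coding-specific market CAGR: {coding:.1%}")
```

This prints about 44.5% for the broader market, in line with the ~44% quoted, and about 5.4% for the coding-specific pairing, inside the ~5–5.5% range. Pairing $3.7B (2024) with $6B (2035) instead gives roughly 4.5%, so the quoted range is best read as approximate.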

Regional Growth Drivers

- Asia-Pacific is the fastest-growing region

- China and India are leading expansion markets

- North America and Europe maintain high absolute adoption

Global Landscape and 2026 Outlook

AI coding assistants are now used by developers in nearly every country:

- JetBrains’ 2025 survey: 24,534 developers across 194 countries

- Adoption rates are surprisingly uniform worldwide

What Changes in 2026

Industry consensus suggests a shift:

- 2025: “Year of AI speed”

- 2026: “Year of AI quality”

Key trends:

- Stronger emphasis on code review and security

- Expansion beyond coding into testing, DevOps, and documentation

- Greater scrutiny of model training data and compliance

AI assistants will remain standard tools—but organizations will become more selective and disciplined in how they use them.

What These AI Coding Assistant Statistics Mean for Engineering Leaders

For decision-makers, the data supports three conclusions:

- Adoption is no longer a differentiator. Not using AI coding assistants is now the outlier.

- Productivity gains are real but conditional. Value depends on workflow integration, not just tool access.

- Trust and quality determine ROI. Teams that invest in review, governance, and training outperform those that do not.

Final Takeaway

AI coding assistants are firmly embedded in global software development. By 2026, the competitive advantage will not come from adoption alone, but from how effectively teams balance speed, quality, and trust.