Independent data collected from developer surveys, open-source repository telemetry, and workforce research shows that AI-assisted coding tools are now widely used among professional software developers across most major economies.

However, adoption is uneven by country and far more complex than headline statistics suggest. While trial rates approach saturation in some regions, depth of integration, trust, and productivity outcomes vary significantly.

This article presents a consolidated, evidence-driven analysis of AI coding tools adoption statistics by country, covering 2024–2025 with a conservative outlook for 2026.

Methodology

This analysis prioritizes non-vendor, independently verifiable datasets wherever possible.

No single source is treated as authoritative.

1. Data categories used

- Large-scale, multi-country developer surveys (e.g., Stack Overflow, JetBrains Developer Ecosystem)

- Open-source code telemetry (peer-reviewed analysis of GitHub repositories)

- Workforce and macro AI adoption indices (labor economics and AI diffusion studies)

- Security audits and academic evaluations of AI-generated code

2. What is measured vs estimated

Measured directly

- Developer self-reported usage rates

- Daily vs occasional usage frequency

- AI-assisted share of public code contributions

- Reported trust, satisfaction, and risk concerns

Estimated cautiously

- Country-level comparisons where surveys lack coverage

- Enterprise adoption vs individual adoption gaps

- Forward-looking trends for 2026

All estimates are conservative and explicitly contextualized.

3. Defining AI coding tools (scope)

This article focuses on AI-assisted coding tools used by professional developers, including:

- IDE-integrated code completion and suggestion systems

- Natural-language-to-code assistants

- Refactoring, testing, and documentation assistants

Excluded from scope:

- Student-only usage

- Low-code/no-code platforms

- General-purpose chatbots used outside coding workflows

4. Global baseline: adoption among professional developers

Across multiple independent datasets in 2024–2025:

- 76–85% of professional developers report using AI coding tools

- ~50% report daily usage

- 15–20% report no use or active avoidance

This indicates widespread exposure but uneven operational reliance.

5. Trial vs sustained usage

A critical distinction often missed:

- “Have you ever used an AI coding tool?” → near saturation in the leading markets (~90–99%)

- “Do you rely on AI tools daily?” → roughly half

This gap explains many contradictions in productivity claims.

AI coding tools adoption statistics by country

Country comparison of professional developers using AI coding tools

| Country | Developer Trial Rate* | Estimated Daily Usage | AI-Assisted Share of New Code** | Enterprise Allowance Level | Key Constraint |
|---|---|---|---|---|---|
| United States | ~99% | ~55% | ~29% | High | Security & code quality |
| India | ~99% | ~50% | ~20% | Medium–High | Review overhead |
| Germany | ~97% | ~45% | ~23% | Medium | Regulation & IP risk |
| France | n/a | ~45–50% | ~24% | Medium | Enterprise governance |
| United Kingdom | ~95–98% | ~50% | ~25% (est.) | High | Trust & compliance |
| Brazil | ~99% | ~45–50% | n/a | Medium | Infrastructure variance |
| China | n/a | ~30–35% | ~12% | Medium | Model access & policy |
| Japan | ~90–95% | ~40% | ~18% (est.) | Medium | Language/model fit |
| South Korea | ~90–95% | ~45% | ~20% (est.) | Medium | Enterprise caution |
| Global Average | ~76–85% | ~50% | n/a | Mixed | Trust & security |

Footnotes

* Developer Trial Rate

Percentage of professional developers who report having used an AI coding tool at least once.

Derived from multi-country independent developer surveys (Stack Overflow, JetBrains).

** AI-Assisted Share of New Code

Percentage of newly committed public-source code inferred to be AI-generated or AI-assisted.

Derived from peer-reviewed open-source repository telemetry (Python repositories).

Why survey and telemetry differ

Surveys measure self-reported behavior.

Telemetry measures observable output.

Countries often show high survey usage but lower code-share integration.
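To make the survey-versus-telemetry contrast concrete, the sketch below takes the figures from the country comparison table and computes two rough indicators per country: the ratio of daily usage to trial rate, and the gap between daily usage and AI-assisted code share. Collapsing ranges to their midpoints and treating "n/a" as missing are assumptions of this sketch; the names `TABLE` and `summarize` are illustrative.

```python
from typing import Dict, Optional, Tuple

# Values copied from the country comparison table. Ranges such as "~45–50%"
# are collapsed to their midpoints and "n/a" becomes None (assumptions made
# for this sketch). UK, Japan and South Korea code shares are table estimates.
# country: (trial rate %, daily usage %, AI-assisted share of new code %)
TABLE: Dict[str, Tuple[Optional[float], float, Optional[float]]] = {
    "United States": (99.0, 55.0, 29.0),
    "India": (99.0, 50.0, 20.0),
    "Germany": (97.0, 45.0, 23.0),
    "France": (None, 47.5, 24.0),
    "United Kingdom": (96.5, 50.0, 25.0),
    "Brazil": (99.0, 47.5, None),
    "China": (None, 32.5, 12.0),
    "Japan": (92.5, 40.0, 18.0),
    "South Korea": (92.5, 45.0, 20.0),
}


def summarize(table):
    """Return {country: (daily/trial ratio, daily-use minus code-share points)}."""
    out = {}
    for country, (trial, daily, share) in table.items():
        # Rough trial-to-daily conversion; assumes daily users are a subset
        # of developers who have tried a tool.
        conversion = round(daily / trial, 2) if trial is not None else None
        # Survey (share of developers) vs telemetry (share of code): the two
        # measure different things, so this gap is a contrast, not a rate.
        gap = daily - share if share is not None else None
        out[country] = (conversion, gap)
    return out


if __name__ == "__main__":
    for country, (conv, gap) in summarize(TABLE).items():
        print(f"{country:<15} trial->daily={conv}  survey-telemetry gap={gap}")
```

Because the two columns measure different populations (developers versus lines of code), the gap is best read as a ranking signal rather than a precise quantity.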

Regional patterns and drivers

United States

The US leads on most adoption metrics.

Key drivers:

- Early access to AI coding tools

- High cloud and IDE penetration

- Strong enterprise permissiveness

In the US, nearly one-third (~29%) of new public Python code shows AI assistance.

India

India shows near-universal trial adoption.

Drivers include:

- Large, young developer workforce

- Strong outsourcing and export incentives

- Rapid uptake of productivity and automation tools

However, deeper integration still lags the US: about 20% of new public Python code shows AI assistance, versus ~29% in the US.

Western Europe (Germany, France)

Adoption is strong but cautious.

Characteristics:

- High individual usage

- Lower enterprise encouragement

- Strong regulatory and compliance concerns

Germany illustrates the “high use, low trust” pattern.

China

China lags on observable AI code share.

Key constraints:

- Restricted access to Western LLMs

- Regulatory scrutiny of AI outputs

- Reliance on emerging domestic models

Adoption is growing, but uneven.

Usage intensity and workflow integration

Daily vs occasional users

Independent surveys show:

- ~50% daily users

- ~25% weekly or occasional

- ~25% minimal or no use

This split holds broadly at the global level; by country, daily usage ranges from roughly 30–35% (China) to ~55% (United States).

What developers actually use AI for

Most common use cases:

- Boilerplate code generation

- Syntax completion

- Documentation and comments

- Language translation

Less common:

- Architecture design

- Security-critical code

- Complex business logic

Productivity effects: measured, not claimed

Aggregate productivity impact

Independent academic analysis estimates:

- ~3–5% average productivity gain

- Gains concentrated in:

  - Experienced developers

  - Well-structured codebases

This is meaningful but not transformative.
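To put a ~3–5% gain in perspective, the arithmetic below converts it into developer-hours per week. It assumes a 40-hour week and a gain applied uniformly across a team; both are simplifying assumptions, since the evidence above says gains concentrate among experienced developers. `equivalent_hours` is a hypothetical helper.

```python
def equivalent_hours(gain: float, team_size: int = 1, hours_per_week: float = 40.0) -> float:
    """Extra weekly output from a fractional productivity gain, in developer-hours.

    Illustrative assumptions: a 40-hour week and a gain applied uniformly to
    every team member (in practice gains are uneven across experience levels).
    """
    return gain * hours_per_week * team_size


if __name__ == "__main__":
    for gain in (0.03, 0.05):
        hours = equivalent_hours(gain, team_size=10)
        print(f"{gain:.0%} gain, 10-person team: ~{hours:.0f} h/week "
              f"(~{hours / 40:.1f} extra full-time developers)")
```

For a ten-person team this works out to roughly 12–20 hours a week, or less than half of one additional developer: useful, but far from a step change.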

Experience-level paradox

Observed pattern:

- Junior developers use AI more

- Senior developers benefit more

This creates uneven productivity distribution within teams.

Negatives and failure modes

Security vulnerabilities

Independent audits consistently find:

- ~45% of AI-generated code samples tested contain security flaws

- Frequent issues include:

  - SQL injection

  - Unsafe deserialization

  - Weak cryptography

Manual code review remains essential.
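The sketch below illustrates two of the flagged patterns using only Python's standard library: a query built by string formatting versus a parameterized `sqlite3` query, and unsalted MD5 versus salted PBKDF2 for password storage. The function names are hypothetical, and the 600,000-iteration count follows OWASP's published recommendation for PBKDF2-HMAC-SHA256 at the time of writing; tune it to your own hardware and policy.

```python
import hashlib
import hmac
import os
import sqlite3


def find_user_unsafe(conn, username):
    # Pattern auditors flag: user input is spliced into the SQL text,
    # so a value like "x' OR '1'='1" changes the query's logic.
    return conn.execute(
        f"SELECT id, name FROM users WHERE name = '{username}'"
    ).fetchall()


def find_user_safe(conn, username):
    # Parameterized query: the driver passes username as data, never as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (username,)
    ).fetchall()


def hash_password_weak(password):
    # Weak: fast, unsalted MD5 is unsuitable for password storage.
    return hashlib.md5(password.encode()).hexdigest()


def hash_password(password, salt=None):
    # Stronger: salted, deliberately slow key derivation (PBKDF2-HMAC-SHA256).
    salt = salt or os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 600_000)
    return salt, digest


def verify_password(password, salt, expected):
    # Constant-time comparison avoids leaking timing information.
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    payload = "x' OR '1'='1"
    print(len(find_user_unsafe(conn, payload)))  # 2: every row matches
    print(len(find_user_safe(conn, payload)))    # 0: no user has that name
```

Both versions of each function run without errors and return plausible results on normal input, which is why these flaws survive casual testing and have to be caught in review.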

Toolchain attack surfaces

Recent research shows:

- Prompt injection can compromise IDEs

- AI agents may access unintended files

- Malicious dependencies can exploit AI behavior

These risks scale with deeper integration.
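One common mitigation for agents reaching unintended files is to confine every path an agent requests to the project workspace before any read or write happens. The following is a minimal sketch of that idea, assuming an agent framework that lets you intercept file paths before a tool call executes; `resolve_in_workspace` and `is_allowed` are hypothetical names.

```python
from pathlib import Path
from typing import Union


def resolve_in_workspace(workspace: Union[str, Path], requested: str) -> Path:
    """Resolve a path an AI agent asks to read or write, confined to `workspace`.

    ".." segments and symlinks are resolved before the check, so
    "../../etc/passwd", an absolute path, or a symlink that points outside
    the root is rejected. Requires Python 3.9+ for Path.is_relative_to.
    """
    root = Path(workspace).resolve()
    # Joining an absolute `requested` replaces root entirely; the check below
    # then rejects it.
    candidate = (root / requested).resolve()
    if not candidate.is_relative_to(root):
        raise PermissionError(f"{requested!r} is outside the workspace {root}")
    return candidate


def is_allowed(workspace: Union[str, Path], requested: str) -> bool:
    """Boolean form for use as a gate before a tool call runs."""
    try:
        resolve_in_workspace(workspace, requested)
        return True
    except PermissionError:
        return False
```

A check like this narrows only one attack surface: it does not stop prompt injection itself, and a symlink created after the check passes can still redirect access, so operating-system-level sandboxing remains advisable.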

Code quality issues

Common failures:

- Incorrect logic with valid syntax

- Hallucinated libraries or APIs

- Misaligned architectural patterns

Developers report time lost validating AI output.
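Hallucinated libraries are one failure that can be partly automated away. The sketch below parses a Python snippet without running it and lists imported top-level modules that cannot be found in the current environment; `unresolved_imports` is a hypothetical helper, and the approach assumes the snippet is meant to run in that same environment.

```python
import ast
import importlib.util
from typing import List


def unresolved_imports(source: str) -> List[str]:
    """Top-level modules imported by `source` that are not installed locally.

    A missing module is a strong hint of a hallucinated dependency; the check
    does not execute `source` and does not verify function or attribute names.
    """
    names = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.level == 0 and node.module:
            # Relative imports (level > 0) refer to the local package; skip them.
            names.add(node.module.split(".")[0])
    # find_spec on a top-level name locates the module without importing it.
    return sorted(n for n in names if importlib.util.find_spec(n) is None)
```

When a name is reported missing, installing whatever package the assistant suggested is not a safe fix, since anyone can publish a package under a previously unused name; confirm the dependency exists and is the intended one first.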

Trust erosion

Survey trends show:

- Declining trust year-over-year

- Fewer than half of developers fully trust AI output

- Increased verification overhead

Adoption outpaces confidence.

Skill atrophy concerns

Developers report concerns about:

- Reduced problem-solving practice

- Shallow understanding of generated code

- Over-dependence on suggestions and summaries

Long-term effects remain unquantified.

Enterprise vs individual adoption gap

Individual behavior

Most developers experiment freely.

They adopt tools opportunistically.

Enterprise governance

Organizations are more cautious.

Barriers include:

- IP leakage concerns

- Compliance requirements

- Auditability of AI outputs

This gap creates shadow usage.
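Auditability is easier when AI assistance is recorded at commit time. The sketch below assumes a hypothetical team convention, an `AI-Assisted:` git trailer, and summarizes how many commits declare it; neither the trailer name nor the helper functions come from any standard or tool.

```python
import re
from typing import Dict, List, Optional

# Hypothetical convention (not a git or industry standard): the last
# paragraph of an AI-assisted commit message carries a trailer such as
# "AI-Assisted: assistant-x".
TRAILER = re.compile(r"^AI-Assisted:[ \t]*(\S.*)$", re.IGNORECASE | re.MULTILINE)


def ai_tool_from_message(message: str) -> Optional[str]:
    """Return the tool named in the trailer, or None if the commit is unflagged."""
    # Git treats only the final paragraph of a message as the trailer block.
    last_paragraph = message.strip().split("\n\n")[-1]
    match = TRAILER.search(last_paragraph)
    return match.group(1).strip() if match else None


def audit(messages: List[str]) -> Dict[str, object]:
    """Summarize how many commits declare AI assistance, and with which tools."""
    tools: Dict[str, int] = {}
    for msg in messages:
        tool = ai_tool_from_message(msg)
        if tool is not None:
            tools[tool] = tools.get(tool, 0) + 1
    flagged = sum(tools.values())
    return {
        "total": len(messages),
        "flagged": flagged,
        "share": flagged / len(messages) if messages else 0.0,
        "tools": tools,
    }
```

Messages can be collected with `git log --format=%B%x00` and split on the NUL byte. Because shadow usage is by definition undeclared, the flagged share is a lower bound, not a measurement of actual AI use.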

What most articles miss

Adoption is multi-stage

Stages include:

- Trial and experimentation

- Regular individual use

- Developer workflow integration

- Enterprise standardization

Most countries sit between the second stage (regular individual use) and the third (developer workflow integration).

Code share matters more than surveys

Telemetry reveals:

- Real usage intensity

- Structural differences missed by polling

Future research should prioritize output-based metrics.
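The difference between polling and output-based metrics can be shown on toy data. In the hypothetical history below, most authors use AI at least once (what a survey captures), yet AI-assisted lines are a small fraction of what they commit (what telemetry captures). The `Commit` record and both metric functions are illustrative assumptions, and the numbers are invented for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Commit:
    author: str
    lines_added: int
    ai_assisted: bool  # assumed input, e.g. from a detector or a commit trailer


def developer_share(commits: List[Commit]) -> float:
    """Survey-style metric: fraction of authors with any AI-assisted commit."""
    authors = {c.author for c in commits}
    users = {c.author for c in commits if c.ai_assisted}
    return len(users) / len(authors) if authors else 0.0


def line_share(commits: List[Commit]) -> float:
    """Telemetry-style metric: AI-assisted lines as a fraction of all new lines."""
    total = sum(c.lines_added for c in commits)
    ai = sum(c.lines_added for c in commits if c.ai_assisted)
    return ai / total if total else 0.0


if __name__ == "__main__":
    # Toy history (hypothetical numbers): two of three authors use AI,
    # but only for a small slice of what they commit.
    history = [
        Commit("a", 100, True), Commit("a", 300, False),
        Commit("b", 50, True), Commit("b", 450, False),
        Commit("c", 100, False),
    ]
    print(f"developer share: {developer_share(history):.0%}")  # 67%
    print(f"line share:      {line_share(history):.0%}")       # 15%
```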

Local ecosystems distort global comparisons

Non-English and domestic models:

- Change adoption dynamics

- Are underrepresented in Western datasets

Country comparisons must account for this bias.

Adoption ≠ impact

High usage does not guarantee:

- Higher quality

- Faster delivery

- Better security

Outcomes depend on governance and skill.

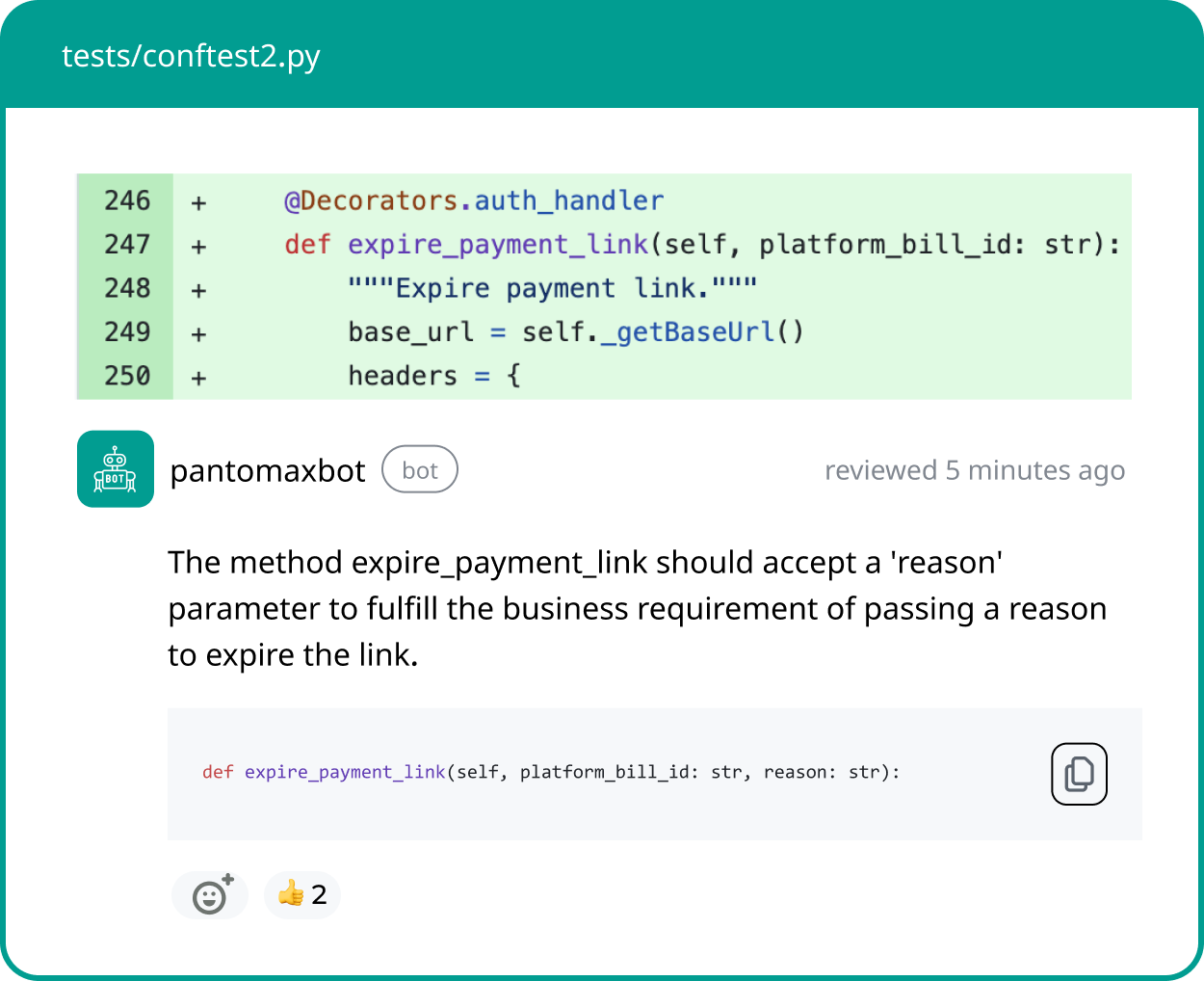

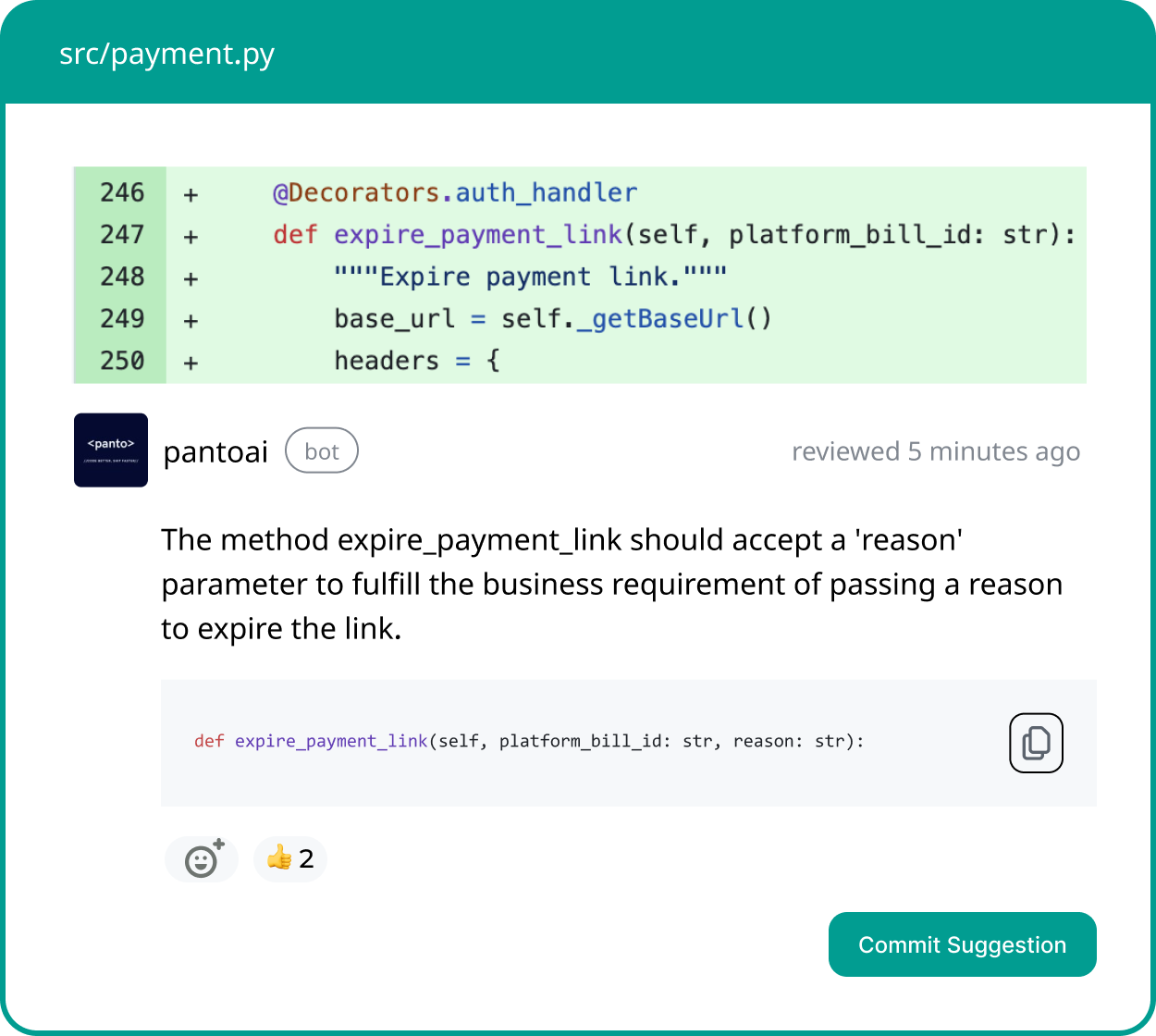


2026 outlook (conservative)

Expected trends

- Trial adoption plateaus globally

- Enterprise integration increases gradually

- Security tooling becomes mandatory

No evidence supports exponential gains.

Country-level outlook

- US: marginal growth, deeper integration

- India: continued growth, stronger governance

- Europe: cautious expansion with regulation

- China: moderate catch-up via domestic models

Gaps narrow slowly.

What will not happen

Unlikely by 2026:

- Massive developer displacement

- Autonomous software engineering

- Order-of-magnitude productivity jumps

Evidence does not support these narratives.

Conclusion

Taken together, the independent data show that professional developers across countries have largely embraced AI-assisted coding, but not without reservation.

Trial adoption is near saturation, yet trust, security, and integration maturity lag behind usage. Country differences are driven less by technical capability and more by regulation, enterprise policy, and developer demographics.

The defining question for 2026 is no longer whether developers use AI coding tools, but how safely, deeply, and effectively they are embedded into real-world software workflows.