GitHub Copilot has moved decisively from experimental developer tooling to foundational infrastructure in modern software engineering.

By 2026, Copilot is no longer evaluated solely on whether it accelerates coding, but on how it reshapes engineering systems: productivity measurement, code quality, review economics, security posture, and long-term maintainability.

Independent GitHub Copilot statistics from 2025–2026 reveal a consistent pattern. While developers report faster task completion and reduced friction during code authoring, organizations experience limited improvement in end-to-end delivery throughput.

Instead, Copilot introduces second-order effects: larger pull requests, higher code review costs, downstream security risk, and diluted code ownership.

This article synthesizes non-vendor data from developer surveys, repository telemetry, security research, and engineering productivity analytics to provide a decision-grade view of GitHub Copilot’s real impact.

The goal is not to argue for or against Copilot adoption, but to clarify what engineering leaders must measure, constrain, and rebalance as AI-assisted coding becomes the norm.

What Is GitHub Copilot?

GitHub Copilot is an AI-powered code generation and completion tool embedded into IDEs such as VS Code, JetBrains IDEs, and Neovim.

It uses large language models trained on publicly available code and natural language to generate functions, tests, comments, and entire files based on developer prompts and surrounding context.

From an organizational perspective, Copilot is positioned as a productivity multiplier.

However, GitHub Copilot statistics increasingly show that its most consequential effects are systemic rather than local: Copilot changes how code is produced, reviewed, understood, and owned over time.

Key GitHub Copilot Statistics

GitHub Copilot Metrics Overview

| Metric | Independent Estimate |
|---|---|
| Total Copilot activations | 20M+ |
| Monthly active users | 11–14M |
| Weekly active users | 7–9M |
| Daily active users | 4–6M |
| Developers using Copilot for >30% of code | ~2–3M |
| Median pull request size increase | 17–23% |
| Relative vulnerability likelihood | +20–30% |

These figures establish an important baseline: Copilot adoption is broad, but deep dependency is concentrated in a smaller subset of developers and their teams. The magnitude of Copilot’s impact scales with usage depth, not mere presence.

1. Copilot Adoption Is Broad but Shallow

Active Usage Stratification

Independent telemetry consistently shows a steep drop-off from activation to sustained, high-dependency usage.

| Usage Layer | Estimated Users |
|---|---|
| Activated Copilot accounts | 20M+ |
| Monthly active users | 11–14M |
| Weekly active users | 7–9M |
| Daily active users | 4–6M |
| Heavy users (>30% of code generated) | 2–3M |

This stratification matters. Teams with shallow or occasional Copilot usage show little to no measurable change in delivery performance. Teams with deep adoption exhibit more pronounced effects, both positive and negative.
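To make the drop-off concrete, the following minimal sketch converts the ranges in the usage table into shares of activated accounts. It assumes the midpoint of each range is a fair point estimate and treats "20M+" as exactly 20 million; both are simplifications for illustration, not part of the underlying data.

```python
# Ranges in millions of users, copied from the usage table.
# Assumption: "20M+" is treated as exactly 20, and each range is
# summarized by its midpoint.
funnel = [
    ("activated", (20.0, 20.0)),
    ("monthly_active", (11.0, 14.0)),
    ("weekly_active", (7.0, 9.0)),
    ("daily_active", (4.0, 6.0)),
    ("heavy_users", (2.0, 3.0)),
]


def midpoint(bounds):
    """Return the midpoint of a (low, high) range."""
    low, high = bounds
    return (low + high) / 2


def share_of_activated(layers):
    """Express each layer's midpoint as a fraction of the top layer."""
    top = midpoint(layers[0][1])
    return {name: midpoint(bounds) / top for name, bounds in layers}


shares = share_of_activated(funnel)
for name, share in shares.items():
    print(f"{name:>15}: {share:.1%} of activated accounts")
```

Under these assumptions, heavy users come out at roughly one in eight activated accounts, which is the group where the effects discussed in the rest of this article are most likely to show up.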

Why Adoption Depth Matters More Than Adoption Breadth

Copilot’s influence is nonlinear. Light usage primarily accelerates routine tasks. Heavy usage reshapes development workflows, review dynamics, and cognitive ownership.

Organizations that fail to distinguish between these two modes often misinterpret Copilot’s impact.

2. Copilot Does Not Eliminate Work; It Redistributes It

Local Task Acceleration

GitHub Copilot reliably accelerates:

- Syntax recall and API usage

- Boilerplate and scaffolding

- Test skeleton generation

- Repetitive implementation patterns

These gains are real and measurable at the individual task level.

System Throughput Remains Largely Unchanged

Despite faster code writing, system-level metrics show limited improvement:

- Lead time to production remains flat

- Incident resolution time does not decline

- Post-merge defect rates show minimal change

The result is a productivity illusion: developers feel faster, but organizational throughput does not increase proportionally.
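One way to see why faster authoring does not translate into proportional throughput is a simple bottleneck calculation in the style of Amdahl's law. The sketch below is an illustration only: the share of lead time spent writing code and the authoring speedup are hypothetical inputs, not figures from the data above.

```python
def end_to_end_speedup(coding_share, coding_speedup):
    """Overall lead-time speedup when only the coding portion gets faster.

    coding_share:   fraction of total lead time spent writing code (0..1)
    coding_speedup: how many times faster code writing becomes (> 0)
    """
    if not 0 <= coding_share <= 1:
        raise ValueError("coding_share must be between 0 and 1")
    if coding_speedup <= 0:
        raise ValueError("coding_speedup must be positive")
    # Time that is not coding is unchanged; coding time shrinks by the speedup.
    remaining_time = (1 - coding_share) + coding_share / coding_speedup
    return 1 / remaining_time


# Hypothetical example: coding is 25% of lead time and becomes 1.5x faster.
overall = end_to_end_speedup(coding_share=0.25, coding_speedup=1.5)
print(f"Overall speedup: {overall:.3f}x")
```

With these hypothetical inputs, a 50% faster authoring step yields only about a 9% improvement in end-to-end lead time, and that is before any extra review or debugging time is added back.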

3. Code Volume Inflation Is a First-Order Effect

Empirical Observations

Across repositories with sustained Copilot usage:

- Median pull request size increases by 17–23%

- Helper abstractions grow faster than core logic

- AI-generated tests contain fewer assertions per line of code
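Teams that want to check these observations against their own repositories could start from something like the sketch below. It assumes pull request sizes are available as lists of changed-line counts and that tests are Python files using bare `assert` statements; the sample numbers and the helper names are hypothetical.

```python
import ast
from statistics import median


def median_size_change(before, after):
    """Relative change in median pull request size between two periods."""
    base = median(before)
    return (median(after) - base) / base


def assertions_per_line(test_source):
    """Count `assert` statements per non-blank, non-comment line of test code.

    Limitation: unittest-style calls such as self.assertEqual are not counted.
    """
    tree = ast.parse(test_source)
    asserts = sum(isinstance(node, ast.Assert) for node in ast.walk(tree))
    code_lines = [
        line for line in test_source.splitlines()
        if line.strip() and not line.strip().startswith("#")
    ]
    return asserts / len(code_lines) if code_lines else 0.0


# Hypothetical changed-line counts per pull request, before and after adoption.
sizes_before = [120, 80, 200, 150, 100]
sizes_after = [144, 95, 230, 170, 130]
change = median_size_change(sizes_before, sizes_after)
print(f"Median PR size change: {change:+.0%}")

sample_test = (
    "def test_add():\n"
    "    result = 1 + 1\n"
    "    assert result == 2\n"
    "    assert result > 0\n"
)
density = assertions_per_line(sample_test)
print(f"Assertions per line: {density:.2f}")
```

Tracking both numbers over time, rather than as a one-off snapshot, makes it easier to tell a tooling effect from ordinary variation between projects.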

Why Copilot Inflates Code

Large language models optimize for likelihood and explicitness rather than minimality. They favor verbose patterns, redundant checks, and commonly seen idioms from training data. The result is code accretion rather than code compression.

This matters because code volume directly correlates with review effort, maintenance cost, and long-term defect risk.

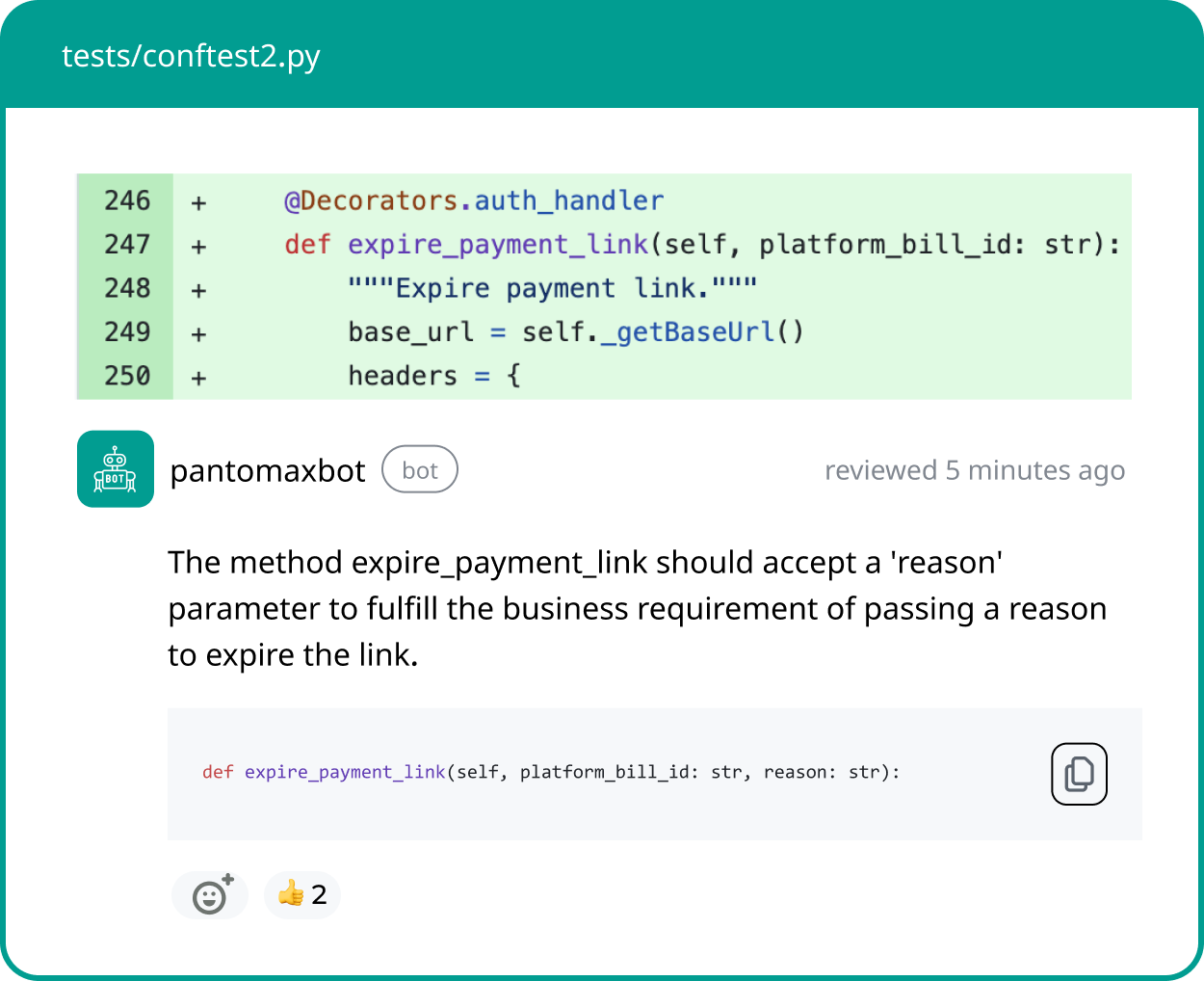

4. Review Cost Is Rising Faster Than Writing Cost Is Falling

Reviewer Behavior Shifts

Engineering analytics indicate that reviewers increasingly:

- Skim AI-generated diffs rather than reason deeply

- Approve based on surface correctness

- Miss latent coupling and edge-case failures

This behavior is rational under volume pressure, but it increases systemic risk.

Senior Engineer Load Concentration

Copilot shifts cognitive burden upward. Senior engineers must validate AI output, preserve architectural coherence, and detect subtle failures.

This reverses the expected leverage curve of automation, concentrating responsibility rather than distributing it.

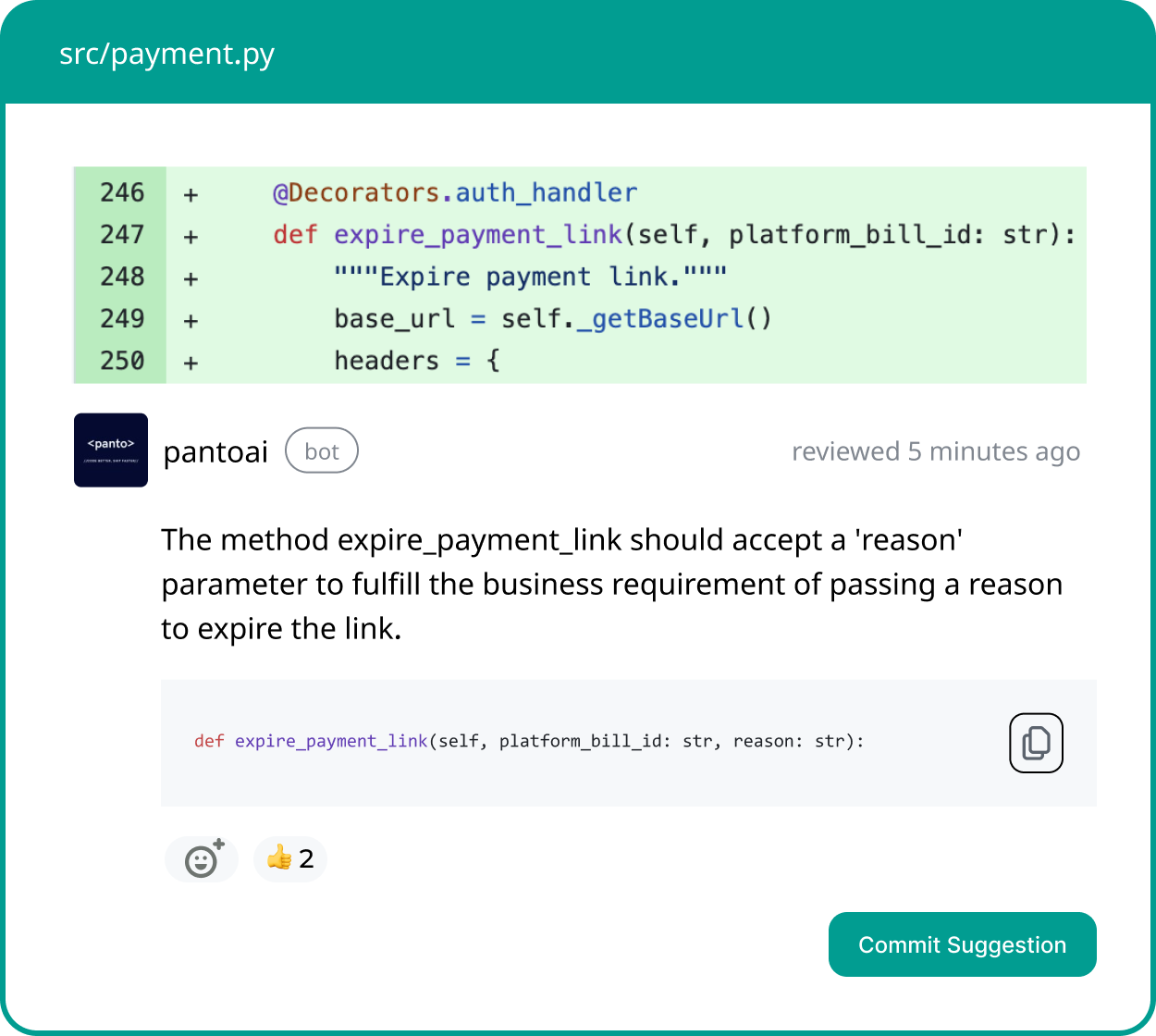


5. Security Risk Accumulation Is Measurable

Pattern Replication Risk

Security-focused analyses show that AI-generated code:

- Reproduces insecure Stack Overflow patterns

- Reintroduces deprecated cryptographic defaults

- Fails to contextualize threat models

Relative vulnerability likelihood increases by approximately 20–30% compared to human-authored baselines in comparable code.
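Because the 20–30% figure is relative, its practical meaning depends on a team's own baseline. The short sketch below applies the range to a baseline vulnerability rate; the baseline value used here is hypothetical and should be replaced with a measured one.

```python
def projected_rate_range(baseline_rate, relative_increase=(0.20, 0.30)):
    """Apply a relative increase range to a baseline vulnerability rate.

    baseline_rate:     vulnerabilities per change in human-authored code
    relative_increase: (low, high) relative uplift, defaulting to the
                       20-30% range quoted in the text
    """
    low, high = relative_increase
    return baseline_rate * (1 + low), baseline_rate * (1 + high)


# Hypothetical baseline: 5 vulnerabilities per 100 merged changes.
low_rate, high_rate = projected_rate_range(0.05)
print(f"Projected rate: {low_rate:.3f} to {high_rate:.3f} per change")
```

A relative uplift of this size is small in absolute terms when the baseline is low, but it compounds as the volume of merged code grows.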

False Confidence Amplification

Copilot introduces a distinctive risk vector: high perceived correctness combined with low verification effort. Under time pressure, this leads to silent failure modes that go undetected until production.

6. Cognitive Load Migration Explains the Productivity Paradox

Copilot reduces cognitive load during:

- Typing and recall

- Syntax construction

- API discovery

It increases cognitive load during:

- Debugging

- Incident response

- Security review

- Long-term maintenance

This migration explains why developers report feeling faster while managers observe stagnant throughput.

7. Copilot Changes Code Ownership Semantics

Ownership Dilution

Developers consistently report weaker mental models of code they did not author line by line. This leads to slower debugging, defensive coding, and reduced refactoring confidence.

Long-Term Implications

When institutional memory decays faster than the code itself changes, systems become harder to reason about. Copilot accelerates output but erodes shared understanding unless counterbalanced by strong review and documentation practices.

8. Hiring Market Signals a Skill Reversal

Declining Value

- Typing speed

- Syntax memorization

- Boilerplate familiarity

Increasing Value

- AI output evaluation

- Architectural reasoning

- Security intuition

- Review discipline

GitHub Copilot statistics from hiring pipelines point to a shift in what employers value, from production capacity to judgment and oversight.

9. Why Most Copilot Metrics Are Misleading

Commonly cited Copilot metrics emphasize:

- Adoption counts

- Lines of code written

- Time saved per task

They fail to measure:

- System stability

- Risk accumulation

- Maintenance cost

- Organizational learning

This measurement gap explains why many organizations overestimate Copilot’s net benefit.
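As a rough illustration of closing this gap, the sketch below records output-side and system-side metrics together and flags periods where output rose while system outcomes did not improve. The field names, the choice of metrics, and the sample values are all hypothetical; they stand in for whatever a team's own analytics can supply.

```python
from dataclasses import dataclass


@dataclass
class PeriodMetrics:
    # Output-side metrics of the kind that are commonly cited
    lines_written: int
    minutes_saved_per_task: float
    # System-side metrics of the kind that are usually missing
    lead_time_days: float
    post_merge_defects: int
    review_hours: float


def output_up_but_system_flat(before, after):
    """True when more code was produced but delivery outcomes did not improve."""
    output_up = after.lines_written > before.lines_written
    system_not_better = (
        after.lead_time_days >= before.lead_time_days
        and after.post_merge_defects >= before.post_merge_defects
    )
    return output_up and system_not_better


# Hypothetical quarter-over-quarter values for one team.
q1 = PeriodMetrics(10_000, 0.0, 6.0, 12, 40.0)
q2 = PeriodMetrics(12_000, 8.0, 6.0, 12, 52.0)
print(output_up_but_system_flat(q1, q2))
```

The point of the design is that neither group of metrics is reported alone: a rise in lines written or time saved is always read next to lead time, defects, and review hours for the same period.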

10. Projections for 2026 and Beyond

Likely Trends

- Continued adoption growth

- Larger pull requests

- Increased investment in review automation

- Higher security tooling spend

Unlikely Outcomes

- Fully autonomous production code

- Net reduction in engineering headcount

- Elimination of human oversight

GitHub Copilot scales output faster than understanding.

What GitHub Copilot Statistics Actually Tell Us

GitHub Copilot statistics in 2026 make one conclusion unavoidable: Copilot makes writing code cheaper, but makes owning code more expensive. It amplifies existing engineering behaviors rather than replacing them.

Organizations that succeed will not be those that adopt Copilot fastest, but those that measure its second-order effects, constrain its failure modes, and redesign review, code security, and ownership practices accordingly.

Final takeaway: Copilot is not a productivity tool in isolation. It is a force multiplier. In disciplined systems, it accelerates delivery. In weak systems, it accelerates entropy.

Next Step for Engineering Leaders

If your teams are using Copilot at scale, the next competitive advantage is not more AI but better instrumentation of review cost, security debt, and cognitive ownership.