Between 2018 and 2021, a popular VC thesis in India held that by 2025, every app would be a fintech app. Whether that thesis aged well is a discussion for another day.

But we are clearly seeing a similar pattern emerge in AI today.

Every AI company is becoming a code review agent.

From Microsoft to Linear. From Cursor to Cognition. From Slack to Glean. In 2026, almost every serious AI product seems to have a code review agent baked in.

And honestly, it makes sense.

There are very few layers in the developer workflow that consistently add value. Code reviews are one of them. They sit at the intersection of velocity, quality, and risk.

Naturally, everyone wants a piece of that pie.

This post is not about whether AI code review agents are inevitable. That part is already decided. This is about the nuance. How different organizations are approaching the problem, and what we learned building one.

The Original Thesis

It has been a year since we started working on the code review problem at Panto AI.

Our thesis was simple.

More and more AI-generated code will go into production. That code is inherently risky. It needs an organization-level check before it ships.

The real challenge is scale.

If humans review all of this code, they will soon spend more time reviewing than writing. That defeats the entire promise of AI-assisted development.

So the solution could not just be automated. It had to be deeply contextual. Something that understands an organization’s ecosystem, flags what is not ideal, and does it in a fraction of the time with zero human effort.

That is where the first real problem surfaced.

Noise.

The Shift From Writing Reviews to Reading Reviews

The mental model of code reviews has changed.

Earlier, developers spent most of their time writing reviews. Now, they spend most of their time reading them. At the same time, our collective attention span for reading has dropped significantly.

When we launched our first version, one thing became painfully obvious.

Code reviews are deeply subjective.

Different teams have different opinions. Different engineers within the same team have different opinions. Even the same engineer has different opinions depending on context, urgency, and timing.

But one pattern stood out strongly enough for us to double down on.

When a developer consistently found reviews not worth acknowledging, their likelihood of acknowledging even genuinely valuable comments in subsequent reviews dropped sharply.

The cost of an okayish review was not limited to that single PR.

It damaged future reviews.

Noise compounds.

Why Noise Is Harder Than Accuracy

Reducing noise is harder than ensuring correctness.

Accuracy can be optimized statically. Noise cannot.

One size will never fit all. What feels like noise to one team might be non-negotiable to another.

We started by sorting review comments into two broad buckets.

Comments that typically lead to code changes, and comments that usually do not.

From there, we made the system dynamic. If a certain category of comment was repeatedly ignored earlier, we reduced its prominence or stopped surfacing it altogether.
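The dynamic part can be pictured as a running tally per comment category. This is a minimal illustration, not Panto's implementation: it assumes every surfaced comment is later labeled by whether it led to a code change, and the `NoiseFilter` class, the 10-sample minimum, and the 20% suppression threshold are hypothetical choices.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class CategoryStats:
    """Running tally for one comment category (hypothetical structure)."""
    shown: int = 0
    acted_on: int = 0

    @property
    def acceptance_rate(self) -> float:
        # Fraction of surfaced comments in this category that led to a code change.
        return self.acted_on / self.shown if self.shown else 0.0


class NoiseFilter:
    """Stops surfacing categories that a team has repeatedly ignored.

    The thresholds are illustrative assumptions, not tuned values.
    """

    def __init__(self, min_samples: int = 10, suppress_below: float = 0.2) -> None:
        self.min_samples = min_samples        # history needed before judging a category
        self.suppress_below = suppress_below  # acceptance rate under which we go quiet
        self.stats: dict[str, CategoryStats] = defaultdict(CategoryStats)

    def record(self, category: str, led_to_change: bool) -> None:
        s = self.stats[category]
        s.shown += 1
        s.acted_on += int(led_to_change)

    def should_surface(self, category: str) -> bool:
        s = self.stats.get(category)  # .get() avoids creating empty entries
        if s is None or s.shown < self.min_samples:
            return True  # too little evidence: default to showing the comment
        return s.acceptance_rate >= self.suppress_below


if __name__ == "__main__":
    f = NoiseFilter()
    for _ in range(12):
        f.record("naming-nitpick", led_to_change=False)
    print(f.should_surface("naming-nitpick"))  # False: ignored 12 times out of 12
    print(f.should_surface("null-check"))      # True: no history yet
```

Defaulting to "show" when history is thin keeps a new team from losing a useful category before the system has seen how that team responds to it.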

Then came grading.

Validation issues. Critical bugs. Performance optimizations. Nitpicks.

Based on severity and historical behavior, we decided what should be shown prominently and what should fade into the background.
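Grading can then combine a comment's severity with how the team has historically responded to its category. The sketch below is hypothetical. The post names the four grades but not their exact ordering, so the ordering here is assumed. The 50/50 weighting, the tier thresholds, and the rule that critical bugs always appear inline are also illustrative assumptions.

```python
from enum import IntEnum


class Severity(IntEnum):
    # Assumed ordering of the four grades; the numeric values are illustrative.
    NITPICK = 1
    PERFORMANCE = 2
    VALIDATION = 3
    CRITICAL_BUG = 4


def prominence(severity: Severity, acceptance_rate: float) -> str:
    """Map a comment to 'inline', 'summary', or 'hidden'.

    acceptance_rate is the historical fraction (0.0 to 1.0) of comments in
    this category that led to a code change.
    """
    if not 0.0 <= acceptance_rate <= 1.0:
        raise ValueError("acceptance_rate must be between 0 and 1")
    if severity == Severity.CRITICAL_BUG:
        return "inline"  # assumption: history never buries a critical bug
    # Equal-weight blend of normalized severity and past team behavior.
    score = 0.5 * (severity / Severity.CRITICAL_BUG) + 0.5 * acceptance_rate
    if score >= 0.6:
        return "inline"
    if score >= 0.3:
        return "summary"
    return "hidden"
```

With these numbers, a validation issue in a category the team acts on 80% of the time scores 0.775 and goes inline. A nitpick the team acts on 5% of the time scores 0.15 and is hidden.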


Thumbs Up and Thumbs Down Do Not Work

Most competitors try to learn user preferences through explicit signals like thumbs up and thumbs down.

We tried this early as well.

It failed.

You cannot push developers to do extra work just because your system lacks nuance. In practice, people do not click feedback buttons. Especially in code reviews, where speed matters.

If your product depends on explicit feedback to improve relevance, it is already broken.
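One way to get relevance feedback without asking for clicks is to infer it from what developers already do, following the earlier split between comments that lead to code changes and comments that do not. Below is a minimal heuristic, not Panto's method. It assumes you can list the line ranges that later commits in the same pull request modified, in the same line numbering as the comment. In practice, lines would need to be mapped across commits. `ReviewComment`, `Hunk`, `was_acted_on`, and the two-line `slack` are hypothetical names and values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewComment:
    path: str
    start_line: int
    end_line: int  # inclusive


@dataclass(frozen=True)
class Hunk:
    """A line range changed by a later commit, in the comment's line numbering."""
    path: str
    start_line: int
    end_line: int  # inclusive


def was_acted_on(comment: ReviewComment, later_hunks: list[Hunk], slack: int = 2) -> bool:
    """Treat a comment as acted on if a later commit touched the lines it flagged.

    `slack` widens the comment's range slightly, since fixes often land on
    neighbouring lines. This is only a heuristic: it misses fixes made
    elsewhere and can count unrelated edits as acceptance.
    """
    lo = comment.start_line - slack
    hi = comment.end_line + slack
    # Two inclusive ranges overlap when each one starts before the other ends.
    return any(
        h.path == comment.path and h.start_line <= hi and h.end_line >= lo
        for h in later_hunks
    )
```

The output of a check like this could feed the per-category tallies directly, so relevance improves from normal work rather than from extra clicks.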

Being Native Matters More Than Being Cute

Keeping the interface clean and native is underrated.

Adding poems, jokes, or playful summaries to code reviews might feel like “delight” to a product manager. But the persona here is a developer. Not a kindergarten kid.

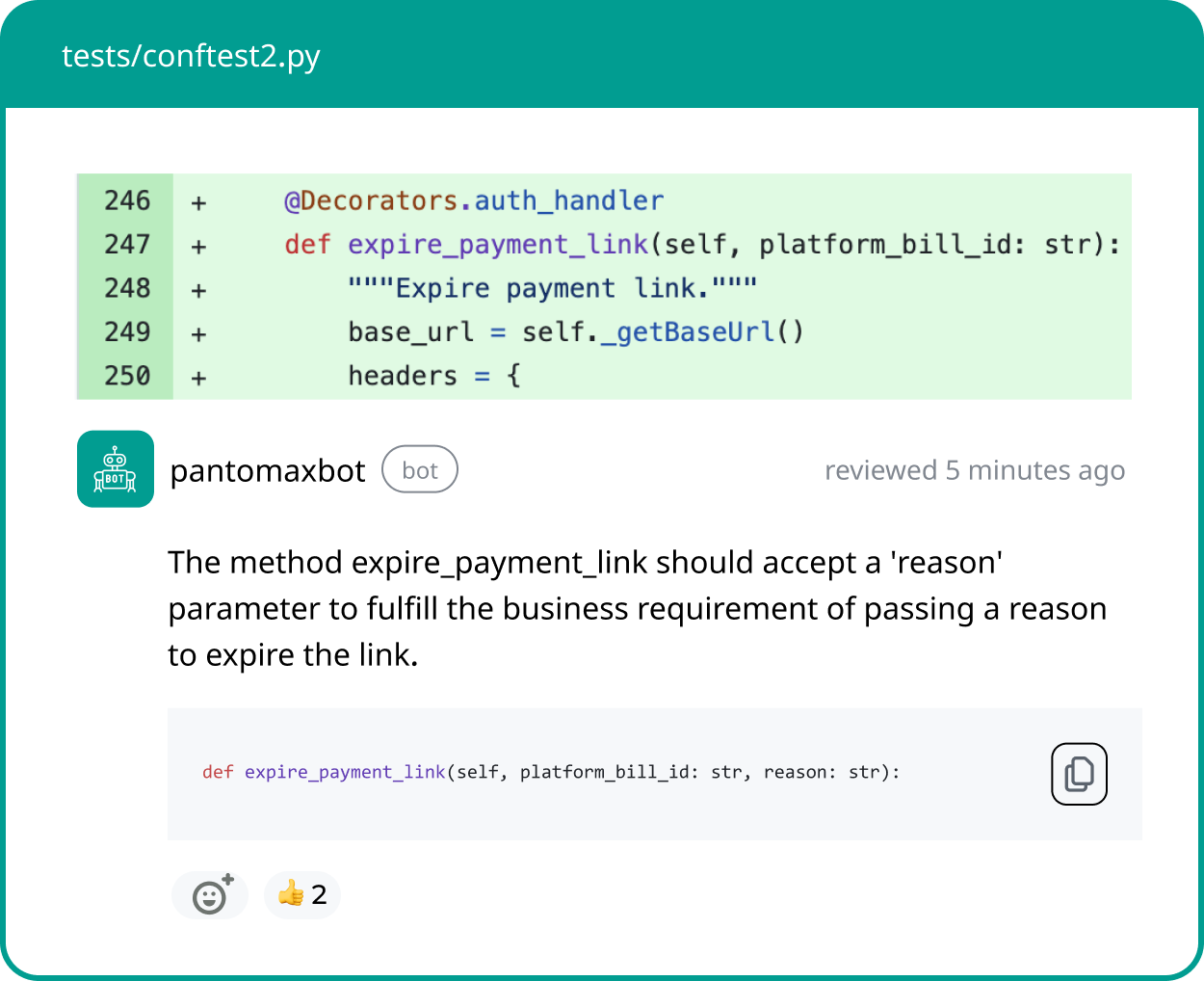

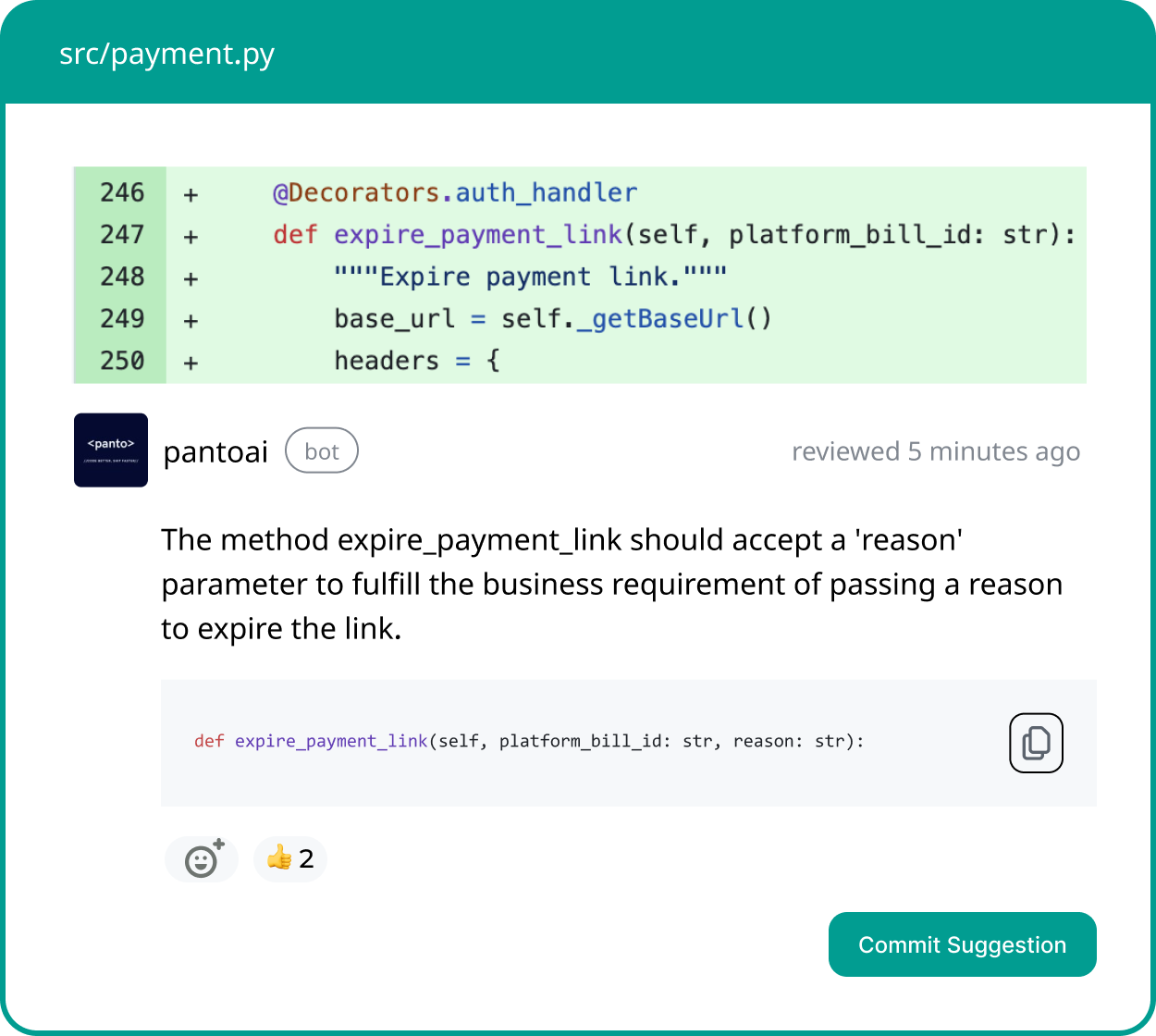

We stayed extremely close to native version control workflows.

We were so obsessed with reducing noise that we surfaced only the most relevant comments inline, as actual code suggestions. Everything else was grouped together, referenced by line number, and pushed to the bottom.

The goal was simple.

Never break flow. Never waste attention.
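The layout rule can be sketched as a simple partition. The following are assumptions, not a description of Panto's code. Each finding carries a relevance score from some upstream ranking. "Most relevant" means a score of at least 0.8. Only findings with a concrete replacement can be posted inline as suggestions. `Finding` and `layout_review` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Finding:
    path: str
    line: int
    message: str
    relevance: float                  # 0..1, from whatever upstream ranking is used
    suggestion: Optional[str] = None  # replacement code, if one was proposed


def layout_review(
    findings: List[Finding], inline_threshold: float = 0.8
) -> Tuple[List[Finding], str]:
    """Split findings into inline suggestions and one grouped summary."""
    inline: List[Finding] = []
    rest: List[Finding] = []
    for f in findings:
        # Only confident findings that come with a concrete code change go inline.
        if f.relevance >= inline_threshold and f.suggestion is not None:
            inline.append(f)
        else:
            rest.append(f)
    # Everything else becomes one list at the bottom, ordered by file and line.
    rest.sort(key=lambda f: (f.path, f.line))
    summary = "\n".join(f"- {f.path} line {f.line}: {f.message}" for f in rest)
    return inline, summary
```

Requiring a concrete replacement keeps inline comments actionable. Everything else waits in a single grouped list, so it never interrupts the diff.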

Our Niche: Signal Over Everything

We built our niche on noise reduction.

A high signal-to-noise ratio became the core of the product. We doubled down on it.

Today, this shows up clearly in retention. It is also one of the strongest metrics during customer evaluations when teams compare us with other tools.

Every player in this space has picked their own niche.

CodeRabbit and Greptile leaned heavily into distribution. The former through performance marketing and ads. The latter through SEO, amplified by the YC halo. Others are still experimenting. Some have already shut down.

RIP Ellipsis.

Where This Is Headed

With new entrants launching almost every day, the moat question applies to everyone.

This market will eventually converge. When it does, it will likely end in a brutal pricing war.

Until then, differentiation lives in the details.

And in AI code review, details mostly look like one thing.

Less noise. More trust.