AI test case generation is the use of artificial intelligence to automatically create, maintain, and optimize software test cases from inputs such as requirements, user stories, code changes, and production signals.

This approach reduces manual effort, increases coverage, and accelerates feedback in modern CI/CD pipelines. This guide walks you through what AI test case generation is, how it works, and how to implement it effectively.

Why AI Test Case Generation Matters Now

As software delivery cycles shorten, traditional QA test authoring has become a bottleneck. Manual test case creation struggles with three systemic problems.

- Scale. Manually written coverage does not keep pace as product complexity grows.

- Maintenance. UI and API changes break tests faster than teams can fix them.

- Coverage gaps. Edge cases and negative paths are frequently missed.

AI test case generation addresses these constraints by shifting test creation from manual design to automated inference. Instead of writing tests step by step, teams describe behavior and intent, and AI systems generate executable test suites that evolve with the application.

How AI Test Case Generation Works

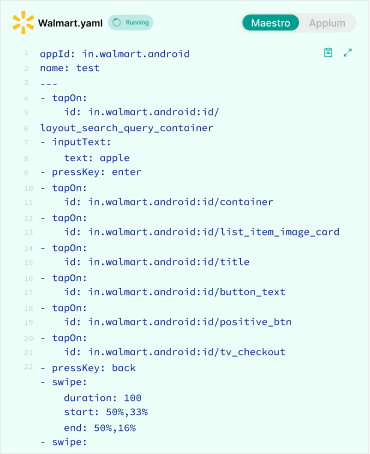

Modern AI testing systems follow a multi-stage pipeline designed to translate intent into executable coverage.

Stage 1: Requirements Ingestion and Parsing

AI systems ingest structured and unstructured inputs including requirement documents, user stories, acceptance criteria, API schemas, and sometimes production telemetry.

Natural language processing models extract actors, actions, constraints, and expected outcomes. For example, a statement like “users should see an error for invalid email formats” becomes a structured test intent with trigger, action, and assertion.
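To make Stage 1 concrete, here is a minimal Python sketch that turns the example sentence into a structured intent. It assumes a single, hypothetical sentence template matched with a regular expression; the names `StructuredIntent` and `parse_requirement` are illustrative, and real systems rely on trained NLP models rather than one pattern.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class StructuredIntent:
    actor: str
    trigger: str      # the condition that starts the scenario
    action: str       # what the generated test will do
    assertion: str    # what the generated test will check


# Hypothetical template for sentences shaped like
# "<actor> should see <outcome> for <condition>".
REQUIREMENT_PATTERN = re.compile(
    r"^(?P<actor>\w+) should see (?P<outcome>.+?) for (?P<condition>.+?)\.?$",
    re.IGNORECASE,
)


def parse_requirement(text: str) -> Optional[StructuredIntent]:
    """Map one requirement sentence to a trigger/action/assertion intent."""
    match = REQUIREMENT_PATTERN.match(text.strip())
    if match is None:
        # A production system would hand unmatched sentences to an NLP model.
        return None
    return StructuredIntent(
        actor=match["actor"],
        trigger=match["condition"],
        action=f"submit input with {match['condition']}",
        assertion=f"{match['outcome']} is displayed",
    )


if __name__ == "__main__":
    print(parse_requirement("Users should see an error for invalid email formats"))
```

Sentences that do not fit the template return `None`, which is where a sketch like this would defer to a learned model.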

Stage 2: Semantic Embeddings and Context Mapping

Parsed inputs are converted into vector embeddings that capture semantic meaning rather than literal text. This allows the system to recognize equivalent scenarios described differently across documents.

Requirements such as “reject weak passwords” and “enforce password strength” are treated as the same underlying behavior.
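The grouping step can be sketched with cosine similarity over embedding vectors. The three-dimensional vectors below are invented for illustration (a real sentence-embedding model produces hundreds of dimensions), and the 0.9 threshold and greedy grouping rule are assumptions, not a documented algorithm from any specific tool.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def group_equivalent(embeddings: dict[str, list[float]],
                     threshold: float = 0.9) -> list[list[str]]:
    """Greedy grouping: each requirement joins the first group whose
    representative (its first member) is at least `threshold` similar."""
    groups: list[list[str]] = []
    for text, vec in embeddings.items():
        for group in groups:
            if cosine_similarity(vec, embeddings[group[0]]) >= threshold:
                group.append(text)
                break
        else:
            groups.append([text])
    return groups


# Toy vectors made up for this example, not output from a real model.
TOY_EMBEDDINGS = {
    "reject weak passwords": [0.90, 0.10, 0.05],
    "enforce password strength": [0.85, 0.15, 0.10],
    "show error for invalid email": [0.05, 0.95, 0.20],
}

if __name__ == "__main__":
    print(group_equivalent(TOY_EMBEDDINGS))
```

With these toy vectors the two password requirements land in one group and the email requirement in another, so downstream stages would generate one set of scenarios for the shared password behavior.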

Stage 3: Scenario Expansion and Path Prediction

Using historical test data and learned patterns, the system predicts likely user paths and failure modes.

This stage generates:

- Positive scenarios

- Negative scenarios

- Boundary conditions

- Security and abuse cases

- Environmental failures

A single login requirement may expand into dozens of scenarios without human enumeration.
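As a rough illustration of scenario expansion, the sketch below expands a single hypothetical rule ("usernames are 3 to 20 alphanumeric characters") into positive, negative, boundary, and security scenarios. The limits, the candidate inputs, and the `is_valid_username` oracle are assumptions made for the example; environmental failures are left out because they depend on infrastructure rather than input values.

```python
import string
from dataclasses import dataclass

ALLOWED = set(string.ascii_letters + string.digits)  # assumed character set


@dataclass(frozen=True)
class Scenario:
    category: str
    value: str
    should_accept: bool


def is_valid_username(value: str, min_len: int, max_len: int) -> bool:
    """Reference oracle derived from the (hypothetical) rule itself."""
    return min_len <= len(value) <= max_len and set(value) <= ALLOWED


def expand_username_rule(min_len: int = 3, max_len: int = 20) -> list[Scenario]:
    candidates = {
        "positive": ["alice", "user42"],
        "negative": ["", "bad name!"],
        # Classic boundary values: just below, at, and just above each limit.
        "boundary": ["a" * n for n in (min_len - 1, min_len, max_len, max_len + 1)],
        "security": ["' OR '1'='1", "<script>alert(1)</script>"],
    }
    return [
        Scenario(category, value, is_valid_username(value, min_len, max_len))
        for category, values in candidates.items()
        for value in values
    ]


if __name__ == "__main__":
    for scenario in expand_username_rule():
        print(scenario)
```

One field already yields ten scenarios here; applying the same expansion to every field and step of a login flow is how the count reaches dozens.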

Stage 4: Test Case Construction

Scenarios are converted into structured test cases with:

- Preconditions

- Step sequences

- Test data

- Expected outcomes

These are formatted for specific automation frameworks such as Appium, Playwright, or API runners.

Stage 5: Continuous Learning and Maintenance

Post-execution feedback informs future test case generation. Tests that consistently fail due to application evolution are adapted automatically, while low-value or redundant tests are consolidated.
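The two maintenance signals above can be approximated with simple heuristics, sketched below. Both rules are assumptions for illustration: tests count as redundant when their normalized step lists are identical, and a test is flagged for adaptation when it passed at some point and then failed on each of its last few runs. Commercial tools use richer signals than these.

```python
from collections import defaultdict


def find_redundant(tests: dict[str, tuple[str, ...]]) -> dict[str, list[str]]:
    """Map each canonical test to duplicates that could be merged into it.

    Duplicates here means identical step lists after trimming whitespace
    and lowercasing (a deliberately simple rule).
    """
    by_steps: dict[tuple[str, ...], list[str]] = defaultdict(list)
    for name, steps in tests.items():
        key = tuple(step.strip().lower() for step in steps)
        by_steps[key].append(name)
    return {names[0]: names[1:] for names in by_steps.values() if len(names) > 1}


def needs_adaptation(history: dict[str, list[bool]], window: int = 3) -> list[str]:
    """Flag tests that passed earlier but failed on each of their last `window` runs.

    `history` maps a test name to its results, oldest first (True = passed).
    The pass-then-fail pattern is a hint that the application changed, not proof.
    """
    flagged = []
    for name, runs in history.items():
        recent, earlier = runs[-window:], runs[:-window]
        if len(recent) == window and not any(recent) and any(earlier):
            flagged.append(name)
    return flagged
```

Requiring an earlier pass keeps brand-new tests that have never worked out of the adaptation queue, since those more likely need human review.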

Self-Healing Automation Explained

One of the most impactful capabilities of AI-powered testing is self-healing automation.

Traditional automation fails when UI identifiers or DOM structures change. AI-based systems instead evaluate multiple signals, including visual context, semantic labels, and element relationships.

When a locator changes, the system:

- Detects the mismatch

- Searches for a functionally equivalent element

- Updates the test definition

- Records the adaptation for future inference
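The four steps above can be sketched in Python over a simplified DOM modeled as a list of attribute dictionaries. This is a hypothetical illustration: it uses only semantic signals (role, label, visible text), not visual context, and the `min_score` threshold of two matching signals is an assumption.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ElementRef:
    element_id: str   # primary locator recorded when the test was generated
    role: str         # semantic signals stored alongside the locator
    label: str
    text: str


@dataclass
class HealingLog:
    entries: list[tuple[str, str]] = field(default_factory=list)  # (old id, new id)


def resolve(ref: ElementRef, dom: list[dict[str, str]], log: HealingLog,
            min_score: int = 2) -> Optional[dict[str, str]]:
    # Try the recorded locator first.
    for element in dom:
        if element.get("id") == ref.element_id:
            return element

    # Step 1, mismatch detected. Step 2, score each element by matching signals.
    def score(element: dict[str, str]) -> int:
        return sum(
            element.get(key) == expected
            for key, expected in (("role", ref.role), ("label", ref.label), ("text", ref.text))
        )

    best = max(dom, key=score, default=None)
    if best is None or score(best) < min_score:
        return None  # no functionally equivalent element; let the test fail

    # Step 4, record the adaptation; step 3, update the test definition.
    log.entries.append((ref.element_id, best["id"]))
    ref.element_id = best["id"]
    return best
```

Returning `None` below the threshold matters: healing to a weak match would hide a real regression, so the sketch prefers an honest failure.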

Some teams adopting self-healing report reductions in maintenance effort of 70 to 85 percent; these figures come from operational observations rather than controlled studies.

Edge Case Discovery Through AI

Edge cases account for a disproportionate number of production failures but are rarely enumerated manually.

AI systems analyze:

- Historical defect patterns

- Production logs

- User behavior anomalies

This enables targeted generation of high risk scenarios such as oversized inputs, race conditions, permission misconfigurations, and delayed responses.
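One simple way to turn historical defect patterns into generation priorities is a severity-weighted count per scenario category, sketched below. The weights and the `(category, severity)` input format are hypothetical, and the sketch ignores the production-log and behavior-anomaly signals listed above.

```python
from collections import Counter
from typing import Optional

# Hypothetical weights: how much one past defect of each severity adds to a category's risk.
SEVERITY_WEIGHT = {"low": 1, "medium": 3, "high": 5}


def rank_risk(defects: list[tuple[str, str]],
              top_k: Optional[int] = None) -> list[tuple[str, int]]:
    """Rank scenario categories by severity-weighted historical defect count.

    `defects` holds (category, severity) pairs, for example exported from an
    issue tracker. Returns (category, score) pairs, highest risk first.
    """
    scores: Counter = Counter()
    for category, severity in defects:
        scores[category] += SEVERITY_WEIGHT[severity]
    return scores.most_common(top_k)


if __name__ == "__main__":
    past_defects = [
        ("oversized input", "high"),
        ("race condition", "high"),
        ("oversized input", "medium"),
        ("delayed response", "low"),
        ("permission misconfiguration", "medium"),
    ]
    print(rank_risk(past_defects, top_k=2))
```

A generator could then spend most of its scenario budget on the top-ranked categories instead of spreading it evenly.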

Compared with manual QA, AI-driven suites tend to surface more boundary and negative cases, and to surface them earlier in the lifecycle.

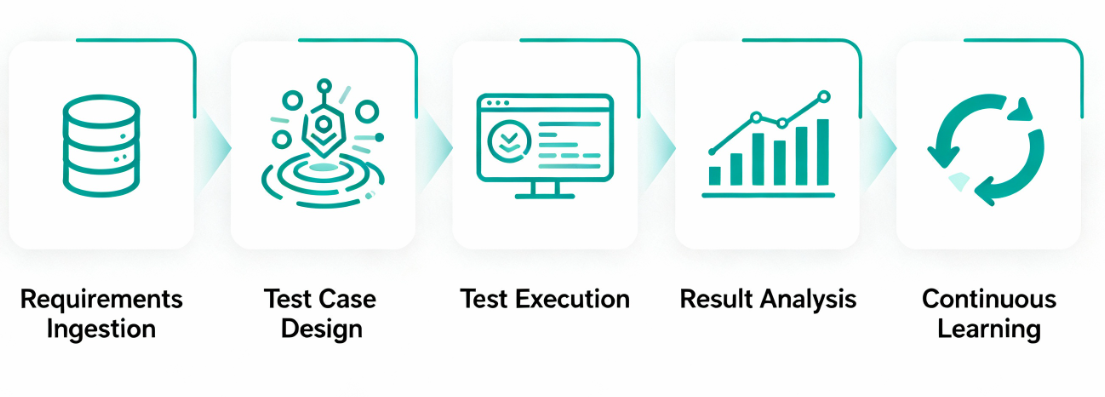

Benefits of AI Test Case Generation

Faster Test Creation

Some teams report 40 to 60 percent reductions in time spent authoring tests when natural language descriptions are converted directly into executable suites.

Improved Coverage

Systematic generation across positive, negative, and boundary dimensions reduces blind spots.

Lower Maintenance Cost

Self-healing and adaptive updates substantially reduce test breakage caused by routine UI or API changes.

Earlier Defect Detection

Continuous generation and execution surface defects closer to the code changes that introduced them, which lowers both the cost of fixes and release risk.

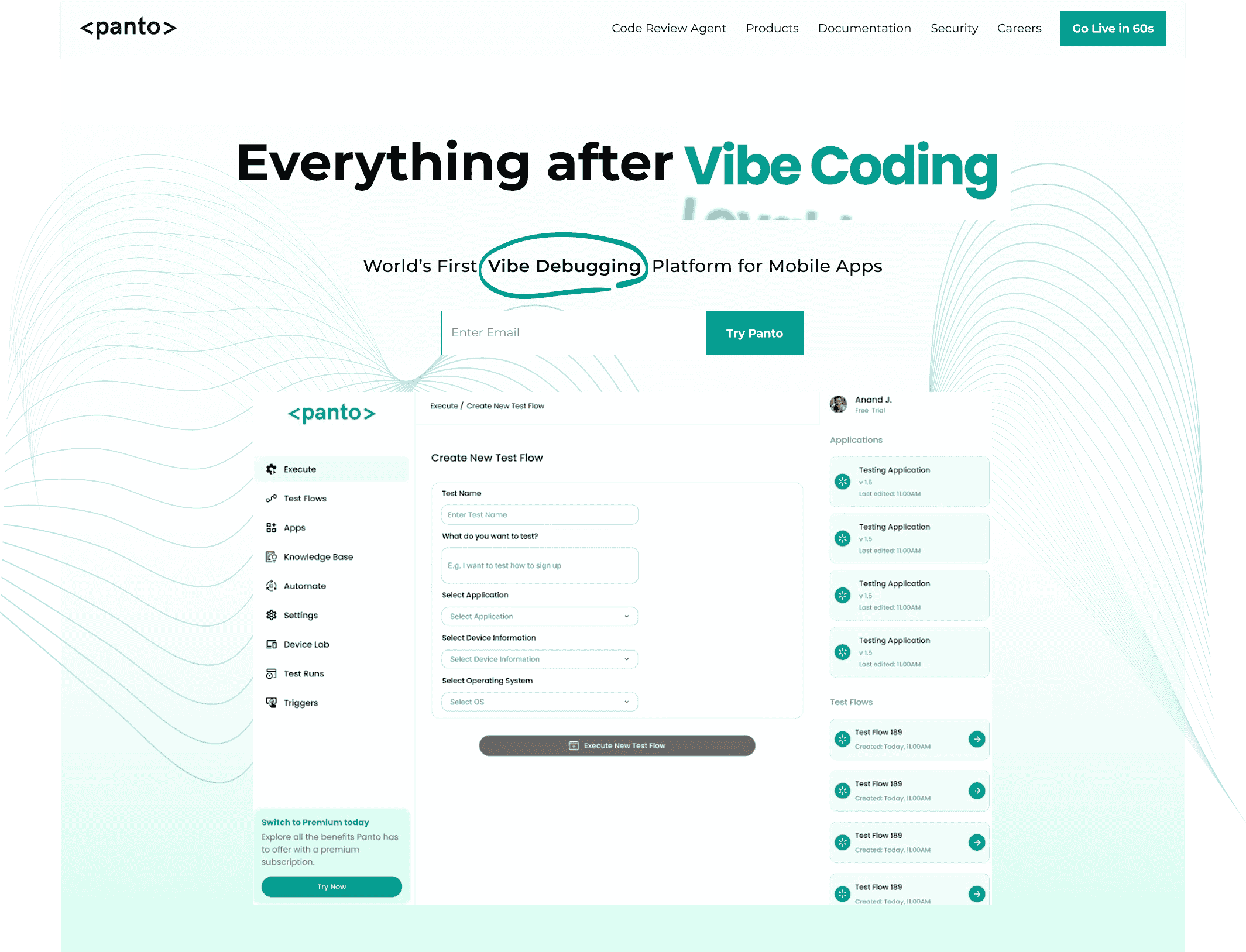


10 Leading AI Test Case Generation Tools

1. Panto AI

Panto AI focuses on mobile-first testing with an emphasis on behavioral debugging. It combines AI-driven test generation with dynamic code review signals, enabling tighter feedback loops between development and QA.

Best suited for mobile teams that want testing informed by code context rather than surface-level assertions.

2. TestSprite

TestSprite emphasizes autonomous end-to-end testing and IDE-native workflows. Its MCP (Model Context Protocol) integration allows tests to be orchestrated directly from developer tools.

Best suited for teams prioritizing autonomous E2E coverage within developer environments.

3. Testomat.io

Testomat.io blends AI-assisted test creation with analytics focused on flaky tests and historical failure patterns.

Best suited for teams scaling from manual to automated testing with an emphasis on analytics.

4. Katalon Studio

Katalon provides a broad enterprise platform with AI-assisted authoring, debugging driven by production signals, and visual regression validation.

Best suited for large agile teams requiring end-to-end tooling.

5. Mabl

Mabl is an AI-native testing platform built for CI/CD pipelines. It emphasizes rapid test creation and low maintenance.

Best suited for teams standardizing testing across fast moving release cycles.

6. TestRigor

TestRigor allows tests to be written in plain English without selectors. This reduces technical barriers for non-SDET roles.

Best suited for teams lacking automation expertise.

7. Tricentis Tosca

Tosca focuses on model-based intelligent testing and vision AI to handle complex enterprise applications.

Best suited for large organizations with heterogeneous systems.

8. ACCELQ

ACCELQ offers browser based, codeless automation across multiple application types.

Best suited for teams prioritizing ease of adoption.

9. Testim

Testim specializes in stable locators and test reuse through AI learning.

Best suited for web and mobile teams focused on reliability.

10. BrowserStack

BrowserStack integrates AI analytics into its large scale device and cross-browser testing infrastructure.

Best suited for organizations operating at significant scale.

AI Test Case Generation Tool Comparison Summary

| Tool | Best For | Key Strength | Maintenance | Learning Curve |
|---|---|---|---|---|
| Panto AI | Mobile QA | Behavioral debugging | Very Low | Easy |
| TestSprite | Autonomous E2E | IDE integration | Low | Easy |
| Testomat.io | Scaling QA | Analytics | Medium | Moderate |
| Katalon | Enterprise teams | Platform breadth | Medium | Moderate |
| Mabl | CI-driven teams | AI native | Low | Easy |

How to Choose the Right Tool

- Choose Panto AI if mobile behavior and code context matter.

- Choose TestSprite if autonomy and IDE workflows are primary.

- Choose Tosca or Katalon if enterprise complexity dominates.

- Choose TestRigor or ACCELQ for non-technical teams.

Future of AI Test Case Generation

AI testing is moving toward fully autonomous quality engineering. Systems are beginning to design test strategies, prioritize risk, and adapt execution without manual orchestration.

The long term shift is from reactive bug detection to proactive quality assurance driven by intelligence rather than scripts.

Final Takeaways

AI test case generation is no longer experimental. It is becoming a foundational capability for teams shipping software at scale.

The value is not only speed, but resilience, coverage, and learning.

If you are evaluating AI test case generation tools, start by validating how generated tests adapt over time, not how quickly they are created.