Ensuring tests behave the same on a developer’s machine and in a CI pipeline is a perennial challenge. Often a suite runs flawlessly locally but inexplicably fails under CI.

This discrepancy breeds frustration and distrust. In fact, a GitLab survey found that 36% of developers delay releases at least monthly due to test failures.

For QA engineers and leads, identifying and addressing these discrepancies early is crucial to maintaining confidence in automated QA testing. This post explores the key causes of these CI-vs-local issues and outlines best practices to avoid them.

Common Causes of CI vs Local Test Failures

Environment & Configuration Differences

One main reason is environment drift. Local machines and CI runners often differ in OS, tools, and settings. For example, the default file systems on Windows and macOS are case-insensitive, while Linux CI agents typically use case-sensitive ones.

A path like ./Config/settings.json might work locally but fail in CI due to letter casing. Keyboard shortcuts also differ (Ctrl vs Cmd). CI jobs typically run in headless mode, and browsers may be different versions or configured differently.
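
A casing mismatch like this can be caught before CI does. The sketch below is one possible check, not a standard tool: the helper name find_case_mismatch is hypothetical, and it assumes the path is relative to a project root you pass in.

```python
from pathlib import Path
from typing import Optional


def find_case_mismatch(root: Path, relative: str) -> Optional[str]:
    """Report the first path component whose casing differs from the disk.

    Returns None when every component matches exactly, or when a component
    is missing altogether (that is a different problem).
    """
    current = root
    for part in Path(relative).parts:
        # Real directory entries, with the casing stored on disk.
        names = [p.name for p in current.iterdir()] if current.is_dir() else []
        if part not in names:
            for name in names:
                if name.lower() == part.lower():
                    return f"{part!r} requested, but disk has {name!r}"
            return None
        current = current / part
    return None
```

Because it compares against the names the directory listing returns, the check behaves the same on case-insensitive and case-sensitive file systems, so it can run on a developer laptop.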

CI pipelines expose these hidden assumptions. Local builds may hide “cached dependencies, implicit configuration, or leftover state that CI environments do not”. In short, CI runs on a clean slate and surfaces anything undeclared.

For instance, an expected environment variable might be present on a local workstation but undefined in CI, immediately causing a test to fail.

- OS Variations: File systems and keyboard modifiers vary by OS (e.g. Linux file names are case-sensitive; shortcuts use Ctrl on Windows/Linux but Cmd on macOS).

- Browser & Mode: CI often uses headless or containerized browsers, which can render and focus elements differently.

- Dependency Versions: Without a committed lockfile, CI resolves dependencies from scratch and may install a newer, untested release than the one in your local build. The resulting failures appear only on CI.

- Environment Variables: Locally you might load .env files or shell vars; in CI you must explicitly define every needed variable (e.g. DATABASE_URL, API keys). Missing vars cause immediate failures. Failing fast with a descriptive message (e.g. “Env var XYZ is missing”) helps pinpoint the issue immediately.

Anything assumed locally (cached deps, pre-set configs, lingering state) can break a CI build. Ensuring parity between local and CI (same OS, language versions, tools) mitigates many of these issues.

Test State and Isolation

Tests should be self-contained. If one test leaves data behind, another test may pass locally (assuming that data) but fail in a clean CI environment.

For example, a test that logs in with an account created by an earlier test will fail if the CI run starts with an empty database or runs the tests in a different order. Reliable tests must be isolated, idempotent, and order-independent. Best practices include resetting any shared state between tests.

- Database and Cache: Initialize or clear test data before each run. Don’t rely on pre-existing records or cache. For example, if a test logs in a user, ensure it creates a fresh account each run.

- File System State: Remove any test-generated files or use unique temp paths so each run starts fresh. Don’t assume a file created in one test still exists in the next.

- External Services: Stub or mock third-party APIs (like email or payment gateways) so CI isn’t blocked by external downtime. This way, your CI runs aren’t affected by services outside your control.

By resetting state between tests, each run becomes predictable. Enforcing isolation often eliminates many CI/local failures – it ensures that running QA tests in isolation (as CI does) yields the same result as a full suite run.
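
As a minimal sketch of that reset, the example below uses Python's unittest with a throwaway directory and an in-memory SQLite database per test. The class name, table, and email address are made up for illustration; a real suite would reset whatever database and storage it actually uses.

```python
import sqlite3
import tempfile
import unittest
from pathlib import Path


class IsolatedAccountTests(unittest.TestCase):
    def setUp(self):
        # Fresh scratch directory and fresh database for every single test.
        self._tmp = tempfile.TemporaryDirectory()
        self.workdir = Path(self._tmp.name)
        self.db = sqlite3.connect(":memory:")
        self.db.execute("CREATE TABLE users (email TEXT PRIMARY KEY)")

    def tearDown(self):
        # Nothing created by a test survives into the next one.
        self.db.close()
        self._tmp.cleanup()

    def test_create_user(self):
        self.db.execute("INSERT INTO users VALUES (?)", ("a@example.test",))
        count = self.db.execute("SELECT COUNT(*) FROM users").fetchone()[0]
        self.assertEqual(count, 1)

    def test_starts_empty(self):
        # Passes in any order because test_create_user leaks no state.
        count = self.db.execute("SELECT COUNT(*) FROM users").fetchone()[0]
        self.assertEqual(count, 0)
        self.assertEqual(list(self.workdir.iterdir()), [])
```

Both tests pass whether they run alone, together, or in reverse order, which is the property a clean CI run depends on.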

Timing Issues and Flaky Tests

Timing differences can break tests. CI agents often run on shared or resource-constrained hosts, so pages may render and scripts may run noticeably slower (or, on a fast Linux host, quicker) than on a developer’s machine. Tests using fixed sleeps can pass locally but fail in CI if the timing window differs.

Instead, use explicit waits for conditions (e.g. wait until an element is visible). Automation frameworks like Selenium, Cypress, or Playwright offer waiting utilities that adapt to actual load times.

In practice, a test that passes locally but fails intermittently on CI likely has a hidden race condition.

Flaky tests – those that sometimes pass and sometimes fail without code changes – often surface in CI. Resource contention, parallel execution, or network variability can cause intermittent failures.

Treat CI failures as signals to eliminate flaky patterns: robust tests should survive such variability and not rely on specific timing or resource availability.

Best Practices for Reliable CI Testing

Commit Lockfiles and Pin Dependencies

Use lockfiles (e.g. package-lock.json, yarn.lock, Pipfile.lock) to pin exact dependency versions.

These files “record the exact versions of every dependency,” ensuring the CI install matches your local setup. For example, use npm ci to install exactly from package-lock.json. This prevents surprises from upstream updates.

Consistent dependencies are foundational for stable tests: without them, even a minor library update could break a CI build.
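
A pipeline can also refuse to run when a lockfile is absent. The following is a rough sketch of such a guard; the function name missing_lockfiles and the manifest-to-lockfile mapping are assumptions for illustration and are not exhaustive.

```python
from pathlib import Path
from typing import List

# Manifest file -> lockfiles that would pin it (illustrative mapping only).
LOCKFILES = {
    "package.json": ("package-lock.json", "yarn.lock"),
    "Pipfile": ("Pipfile.lock",),
}


def missing_lockfiles(project_dir: Path) -> List[str]:
    """Return the manifests in project_dir that have no lockfile next to them."""
    missing = []
    for manifest, locks in LOCKFILES.items():
        has_manifest = (project_dir / manifest).is_file()
        has_lock = any((project_dir / lock).is_file() for lock in locks)
        if has_manifest and not has_lock:
            missing.append(manifest)
    return missing
```

Calling this at the start of a job and exiting non-zero when the list is non-empty turns a silent version drift into an explicit failure. It only checks that the file exists, not that it is committed or up to date.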

Align CI and Local Environments

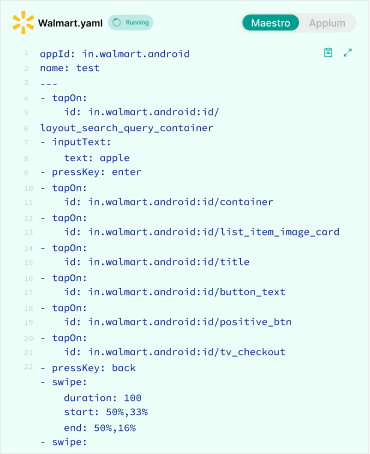

Make local and CI environments as similar as possible. One strategy is to use Docker or containers locally with the same image as CI. Specify the OS, language runtime, and automation tools explicitly.

For UI tests, fix the browser version and window size to match CI’s configuration. Automate WebDriver management so the correct browser driver is always used.

In short, treat the CI setup as the source of truth: if CI uses Linux and Node v16, run your local tests under those conditions too. Many teams even share the Docker image used in CI so everyone tests under the same conditions.

Cross-platform details matter too: path separators (\ vs /) and end-of-line conventions (CRLF vs LF) differ between Windows and Linux and can cause tests to fail on CI. Ensuring the same language and OS versions locally and on CI prevents these subtle errors.
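
Where full parity is not possible, tests can normalise these details before comparing values. This is a small sketch with hypothetical helper names (to_posix, normalize_newlines). It assumes backslashes in your paths are always separators, which holds for Windows-style paths but not for every POSIX file name.

```python
from pathlib import PureWindowsPath


def to_posix(path_text: str) -> str:
    """Rewrite a path that uses either separator into forward-slash form."""
    # PureWindowsPath accepts both "\\" and "/" as separators on any OS.
    return PureWindowsPath(path_text).as_posix()


def normalize_newlines(text: str) -> str:
    """Convert CRLF and bare CR line endings to LF."""
    return text.replace("\r\n", "\n").replace("\r", "\n")
```

Comparing to_posix(actual) with to_posix(expected), or normalising file contents before a snapshot comparison, keeps an assertion from depending on the machine that produced the value.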

Configure CI Environment Variables

CI pipelines do not inherit your machine’s shell environment. Explicitly declare all needed variables in the CI config (e.g. pipeline YAML or project settings). This includes database URLs, feature flags, and API credentials.

For example, set NODE_ENV=test or API_KEY in the CI environment. Use the CI’s secure storage for secrets. If a required variable is missing, the build should fail fast with a clear error.

By defining these variables in CI, you ensure tests have the correct configuration to run in any environment. A descriptive message (e.g. “Env var XYZ is missing”) points straight to the setting that needs to be added.
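
One way to get that fail-fast behaviour is a small check that runs before any test does. The sketch below is illustrative: require_env and MissingEnvError are hypothetical names, and the variable names in the usage note are only examples.

```python
import os
from typing import Dict, Iterable, Mapping, Optional


class MissingEnvError(RuntimeError):
    """Raised when one or more required environment variables are absent."""


def require_env(names: Iterable[str],
                env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Return the requested variables, or raise naming every missing one."""
    source = os.environ if env is None else env
    wanted = list(names)
    # Treat unset and empty values the same way: both are unusable.
    missing = sorted(name for name in wanted if not source.get(name))
    if missing:
        raise MissingEnvError("Missing required env var(s): " + ", ".join(missing))
    return {name: source[name] for name in wanted}
```

Calling require_env(["DATABASE_URL", "API_KEY"]) in a session-level setup hook reports every missing variable in one message, rather than one obscure failure per test.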

Write Robust, Isolated Tests

Reliable CI pipelines depend on tests that behave consistently, regardless of execution order or environment.

When tests share state, rely on leftover data, or interact unpredictably with external systems, failures appear only in clean CI environments.

Designing fully isolated, self-sufficient tests is therefore essential for long-term automation stability and trust in CI results.

- Each test should set up and clean up its own data.

- If a test requires a user account, it should create the account during setup and delete it during teardown.

- Use setup/teardown hooks to reset shared state, such as truncating database tables or clearing caches.

- Avoid inter-test dependencies—tests must not rely on data created by previous tests.

- In parallel CI execution, isolated tests prevent conflicts over shared resources.

- When external systems are required (e.g., email or payment services), use mocks or dummy endpoints to avoid failures caused by third-party outages.

- Generate randomized or timestamped test data to prevent collisions when tests run simultaneously.

- Some frameworks support transactions or automatic rollbacks, ensuring the database is clean after each test run.
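
Two of the points above, collision-free test data and automatic rollback, can be sketched with the standard library alone. The helper names unique_email and run_in_rollback are hypothetical, and SQLite stands in for whatever database the suite really targets.

```python
import sqlite3
import uuid
from typing import Callable


def unique_email(prefix: str = "qa") -> str:
    """Build an address that will not collide across parallel workers."""
    return f"{prefix}-{uuid.uuid4().hex}@example.test"


def run_in_rollback(conn: sqlite3.Connection,
                    action: Callable[[sqlite3.Connection], object]) -> None:
    """Run action(conn), then discard whatever it wrote, even on failure."""
    try:
        action(conn)
    finally:
        # Undo the uncommitted writes so the next test sees a clean table.
        conn.rollback()
```

The rollback only helps if the code under test does not commit on its own; when it does, truncating tables in teardown is the more dependable option.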

Use Explicit Waits and Avoid Fragile Patterns

Replace fixed timeouts with condition-based waits. For example, wait until a button is clickable rather than sleeping for a set time.

Frameworks like Playwright or Cypress provide auto-waiting for elements by default. Also disable or wait out any animations and loading spinners.

For example, Cypress’s built-in retry logic re-runs queries and their assertions until they pass or a timeout expires, often eliminating the need for manual waits. These changes drastically reduce timing-related failures and make tests more stable across environments.
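
When a framework does not provide a suitable wait, the same idea is easy to hand-roll. The sketch below is a generic polling helper, not any framework's API; the name wait_until and its default timeout and interval are assumptions.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool],
               timeout: float = 10.0,
               interval: float = 0.1) -> None:
    """Poll condition() until it returns True or timeout seconds elapse."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} s")
        time.sleep(interval)
```

Unlike a fixed sleep, this returns as soon as the condition holds on a fast machine and keeps waiting, up to the timeout, on a slow one.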

Monitor Test Stability with Metrics

Track test stability over time using QA metrics. Important metrics include Flaky Rate (percentage of tests that fail on the first attempt but pass on retry) and Pass Rate Trend (overall suite success rate over time).

For instance, a rising Flaky Rate indicates growing instability. Maintain a dashboard or CI report to visualize these trends. One approach is to log every attempt of each test and calculate how often it passes only on a retry; failure rate and a stability score can quantify flakiness further.

| Metric | What it measures | Why it matters |
|---|---|---|
| Flaky Rate | % of tests that fail initially but pass on retry | High values signal many unstable tests. |
| Pass Rate Trend | Daily success percentage of the entire suite | Shows if test reliability is improving or degrading. |
| Error Variety | Number of unique failure messages per test | Many unique errors suggest nondeterministic failures. |
| Average Execution Time | Mean duration of the full test suite | Spikes can indicate environment or performance issues. |

Maintaining these metrics helps QA leads detect problems early and ensure the suite’s reliability. Teams may set thresholds (e.g. Flaky Rate > 1%) to automatically flag issues.

By focusing on the most unstable tests (via error logs or metrics), you can prioritize fixes and gradually improve overall confidence.
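
To make the Flaky Rate definition concrete, here is a minimal calculation over recorded attempts. The Outcome record and its field names are hypothetical; real data would come from your CI's test reports.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class Outcome:
    name: str
    attempts: Tuple[bool, ...]  # pass/fail per attempt, in execution order


def is_flaky(outcome: Outcome) -> bool:
    """A test is flaky when it fails first and passes on some later retry."""
    return (len(outcome.attempts) > 1
            and not outcome.attempts[0]
            and any(outcome.attempts[1:]))


def flaky_rate(outcomes: Iterable[Outcome]) -> float:
    """Percentage of tests that failed initially but passed on retry."""
    items = list(outcomes)
    if not items:
        return 0.0
    return 100.0 * sum(is_flaky(o) for o in items) / len(items)
```

A test that fails on every attempt counts as a plain failure, not as flaky, which keeps the metric aligned with the table's definition. Comparing the result against a threshold such as 1% gives the automatic flag described above.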

Reproduce CI Locally for Debugging

Failures that appear only in CI can feel unpredictable, but they are often reproducible with the right approach.

The key is to replicate the CI environment locally so differences in OS, dependencies, or configuration become visible. Once parity is achieved, debugging becomes faster, clearer, and far more reliable.

- Run tests inside the same Docker container or VM image used in the CI pipeline.

- Clear local caches before execution (e.g., run npm ci, delete node_modules, remove build artifacts).

- Execute tests in full isolation to match CI’s clean environment behavior.

- Use CI features like SSH access to the build environment for real-time debugging when available.

- Ensure local and CI setups match in OS, dependency versions, and configuration variables.

- Recreate CI steps locally using docker-compose or CI-provided local CLI tools to reproduce failures quickly.
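
The first step in that list can be scripted so everyone launches the container the same way. This sketch only builds the docker run argument list; the function name ci_repro_command, the /workspace mount point, and the image name in the usage note are assumptions, so substitute the image your pipeline actually uses.

```python
from pathlib import Path
from typing import List, Mapping, Optional, Sequence


def ci_repro_command(image: str,
                     project_dir: str,
                     test_command: Sequence[str],
                     env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Build a `docker run` argv that runs the tests inside the CI image."""
    host_dir = Path(project_dir).resolve()
    cmd = [
        "docker", "run", "--rm",          # remove the container afterwards
        "-v", f"{host_dir}:/workspace",   # mount the checkout (assumed path)
        "-w", "/workspace",               # run from the mounted checkout
    ]
    # Pass only explicitly listed variables, mirroring CI's clean environment.
    for name, value in sorted((env or {}).items()):
        cmd += ["-e", f"{name}={value}"]
    cmd.append(image)
    cmd.extend(test_command)
    return cmd
```

The result can be handed to subprocess.run(cmd, check=True), for example with ci_repro_command("registry.example.test/ci-node:16", ".", ["npm", "test"], {"NODE_ENV": "test"}). Because nothing from the local shell is forwarded implicitly, a missing variable shows up locally the same way it would in the pipeline.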

Roles and Responsibilities

- QA Engineers/SDETs: Diagnose and fix flaky tests. Use CI logs, screenshots, and stability metrics to identify root causes (timing issues, leftover state, etc.). Incorporate explicit waits and cleanup logic in the automation code.

- QA Leads/Managers: Set team standards and CI practices. Require locked dependencies and documented CI configs. Monitor test stability metrics and address systemic problems. Ensure CI infrastructure is consistent (e.g. shared container images, secret management).

- Junior QA Developers: Adopt these best practices from day one. When writing tests, consider CI conditions (clean environment, parallel runs). Pair with senior engineers to learn how to avoid CI-specific issues.

For QA teams, every passing CI build is a victory, and every failure is a prompt to refine the suite further. By following these practices, QA teams can turn CI from a mystery into a vital feedback tool.

In summary, automated tests often pass locally but fail in CI because CI reveals hidden flaws in dependencies, configuration, or test logic. Differences in environments, missing lockfiles, leftover state, and timing issues all play a part.

The solution is to fix the root causes: commit lockfiles, align and document environments, configure CI properly, write isolated tests, and use robust waiting strategies.

Embrace CI feedback to strengthen your tests and ensure your automation is trustworthy. Ultimately, a green CI build should be a hallmark of a robust test suite – if “your test only works on your machine,” it does not really work.