Vibe coding is a neat party trick. Type a prompt, watch an app appear, push a build to TestFlight, and call it shipped. The problem is what “shipped” actually means. In the real world, shipped apps get tapped, swiped, and cursed at.

Mobile apps run on hardware from 2016, on custom OEM skins, with flaky keyboards, and behind inconsistent permission dialogs. The magical demo works. The production user tears it apart.

This is the core problem with vibe coding today: it optimizes for speed of creation, not reliability in production. If you want a professional mobile app, prototypes do not matter. Real device QA does. No debate.

The ugly facts

Crash monitoring and release stability are not optional. If you do not test broadly across devices, you will only learn about your failures after users do.

Firebase Crashlytics is one of the standard ways mobile teams catch catastrophic regressions early. Use it. It reports crash-free statistics such as the percentage of crash-free users, and those numbers do not care about clever prompts.
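To make the number concrete: a crash-free users rate is the share of distinct users who hit no crash in a given window. The sketch below computes it, plus a per-session variant for comparison, from a hypothetical list of session records. `SessionEvent` and both functions are illustrative names, not Crashlytics APIs. Crashlytics computes its own figures from the events its SDK reports, so this only shows the arithmetic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SessionEvent:
    # Hypothetical session record: which user it belongs to and whether it crashed.
    user_id: str
    crashed: bool


def crash_free_users_pct(events: list[SessionEvent]) -> float:
    """Percentage of distinct users with no crashed session in the window."""
    users = {e.user_id for e in events}
    if not users:
        return 100.0
    crashed_users = {e.user_id for e in events if e.crashed}
    return 100.0 * (len(users) - len(crashed_users)) / len(users)


def crash_free_sessions_pct(events: list[SessionEvent]) -> float:
    """Percentage of sessions that ended without a crash."""
    if not events:
        return 100.0
    return 100.0 * sum(not e.crashed for e in events) / len(events)


# u1 has one healthy and one crashed session; u2..u4 never crash.
events = [
    SessionEvent("u1", False),
    SessionEvent("u1", True),
    SessionEvent("u2", False),
    SessionEvent("u3", False),
    SessionEvent("u4", False),
]
print(crash_free_users_pct(events))     # 75.0
print(crash_free_sessions_pct(events))  # 80.0
```

In the example data, one crashed session out of five drops the session rate to 80% but the user rate to 75%, because a user counts as affected if any of their sessions crashed.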

Device fragmentation is not a theory. It is the reason your “works on my phone” QA strategy is a death sentence.

Industry data from testing vendors shows that teams need broad platform coverage to avoid surprise failures on real devices. BrowserStack lays out the scale of device variability and why emulators alone do not cut it.

Users are brutal. Nearly half of installs end in an uninstall within weeks. That is not opinion. It is the market punishing broken first impressions.

If your flow fails on older Android versions or misplaces a button on a smaller screen, users do not file a polite bug report. They delete the app and move on.

Use uninstall benchmarks to understand this cost. AppsFlyer documents uninstall rates that make shipping broken builds an expensive decision.

Low-code automation and AI-assisted development are exploding, which means far more apps will get built than before. That is great for iteration. It is horrible if founders assume the generator handled QA.

The market growth numbers scream more volume, not more device testing. Forrester's data on low-code adoption trends explains why this problem is about to get a lot worse.

If you think running fuzzy AI agents across devices will fix this, think again. Industry reporting from continuous debugging vendors shows that the winning teams combine emulators and real devices, and they treat device coverage as a metric to manage, not a thing to hope for.

Sauce Labs customers test across dozens of devices on average, because that is what reveals real faults before customers do.
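Treating device coverage as a metric can start small. Here is a minimal sketch, assuming you can export the share of active users per device model and Android API level from your analytics. The `DeviceProfile` records and all numbers below are invented for illustration. The approach guarantees one device per required older API level, then adds the most-used remaining profiles until a coverage target is met.

```python
from typing import NamedTuple


class DeviceProfile(NamedTuple):
    model: str
    api_level: int     # Android API level of this model/OS combination
    user_share: float  # fraction of active users on it (hypothetical analytics export)


def coverage(devices: list[DeviceProfile]) -> float:
    """Fraction of active users whose model/OS combination is in the device set."""
    return sum(d.user_share for d in devices)


def select_device_matrix(
    profiles: list[DeviceProfile],
    target: float,
    required_api_levels: set[int],
) -> list[DeviceProfile]:
    selected: list[DeviceProfile] = []
    # 1. Guarantee the old-OS tail: the most-used profile on each required API level.
    for level in sorted(required_api_levels):
        candidates = [p for p in profiles if p.api_level == level]
        if candidates:
            selected.append(max(candidates, key=lambda p: p.user_share))
    # 2. Greedily add the most-used remaining profiles until the coverage target is met.
    for profile in sorted(profiles, key=lambda p: p.user_share, reverse=True):
        if coverage(selected) >= target:
            break
        if profile not in selected:
            selected.append(profile)
    return selected


# Invented example data; shares sum to 1.0.
profiles = [
    DeviceProfile("Pixel 8", 34, 0.30),
    DeviceProfile("Galaxy S23", 34, 0.25),
    DeviceProfile("Galaxy A14", 33, 0.20),
    DeviceProfile("Redmi Note 9", 29, 0.10),
    DeviceProfile("Galaxy S7", 26, 0.10),
    DeviceProfile("Moto G7", 28, 0.05),
]
matrix = select_device_matrix(profiles, target=0.85, required_api_levels={26, 28})
print([d.model for d in matrix])
# ['Galaxy S7', 'Moto G7', 'Pixel 8', 'Galaxy S23', 'Galaxy A14']
print(round(coverage(matrix), 2))  # 0.9
```

The greedy fill is a simple heuristic, not an optimal selection. Its value is that the coverage number is explicit, so it can be tracked and gated on release over release.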

The core truth, said plainly

Vibe coding creates surfaces. It does not create product reliability. The gap between surface and product is QA. Specifically, real device QA. If you are fine with demos, ship demos. If you want users to keep your app, you need deterministic verification on real phones.

Here are the exact failures I see in the wild, and why they happen

- Buttons that work in the preview but ignore taps on older Android builds. That is an input handling mismatch between runtimes.

- Layouts that collapse on low-DPI screens or odd aspect ratios. That is a selector or constraint failure that only shows up on certain screens.

- Auth or permission flows that hang because a system dialog behaved differently on the device than it did on the emulator. That is an OS edge case.

- Race conditions where network, UI rendering, and animations collide on slower hardware. That is a timing bug you will not catch in a warm demo.
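Timing bugs like the last one get worse when tests sleep for a fixed time and hope. A deterministic alternative is an explicit wait: poll a condition until it holds or a deadline passes. Selenium-based clients, including the Appium Python client, provide `WebDriverWait` for this. The sketch below is a generic, dependency-free version of the same idea. `wait_until`, `WaitTimeout`, and `FakeClock` are hypothetical names. Injecting the clock and sleep functions lets the wait logic itself be unit-tested without real delays.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class WaitTimeout(Exception):
    """Raised when a condition does not hold before the deadline."""


def wait_until(
    condition: Callable[[], Optional[T]],
    timeout: float = 10.0,
    interval: float = 0.25,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Poll `condition` until it returns a truthy value, or raise after `timeout` seconds."""
    deadline = clock() + timeout
    while True:
        result = condition()
        if result:
            return result
        if clock() >= deadline:
            raise WaitTimeout(f"condition not met within {timeout}s")
        sleep(interval)


class FakeClock:
    """Manual clock so the wait logic can be tested without real delays."""

    def __init__(self) -> None:
        self.now = 0.0

    def time(self) -> float:
        return self.now

    def sleep(self, seconds: float) -> None:
        self.now += seconds


# Simulate a button that only becomes tappable after 1 second of rendering.
clock = FakeClock()
button = wait_until(
    lambda: "submit_button" if clock.now >= 1.0 else None,
    timeout=5.0,
    clock=clock.time,
    sleep=clock.sleep,
)
print(button, clock.now)  # submit_button 1.0
```

On slow hardware the same test simply polls longer instead of failing at an arbitrary sleep boundary, and the timeout still turns a true hang into a clear, classifiable failure.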

None of this is glamorous. All of it is fixable with device-level debugging that is deterministic and fast.

The real solution, real device QA, automated

Stop treating “an agent exploring the app” as the answer. Stop hiring more people to manually tap every flow. The pragmatic path is simple and brutal.

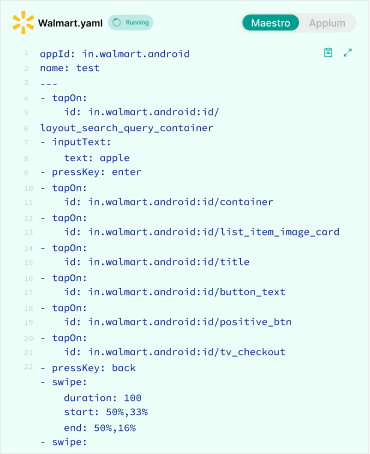

- Auto-generate deterministic Appium flows from the UI model the builder already has. If your platform can render a preview, it can map accessibility nodes and probable flows. Use that data to seed scripts.

- Run those scripts across a curated device farm that covers the top devices and a tail of older OS versions. Emulators first, then physical devices for the final gate. Measure KPIs like platform coverage.

- Capture structured diagnostics on failure: crash dumps, selector failures, screenshot diffs, stack traces, and logs. A vague “it failed” report is useless. Diagnostics let you blame the code, not the user.

- Feed deterministic failure classes into a tiny repair pipeline that generates suggested repository changes, PRs, and tests.

- Push a PR with the failing screenshot, the failing selector, and the minimal code change. A human reviews it and clicks merge. Rerun. Promote the build. Repeat.
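The flow-seeding and failure-classification steps above can be sketched in a few dozen lines. This is a minimal illustration under stated assumptions, not a production pipeline. `UINode` stands in for whatever accessibility tree a builder's preview exposes. The `"button"` and `"text_field"` roles, the `FailureReport` fields, and the 2% screenshot-diff threshold are hypothetical, and nothing here talks to Appium. In a real setup each `Step` would become an Appium command located by accessibility id. The point is determinism: the same UI tree always yields the same steps, and the same report always maps to the same failure class.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


@dataclass
class UINode:
    # Hypothetical accessibility node exported by a builder's preview renderer.
    accessibility_id: str
    role: str  # e.g. "screen", "button", "text_field"
    children: list["UINode"] = field(default_factory=list)


@dataclass(frozen=True)
class Step:
    action: str  # "tap" or "type"
    target: str  # accessibility id, used as the selector
    text: str = ""


def seed_flow(root: UINode, sample_text: str = "test") -> list[Step]:
    """Depth-first walk turning interactive nodes into an ordered, repeatable step list."""
    steps: list[Step] = []
    stack = [root]
    while stack:
        node = stack.pop()
        if node.role == "text_field":
            steps.append(Step("type", node.accessibility_id, sample_text))
        elif node.role == "button":
            steps.append(Step("tap", node.accessibility_id))
        # Push children in reverse so they are visited in document order.
        stack.extend(reversed(node.children))
    return steps


class FailureClass(Enum):
    CRASH = "crash"
    SELECTOR_NOT_FOUND = "selector_not_found"
    TIMEOUT = "timeout"
    VISUAL_DIFF = "visual_diff"
    UNKNOWN = "unknown"


@dataclass
class FailureReport:
    # Hypothetical structured diagnostics captured when a step fails.
    step: Step
    crash_dump: Optional[str] = None
    element_found: bool = True
    timed_out: bool = False
    screenshot_diff_ratio: float = 0.0  # fraction of pixels differing from the baseline


def classify(report: FailureReport, diff_threshold: float = 0.02) -> FailureClass:
    # Rules are checked in a fixed order, so a given report always gets the same class.
    if report.crash_dump:
        return FailureClass.CRASH
    if not report.element_found:
        return FailureClass.SELECTOR_NOT_FOUND
    if report.timed_out:
        return FailureClass.TIMEOUT
    if report.screenshot_diff_ratio > diff_threshold:
        return FailureClass.VISUAL_DIFF
    return FailureClass.UNKNOWN


def pr_title(report: FailureReport) -> str:
    """One-line summary a repair step could use as a PR title."""
    return f"[{classify(report).value}] {report.step.action} on '{report.step.target}'"


login = UINode("login_screen", "screen", [
    UINode("email", "text_field"),
    UINode("password", "text_field"),
    UINode("submit", "button"),
])
flow = seed_flow(login)
print([(s.action, s.target) for s in flow])
# [('type', 'email'), ('type', 'password'), ('tap', 'submit')]
print(pr_title(FailureReport(flow[2], element_found=False)))
# [selector_not_found] tap on 'submit'
```

Ordering the classification rules (crash, then missing selector, then timeout, then visual diff) keeps each failure in exactly one bucket, so a repair step can key off the class instead of parsing free-form logs.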

This is not rocket science. It is operational discipline. It is productized, real device QA.

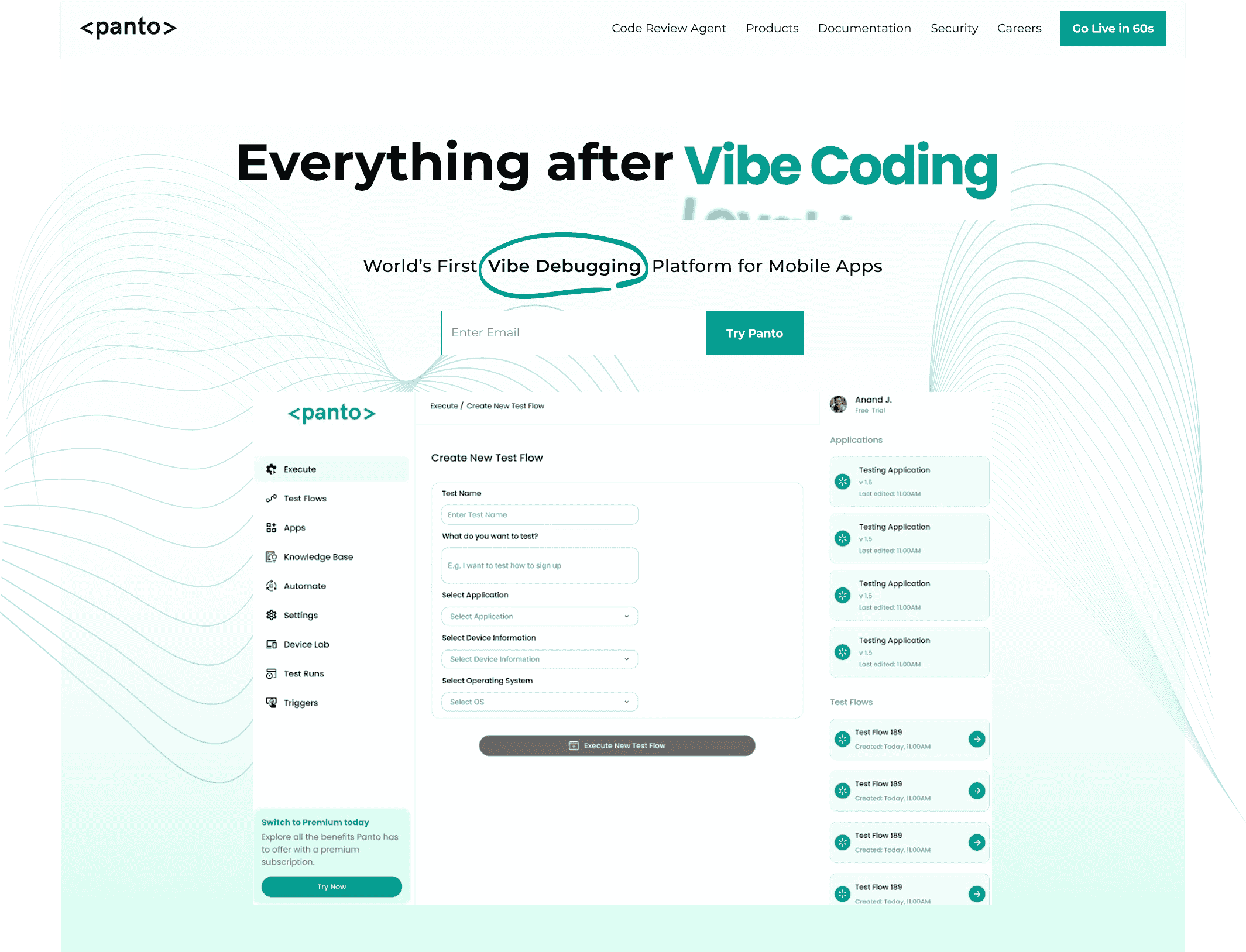

Everything After Vibe Coding

Panto AI helps developers find, explain, and fix bugs faster with AI-assisted QA, reducing downtime and preventing regressions.

- ✓ Explain bugs in natural language

- ✓ Create reproducible test scenarios in minutes

- ✓ Run scripts and track issues with zero AI hallucinations

The product opportunity everyone is ignoring

The next big infrastructure play in mobile is not another generator. It is the invisible QA layer that makes generated apps reliable.

Auto-generate Appium flows. Auto-run them on device farms. Auto-translate failures into PRs. One click to fix. One click to rerun. Ship confidently.

We at Panto AI are solving for “Everything after Vibe Coding”. If this resonates with you, reach out!