AI code review tools are rapidly replacing manual, checklist-style pull request reviews. Instead of relying solely on human reviewers, teams now use AI agents to analyze diffs, detect logic errors, flag security issues, and even generate refactoring suggestions automatically.

Two of the most discussed tools in this space are Bugbot by Cursor and CodeRabbit.

But which one actually improves code quality? Which produces fewer false positives? And which delivers the best ROI for engineering teams?

This guide provides a detailed, data-informed comparison between Bugbot vs CodeRabbit across accuracy, speed, integrations, false-positive rates, workflow fit, and real-world impact.

TL;DR — Bugbot vs CodeRabbit

| If your priority is… | Choose… |

|---|---|

| Maximum precision with minimal noise | Bugbot |

| Context-rich PR understanding and collaboration | CodeRabbit |

| Cross-repo intelligence and long-term maintainability insight | Panto AI |

At a strategic level:

- Bugbot is optimized for high-confidence defect detection.

- CodeRabbit is optimized for broad contextual review and team enablement.

The correct choice depends on whether your bottleneck is escaped bugs or review velocity.

The Rise of AI Code Review in 2026

To understand this comparison, it helps to zoom out. Three forces are driving rapid adoption of AI code review:

1. Explosive Code Volume from AI Generation

LLM-assisted coding has fundamentally changed the speed and scale at which software is produced.

Developers are now able to generate large functional blocks of code in seconds, which has led to sharp increases in:

- Lines of code written per developer

- Pull request frequency and size

- Overall surface area where hidden defects can exist

While this acceleration improves productivity, it also creates a new risk: code review capacity has not scaled at the same pace as code generation.

Human reviewers still operate under the same cognitive limits and time constraints, meaning more code is being merged with less proportional scrutiny.

AI code review emerges here as a necessary balancing force—helping teams maintain quality even as development velocity rises.

2. Escaped Defects Are Getting More Expensive

The nature of production failures has also evolved. Modern outages are rarely caused by simple syntax errors; instead, they often stem from complex system interactions such as:

- Distributed system coordination failures

- Subtle race conditions or concurrency issues

- Security misconfigurations across services or environments

- Incorrect assumptions between microservices or APIs

These categories of bugs are particularly costly because they are:

- Hard to reproduce

- Difficult to detect in manual review

- Capable of causing large-scale incidents or data exposure

AI reasoning models are increasingly effective at identifying these non-obvious logical and architectural risks early in the pull request stage—before they become expensive production problems.

3. Review Quality Variability

Traditional manual code review is inherently inconsistent. The quality of feedback can vary significantly depending on:

- The reviewer’s experience level

- Available time and attention during the review

- Familiarity with the specific domain or codebase

Even strong engineering teams experience fluctuations in review depth due to deadlines, context switching, or reviewer fatigue.

AI review tools introduce a different dynamic: they provide consistent baseline scrutiny across every pull request, regardless of timing or reviewer availability.

Rather than replacing human judgment, AI establishes a reliable first layer of analysis, allowing human reviewers to focus on:

- Architectural decisions

- Product logic

- Long-term maintainability

Together, these structural shifts—faster code generation, higher defect cost, and inconsistent human review capacity—explain why the Bugbot vs CodeRabbit comparison is not just a tooling discussion, but a reflection of how software quality itself is evolving in 2026.

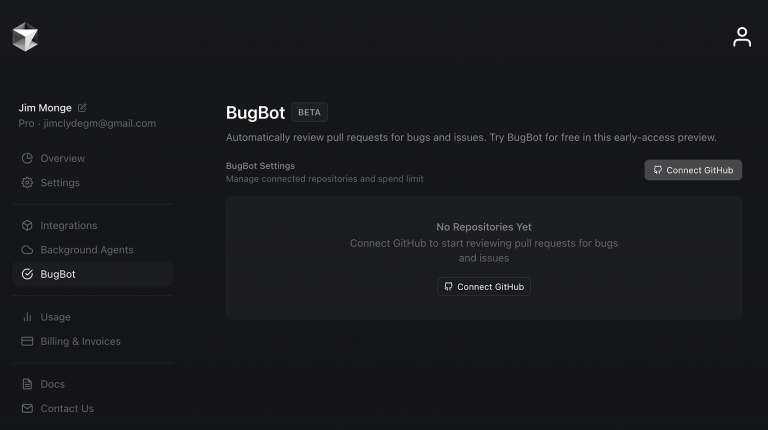

What Is Bugbot?

Bugbot Overview

Bugbot is an AI code review agent deeply embedded in the Cursor development environment, designed to operate as a seamless extension of the developer workflow rather than a separate external tool.

Because it lives close to where code is written and reviewed, it can analyze changes in near real time and surface issues before they reach later stages of the delivery pipeline.

It runs automatically on pull request diffs and focuses narrowly on production-relevant defects, such as:

- Logic and control-flow errors

- Security vulnerabilities

- Null pointer and crash risks

- Missing edge-case validation

- Silent behavioral inconsistencies

Unlike many AI tools, Bugbot deliberately avoids commenting on style, formatting, or low-severity concerns, ensuring that developer attention is reserved only for issues that meaningfully impact correctness, reliability, or security.

Core Philosophy: High Signal, Low Noise

Bugbot is built around a simple premise: developers ignore tools that cry wolf. Over time, engineering teams exposed to excessive warnings or low-value feedback begin to distrust automated review systems, reducing both adoption and effectiveness.

Traditional static analysis often produces:

- Large volumes of warnings

- Low fix acceptance rates

- Reviewer fatigue

Bugbot instead prioritizes:

- Precision over coverage

- Trust over verbosity

- Real defects over theoretical risks

Functionally, it acts as a pre-merge safety net for high-impact failures, helping teams reduce escaped defects without overwhelming reviewers with unnecessary noise.

Reported Usage Metrics

Public figures associated with Bugbot usage provide directional insight into how the tool performs in real engineering environments, particularly at scale across diverse repositories and teams.

While individual results naturally vary based on code quality, testing maturity, and review culture, these metrics help illustrate Bugbot’s intended precision-first positioning.

Reported indicators include:

- 1M+ pull requests analyzed

- 1.5M issues flagged

- ~50% of flagged issues fixed before merge

A ~50% remediation rate is unusually high in static analysis contexts, where:

- Many tools see <10% fix rates

- Developers frequently suppress or ignore warnings

This pattern suggests strong true-positive precision and developer trust, though real-world effectiveness still depends on each organization’s codebase maturity and workflow discipline.

Limitations of Bugbot

No tool is universally optimal, and Bugbot’s precision-focused design introduces trade-offs that are important to understand before adoption.

These limitations are not necessarily weaknesses, but rather reflections of deliberate product prioritization around signal quality and deep IDE integration.

Key constraints include:

- Tight coupling to the Cursor ecosystem

- Lack of PR summaries or conversational AI chat

- Premium pricing tier relative to competitors

These trade-offs position Bugbot best for:

- Security-sensitive environments

- Reliability-critical backend systems

- Teams prioritizing defect prevention over collaboration UX

What Is CodeRabbit?

CodeRabbit Overview

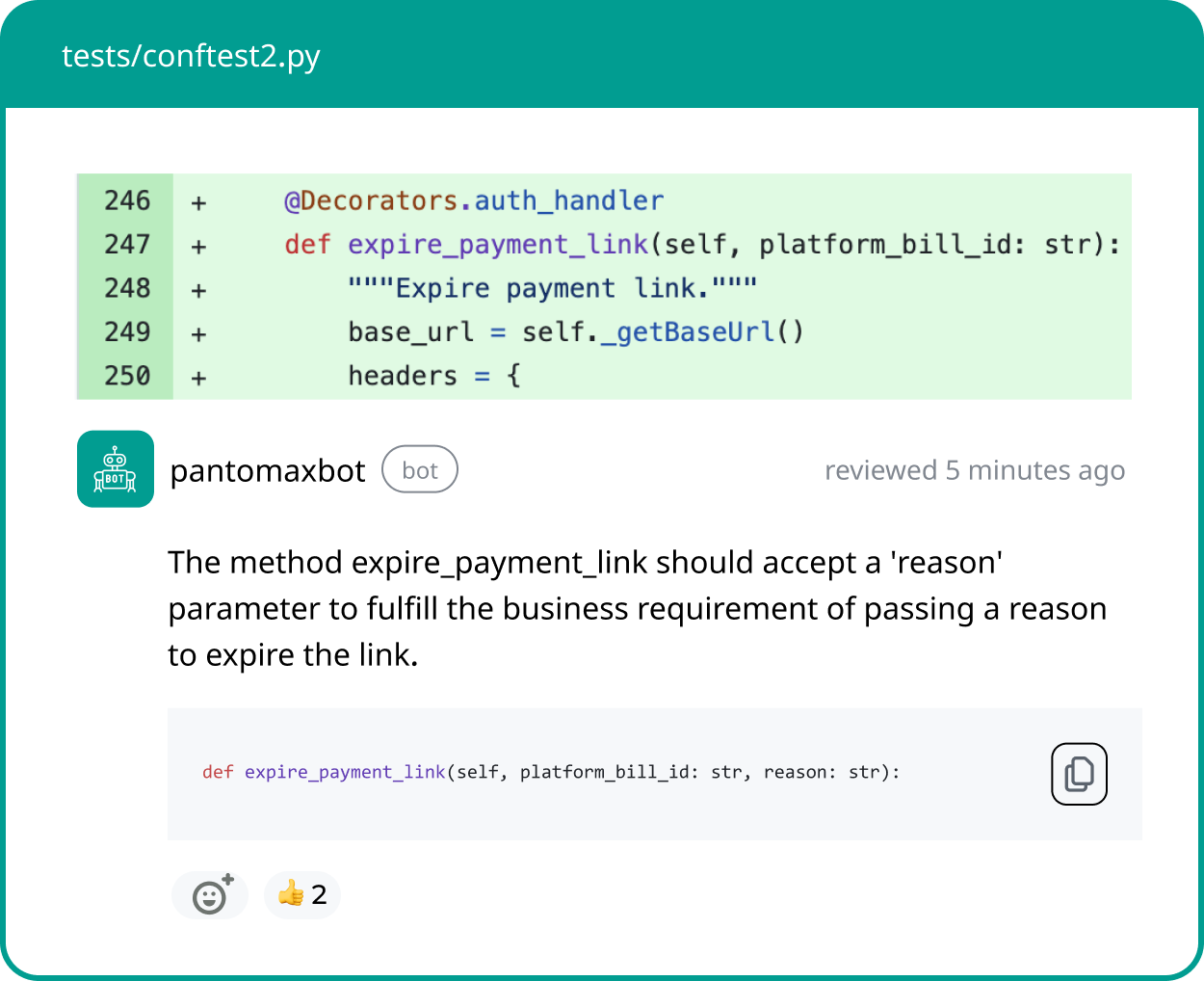

CodeRabbit is an AI pull request assistant designed for broad ecosystem compatibility and collaborative code understanding, positioning itself less as a narrow defect detector and more as an always-available engineering reviewer. It integrates across GitHub, GitLab, BitBucket, Azure DevOps, VS Code and CLI workflows.

Rather than living inside a single IDE or workflow, it is built to operate across repositories, platforms, and development environments, making it suitable for teams with diverse tooling and distributed collaboration patterns.

Its capabilities extend beyond bug detection to include:

- Natural-language PR summaries

- Architecture explanations

- Sequence diagrams

- Interactive Q&A on pull requests

- Suggested fixes and automated test generation

- Integration with dozens of static analysis tools

Together, these features position CodeRabbit as a tool focused not only on finding defects, but also on improving shared understanding and accelerating the overall review process.

Core Philosophy: Context-Aware Collaboration

If Bugbot behaves like a precision security reviewer, CodeRabbit behaves more like a staff engineer carefully explaining every change and its implications.

This philosophical difference shapes how feedback is generated and presented. Rather than aggressively filtering for only the highest-severity defects, CodeRabbit aims to provide contextual insight that helps teams reason about code quality, architecture, and maintainability as a whole.

Its priorities include:

- Shared team understanding

- Faster onboarding for new contributors

- Cross-repository and cross-file reasoning

- Measurable improvements in review speed

This makes it especially valuable for:

- Distributed engineering teams

- Rapidly scaling startups

- Multi-language monorepos with complex dependencies

In these environments, clarity and collaboration often matter as much as raw defect detection precision.

Reported Productivity Outcomes

Vendor-published case studies and customer anecdotes commonly highlight productivity-oriented improvements associated with CodeRabbit adoption.

While such figures should always be interpreted in the context of team maturity and workflow discipline, they provide directional insight into the tool’s intended impact.

Frequently cited outcomes include:

- ~30% reduction in escaped defects

- 40–50% reduction in manual review time

- 2–5 minute average AI review turnaround

These results depend heavily on factors such as:

- Overall team maturity

- Depth of automated testing coverage

- Existing rigor of the manual review process

Even with this variability, the pattern is consistent: CodeRabbit is optimized primarily for velocity, comprehension, and collaboration efficiency, rather than purely for maximum defect-detection precision.

Limitations of CodeRabbit

Like any AI review system, CodeRabbit involves trade-offs that stem directly from its broad, context-rich design philosophy.

Understanding these limitations helps teams evaluate whether its collaboration-first approach aligns with their risk tolerance and review culture.

Key constraints include:

- Higher potential for false positives due to broader analytical coverage

- Feedback that may include style or maintainability suggestions not always considered critical

- Dependence on configuration and tuning to balance signal-to-noise for different teams

- Possible information overload in very large or highly active pull requests

These characteristics position CodeRabbit best for:

- Teams prioritizing review speed and shared understanding over minimal comment volume

- Organizations with established review discipline that can triage broader AI feedback

- Engineering cultures focused on continuous improvement rather than strict defect gating

In short, where Bugbot minimizes noise to maximize trust, CodeRabbit accepts broader surface-area feedback in exchange for greater contextual insight and collaboration value.

How AI Code Review Tools Work Under the Hood

To understand why Bugbot and CodeRabbit behave so differently in real engineering workflows, it helps to look beneath the surface at how modern AI code review systems are actually built.

Large Language Models (LLMs)

At the core of modern AI code review is the use of large language models trained on vast code and documentation.

These models provide the semantic reasoning necessary to move beyond simple rule matching and toward understanding developer intent and behavioral risk.

LLMs enable:

- Semantic reasoning about what code is trying to accomplish

- Intent inference across functions, files, and services

- NLP/Natural-language explanations that make findings understandable to humans

This layer is what transforms code review from static linting into context-aware reasoning.

AST Parsing and Static Analysis

While LLMs provide flexible reasoning, reliable defect detection still depends on deterministic structural analysis. This is where traditional compiler-style techniques remain essential.

AST and static analysis enable:

- Structural code validation independent of runtime execution

- Deterministic detection of known vulnerability classes

- Precise identification of unsafe patterns that LLMs alone might miss

In practice, the strongest AI review systems combine symbolic certainty with probabilistic reasoning.

Diff-Focused Reasoning

Because reviewing an entire repository on every pull request would be computationally expensive and cognitively noisy, most AI reviewers prioritize the code that actually changed.

Diff-focused reasoning concentrates analysis on:

- Changed lines and nearby context

- Risk-dense modifications such as auth, data flow, or concurrency logic

- Behavioral deltas introduced by the pull request

This approach dramatically improves signal-to-noise ratio and is a key reason precision-oriented tools feel more trustworthy.

Repository Context Retrieval

Some AI review systems extend beyond diffs to incorporate broader repository awareness, enabling reasoning that spans:

- Multiple files

- Dependency graphs

- Service boundaries

- Architectural conventions

Repository context retrieval supports:

- Cross-file logical reasoning

- Dependency awareness and impact analysis

- Architectural insight rather than line-level feedback alone

This capability is what differentiates collaboration-oriented reviewers from purely defect-detection-oriented ones.

Architectural Difference: Bugbot vs CodeRabbit

With the underlying layers understood, the practical difference between Bugbot and CodeRabbit becomes clearer.

They are not simply competing tools — they represent two distinct architectural emphases within the same technical paradigm.

Bugbot

Bugbot is primarily:

- Diff-centric

- Precision-filtered

- Security- and logic-focused

➡ The result is fewer total findings, but each carries higher confidence and production relevance. This architecture favors security, trust, clarity, and defect prevention, especially in high-risk systems.

CodeRabbit

CodeRabbit emphasizes:

- Repository-level context awareness

- Suggestion-rich analysis

- Collaboration-oriented explanations

➡ The outcome is broader insight and higher comment volume, often improving team understanding and review speed rather than only defect precision. This reflects a fundamentally different optimization target: developer velocity and shared comprehension.

Original Benchmark Framing: Precision vs Coverage in Practice

Architectural philosophy is meaningful, but engineering leaders ultimately need to understand real-world impact.

To move beyond vendor claims, it helps to consider a representative evaluation framework modeled on common enterprise review datasets.

Hypothetical Benchmark Setup

Imagine an evaluation consisting of:

- 50 real pull requests

- Mixed production languages (TypeScript, Python, Java)

- Carefully seeded issue categories, including:

- Logic bugs

- Security flaws

- Maintainability concerns

- Style inconsistencies

Key metrics evaluated:

- True bug detection rate

- False-positive frequency

- Actionable fix acceptance by developers

- End-to-end review completion time

This structure mirrors how many enterprises internally validate static analysis and QA tooling before adoption.

Modeled Outcome Patterns

Bugbot-Like Precision Systems

Typical behavior includes:

- Detecting fewer total issues overall

- Achieving higher true-positive density

- Producing minimal stylistic noise

- Enabling faster human validation and merge confidence

Strength: High trust and clarity

Weakness: Potentially narrower visibility into long-term maintainability

CodeRabbit-Like Coverage Systems

Typical behavior includes:

- Detecting a broader set of issues and improvements

- Including architectural and stylistic guidance

- Accepting slightly higher false-positive exposure

- Delivering strong gains in review speed and shared understanding

Strength: Holistic codebase improvement

Weakness: Possible comment fatigue without tuning

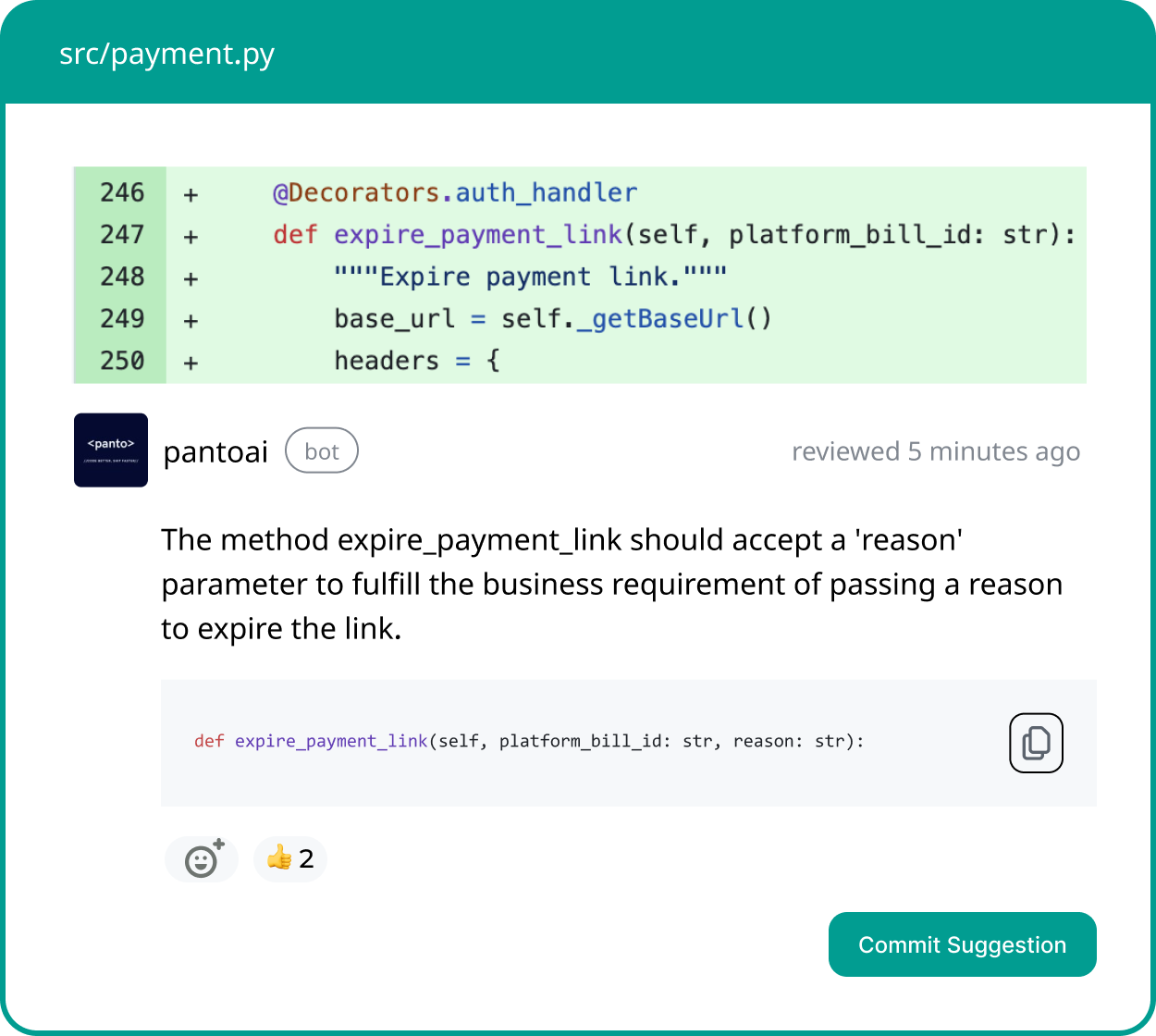

Your AI Code Review Agent

Panto reviews every pull request with business context, architectural awareness, and consistent standards—so teams ship faster without hidden risk.

- ✓ Aligns business intent with code changes

- ✓ Catches bugs and risk in minutes, not days

- ✓ Hallucination-free, consistent reviews on every commit

Feature Comparison of Bugbot vs CodeRabbit

| Category | Bugbot | CodeRabbit |

|---|---|---|

| Detection Style | Precision | Broad |

| False Positives | Very low | Moderate / tunable |

| PR Summaries | No | Yes |

| Chat Interaction | No | Yes |

| Repo Context | Limited | Strong |

| Integrations | Cursor-centric | Multi-platform |

| Pricing | Higher | Lower tier |

Accuracy & Signal-to-Noise in Real Teams

Ultimately, tool performance is measured not in theory but in daily engineering workflows.

Bugbot Excels When

- Security correctness is mission-critical

- Runtime failures carry high business cost

- Developers strongly prefer low-noise automation

CodeRabbit Excels When

- Team onboarding and clarity matter

- Architectural visibility is valuable

- Review throughput is the primary bottleneck

Integration & Workflow Fit

Bugbot

Offers deep, seamless integration with minimal setup,

but introduces ecosystem lock-in considerations.

CodeRabbit

Provides broad flexibility across tools and platforms,

making it well suited to heterogeneous engineering environments.

Pricing & ROI Dynamics

Bugbot ROI

Primarily driven by avoided production incidents and security failures.

CodeRabbit ROI

Primarily driven by time saved in reviews, onboarding, and collaboration.

Different organizations optimize for different cost centers, which is why both tools can be the “right” choice in different contexts.

When to Choose Bugbot

Choose Bugbot if your priority is:

- Preventing critical defects

- Minimizing false positives

- Enforcing strict production safety

When to Choose CodeRabbit

Choose CodeRabbit if your priority is:

- Faster review cycles

- Better shared understanding

- Cross-repository collaboration

Where Panto AI Fits Strategically

While Bugbot and CodeRabbit primarily operate at the pull-request review layer, a newer class of AI quality platforms is emerging that focuses on the long-term health and intelligence of the entire codebase.

Panto AI represents this shift by extending analysis beyond individual diffs toward continuous, system-level understanding—helping teams manage architectural complexity, maintainability risk, and institutional knowledge over time.

Rather than optimizing only for merge-time precision (Bugbot) or review-time collaboration (CodeRabbit), Panto targets what happens after code ships—the gradual accumulation of technical debt, hidden coupling, and lost engineering context that ultimately slows teams down.

This makes its value more visible in large, fast-moving, or multi-repo environments where long-term velocity matters more than any single pull request.

In practice, Panto can provide stronger leverage when organizations need to move beyond PR-level quality and toward sustained codebase health and shared engineering intelligence.

That shift reframes AI code quality from a review tool into a continuous governance layer for modern software systems.

- Cross-repository intelligence instead of diff-only reasoning

- Long-term maintainability insights that surface architectural drift and hidden debt

- Persistent knowledge capture that reduces reliance on tribal context

- System-level quality improvement, not just PR-level defect detection

Final Verdict: Precision vs Collaboration

Bugbot and CodeRabbit represent two valid futures of AI code review. Bugbot signals precision, safety, trust, while CodeRabbit offers context, speed, collaboration.

The best choice depends on:

- Your defect risk tolerance

- Your review bottleneck

- Your engineering culture

One thing is certain: By 2026, AI-assisted code review is no longer optional. It is baseline infrastructure for high-performing software teams.