Using recent industry measurements for global downloads and uninstall benchmarks, a realistic 2026 estimate is that roughly 370–380 million apps are uninstalled every single day worldwide.

This number is driven by huge daily install volumes combined with stubbornly high short-term uninstall rates. The implication is stark: product teams and QA-driven web and mobile teams cannot treat releases and fixes as one-time events. If your quality signals do not scale with release velocity, users will delete your mobile apps and move on.

Key sources for these estimates include AppsFlyer uninstall benchmarks and global app download projections.

How the Estimate is Calculated: Step-By-Step

- Industry trackers place global mobile app downloads at about 299 billion in 2025, a figure commonly used to model near-term 2026 volumes. That converts to an average of about 819 million installs per day when you divide by 365.

- AppsFlyer’s uninstall analysis shows that roughly 46.1% of installs are uninstalled within 30 days, on average across Android markets (iOS uninstall tracking is limited after iOS 15). That benchmark gives us a simple way to convert daily installs into daily uninstalls within a 30-day window.

- Multiply the two quantities:

  - 299,000,000,000 downloads per year divided by 365 = 819,178,082 installs per day.

  - 819,178,082 installs per day times 0.461 = 377,641,096 uninstalls per day (within 30 days of install).

  - Rounded and presented as a realistic 2026 range, that is about 370–380 million daily uninstalls.

These are global, production uninstalls attributable to fresh installs and subsequent churn. The number is an estimate intended to be conservative and transparent about assumptions.
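The arithmetic above can be reproduced in a few lines. This is a minimal sketch that uses only the two figures quoted in this section. Like the text, it applies the Android-derived 30-day rate to all downloads. The function and constant names are illustrative.

```python
# Inputs taken from the figures quoted in this section.
ANNUAL_DOWNLOADS = 299_000_000_000  # global app downloads, 2025 estimate
UNINSTALL_RATE_30D = 0.461          # share of installs removed within 30 days (Android benchmark)


def daily_uninstalls(annual_downloads: int, uninstall_rate: float, days: int = 365) -> float:
    """Average installs per day multiplied by the 30-day uninstall rate."""
    installs_per_day = annual_downloads / days
    return installs_per_day * uninstall_rate


installs_per_day = ANNUAL_DOWNLOADS / 365
estimate = daily_uninstalls(ANNUAL_DOWNLOADS, UNINSTALL_RATE_30D)

print(f"{installs_per_day:,.0f} installs per day")   # 819,178,082
print(f"{estimate:,.0f} uninstalls per day")         # 377,641,096
```

Changing either input shows how sensitive the headline number is: the result scales linearly with both the download total and the uninstall rate.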

What that number actually means

A daily uninstall count in the hundreds of millions is not an abstract stat. It reflects real user behavior and immediate consequences:

- Users try apps at massive scale, but many uninstall apps quickly when expectations are not met. AppsFlyer and other benchmarks show most uninstalls happen very early, often on Day 1. That means first impressions and onboarding matter more than ever.

- Categories differ. Gaming and dating see higher exploratory installs and higher short-term uninstalls. Finance and brand-backed apps typically keep users longer. Country-level differences also matter: developing markets often show higher uninstall rates because of storage, connectivity, and device constraints.

- Platform differences exist. Some analyses show Android uninstall behavior can be materially different from iOS, especially because uninstall attribution is limited on iOS after privacy changes. That complicates cross-platform comparisons.

Where Users are Leaving and Why

Benchmarks and industry studies point to a few key timings and triggers:

- Day 0 and Day 1 are decisive. Significant fractions of uninstalls occur within hours of first use. That includes users who open an app once and delete it. One report puts first-session abandonment at 20% or more in many categories.

- Common triggers include crashes, poor performance, confusing onboarding, misleading app store creatives, excessive permissions, and storage or battery concerns. Even small failures in critical flows – login, checkout, media upload – produce outsized churn.

- Marketing-driven installs with weak product fit produce higher uninstall rates. Organic installs tend to stick better because intent is higher. AppsFlyer highlights a consistent gap between organic and non-organic uninstall behavior.

Why this matters to QA and product teams

If nearly half of installs (46.1% in the AppsFlyer benchmark cited above) do not persist beyond a month, the math for acquisition economics, lifetime value, and growth changes dramatically. For QA this translates into three practical imperatives:

- Protect first impressions. Tests and checks that validate onboarding, first open flows, and cold start performance should be prioritized and run extremely early in pipelines. These are the flows that decide Day 0 and Day 1 retention.

- Validate critical device/OS permutations. Because uninstalls spike on specific devices and conditions, deterministic checks across a representative device matrix are essential. Random sampling is not enough.

- Move from reactive firefighting to preventive signals. Instead of waiting for crash reports and user complaints, run pre-release checks that catch the top causes of uninstalls: crashes, timeouts, network edge cases, and permission flows.
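One way to make the device matrix deterministic rather than randomly sampled is to rank device cohorts by how much they contribute to uninstalls and always test the top few. The sketch below assumes you already have per-cohort install shares and uninstall rates. The `DeviceCohort` type, the `pick_matrix` helper, and every figure in the sample data are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeviceCohort:
    device: str
    os_version: str
    install_share: float   # fraction of all installs coming from this cohort
    uninstall_rate: float  # fraction of those installs removed within 30 days


def pick_matrix(cohorts: list[DeviceCohort], size: int) -> list[DeviceCohort]:
    """Keep the `size` cohorts that account for the most uninstalls.

    The name-based tie-break makes the selection stable from run to run.
    """
    ranked = sorted(
        cohorts,
        key=lambda c: (-c.install_share * c.uninstall_rate, c.device, c.os_version),
    )
    return ranked[:size]


# Hypothetical sample data, for illustration only.
cohorts = [
    DeviceCohort("budget-phone-a", "Android 11", 0.30, 0.55),
    DeviceCohort("flagship-b", "Android 14", 0.25, 0.35),
    DeviceCohort("midrange-c", "Android 13", 0.35, 0.45),
    DeviceCohort("tablet-d", "Android 12", 0.10, 0.50),
]

matrix = pick_matrix(cohorts, size=2)
print([c.device for c in matrix])
```

Ranking by install share times uninstall rate favors cohorts that are both common and churn-prone, which a uniform random sample would often miss.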

Where traditional QA fails at preventing uninstalls

Many teams already use automated tests, but common failure modes mean QA does not stop churn:

- Tests that focus on implementation details rather than business intent become brittle as UI changes. They create noise and false positives that erode trust.

- Slow suites that run overnight are useless for catching onboarding regressions that must be addressed in minutes.

- Lack of context. Tests that pass without validating business rules or performance under real network conditions miss the problems that drive uninstalls.

These gaps explain why high release velocity without a different QA approach still results in large uninstall volumes.

What Works: A Panto QA Style Approach

Based on the retention and uninstall patterns above, a few practical steps reduce uninstall risk measurably:

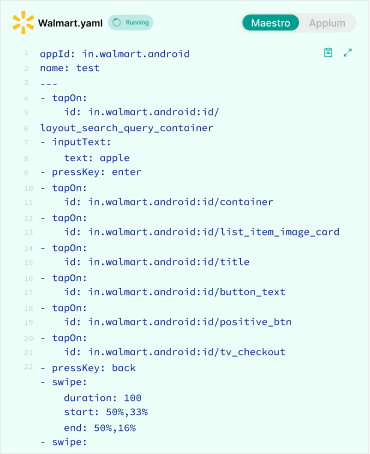

- Deterministic, intent-based tests for first-session flows. Tests should assert business outcomes – successful login, a completed onboarding step, or a purchase confirmation – not fragile DOM or selector conditions.

- Self-healing adaptation for UI drift. When the UI changes but the intent remains, the test should adapt or provide clear diagnostic context rather than flake.

- Fast PR-level feedback. Keep the “must-run-before-merge” suite under a few minutes. Run extended device permutations in parallel so feature velocity is not blocked.

- Device farm integration and network shaping. Validate under battery saver modes, on slow networks, and on older or low-end devices rather than only on new ones, since these are the conditions that produce outsized uninstalls.

- Measurement and attribution. Track uninstall attribution and the timing of uninstalls relative to installs and releases. Correlate spikes with release changes, marketing channels, or device cohorts.
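The measurement step can be sketched as a small cohort calculation over install and uninstall timestamps. This example assumes Day 0 means an uninstall within 24 hours of install and Day 1 means between 24 and 48 hours. Some teams use calendar days instead. The event rows, cohort names, and the `early_uninstall_rates` helper are hypothetical.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical event rows: (cohort, installed_at, uninstalled_at or None).
events = [
    ("organic", datetime(2026, 1, 5, 9, 0), None),
    ("organic", datetime(2026, 1, 5, 10, 0), datetime(2026, 1, 5, 10, 20)),
    ("paid", datetime(2026, 1, 5, 11, 0), datetime(2026, 1, 5, 11, 5)),
    ("paid", datetime(2026, 1, 5, 12, 0), datetime(2026, 1, 6, 15, 0)),
    ("paid", datetime(2026, 1, 5, 13, 0), datetime(2026, 1, 20, 8, 0)),
    ("paid", datetime(2026, 1, 5, 14, 0), None),
]


def early_uninstall_rates(rows):
    """Return {cohort: (day0_rate, day1_rate)} as fractions of that cohort's installs."""
    installs = defaultdict(int)
    day0 = defaultdict(int)
    day1 = defaultdict(int)
    for cohort, installed_at, uninstalled_at in rows:
        installs[cohort] += 1
        if uninstalled_at is None:
            continue  # still installed
        age_days = (uninstalled_at - installed_at).days  # whole 24-hour periods elapsed
        if age_days == 0:
            day0[cohort] += 1
        elif age_days == 1:
            day1[cohort] += 1
    return {c: (day0[c] / n, day1[c] / n) for c, n in installs.items()}


rates = early_uninstall_rates(events)
for cohort, (d0, d1) in rates.items():
    print(f"{cohort}: Day 0 = {d0:.0%}, Day 1 = {d1:.0%}")
```

Grouping the same calculation by release version or device model instead of acquisition channel gives the other correlations mentioned above.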

When QA becomes an engine that proactively defends first impressions, the daily uninstall drain shrinks and retention improves. That is the fundamental business value.

Short checklist for product and QA leaders

- Instrument uninstall attribution and Day 0 and Day 1 metrics by cohort.

- Prioritize automated checks for onboarding, cold start, login, and payment paths.

- Run a representative device matrix for each release and flag regressions by cohort.

- Track false positive and flakiness rates and drive them down.

- Use release tactics – feature flags and canaries – combined with automated checks to limit blast radius.
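For the flakiness item in the checklist, one simple signal can be computed from CI history. This sketch assumes a test counts as flaky on a commit when it both passed and failed against that same commit. That is one common working definition, not the only one. The run records, test names, and the `flakiness_rate` helper are hypothetical.

```python
from collections import defaultdict

# Hypothetical CI history: (test name, commit id, passed).
runs = [
    ("test_onboarding", "a1", True),
    ("test_onboarding", "a1", False),
    ("test_onboarding", "b2", True),
    ("test_login", "a1", True),
    ("test_login", "b2", False),
    ("test_login", "b2", False),
]


def flakiness_rate(history):
    """Per test: share of commits on which the same code both passed and failed."""
    outcomes = defaultdict(lambda: defaultdict(set))
    for test, commit, passed in history:
        outcomes[test][commit].add(passed)
    return {
        test: sum(len(seen) == 2 for seen in by_commit.values()) / len(by_commit)
        for test, by_commit in outcomes.items()
    }


flaky = flakiness_rate(runs)
for test, rate in flaky.items():
    print(f"{test}: flaky on {rate:.0%} of commits")
```

Here `test_login` fails consistently on one commit, so it counts as a real regression rather than flakiness, while `test_onboarding` gives mixed results on identical code.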