Panto AI vs. CodeRabbit

One of the best pieces of advice we received when we started was to seek truth in everything we do—whether it's choosing the right problem to solve, building our product and features, or ensuring the highest quality in what we offer. Seeking truth is crucial for solving the right problems and staying on course. It's easy to get carried away by statistics and early metrics, only to find yourself too far off track to make necessary corrections.

As we've discussed in previous blogs, AI has made software development easier than ever. However, this is a double-edged sword—while it accelerates product and feature development, it does the same for your competitors. If you're solving a meaningful problem, you're likely in a competitive market, making it critical to stand out and deliver the best AI PR review solutions.

This raised some key questions:

- Where do we stand?

- How effective is our AI code reviewer?

- Does more funding always lead to a better product?

Seeking truth was essential, so we invested time and effort into building a benchmarking framework to identify the best AI-powered code review tools on the market. We're open-sourcing everything—our framework, the data used, the comments generated, the prompts applied, and the categorization process—so developers can make informed decisions when selecting the best pull request review solutions.

How We Conducted the Benchmark

To conduct a fair comparison, we signed up with our competitors and reviewed a set of neutral pull requests (PRs) from the open-source community. Each PR was analyzed independently by both Panto AI and CodeRabbit. We at Panto AI then used a large language model (LLM) to categorize the comments into different segments, reflecting how engineers perceive them in a real-world code review process.

To ensure fairness, we left the categorization entirely to the LLM.

Key Comment Categories in AI Code Review

We at Panto AI have classified all code review comments into the following categories, ranked by importance from highest to lowest:

Critical Bugs

A severe defect that causes failures, security vulnerabilities, or incorrect behavior, making the code unfit for production. These issues require immediate attention.

Example: A SQL injection vulnerability.

Refactoring

Suggested improvements to code structure, design, or modularity without changing external behavior. These changes enhance maintainability and reduce technical debt.

Example: Extracting duplicate code into a reusable function.

Performance Optimization

Identifying and addressing inefficiencies to improve execution speed, memory usage, or scalability.

Example: Use React.memo(Component) to prevent unnecessary re-renders.

Validation

Ensuring the correctness and completeness of the code concerning business logic, functional requirements, and edge cases.

Example: Checking if an API correctly handles invalid input or missing parameters.

Note: While valuable, validation comments become frustrating when they appear excessively.

Nitpick

Minor stylistic or formatting issues that don’t affect functionality but improve readability, maintainability, or consistency.

Example: Indentation, variable naming, and minor syntax preferences.

Note: Engineers often dislike these being pointed out.

False Positive

A review comment or automated alert that incorrectly flags an issue when the code is actually correct.

Example: A static analysis tool incorrectly marking a variable as unused.

Note: False positives waste engineers’ time and defeat the purpose of automated code reviews.
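As a rough illustration, the ranked categories above could be represented as an ordered enumeration. This is a minimal sketch, not the benchmark's actual code; the names `CommentCategory` and `sort_by_importance` are hypothetical.

```python
from enum import IntEnum


class CommentCategory(IntEnum):
    """Review-comment categories; a lower value means higher importance."""
    CRITICAL_BUG = 1
    REFACTORING = 2
    PERFORMANCE_OPTIMIZATION = 3
    VALIDATION = 4
    NITPICK = 5
    FALSE_POSITIVE = 6


def sort_by_importance(comments):
    """Order (category, text) pairs from most to least important.

    sorted() is stable, so comments in the same category keep their
    original relative order.
    """
    return sorted(comments, key=lambda item: item[0])


if __name__ == "__main__":
    sample = [
        (CommentCategory.NITPICK, "Rename tmp to something descriptive."),
        (CommentCategory.CRITICAL_BUG, "User input is concatenated into SQL."),
    ]
    for category, text in sort_by_importance(sample):
        print(category.name, "-", text)
```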

Benchmarking Methodology

To ensure a fair comparison, we followed these principles:

- We compiled a set of 17 open-source PRs and reviewed each of them with both Panto AI and CodeRabbit.

- We used OpenAI's o3-mini API (best for coding) to classify comments, rather than relying on human judgment, as code reviews are inherently subjective and prone to bias.

- We eliminated words or tags like Important, Security, or Critical from bot-generated comments to prevent the LLM from being influenced by predefined labels.
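The label-stripping step can be sketched as below. This is an assumption-laden illustration, not the code used in the benchmark: the function name `strip_labels`, the exact word list, and the handling of Markdown emphasis around a label are all hypothetical.

```python
import re

# Words named in the methodology; the real list may be longer (assumption).
LABEL_WORDS = ("Important", "Security", "Critical")

# Match a label word, optional surrounding "**" or "[ ]" markup,
# and any trailing colon or whitespace.
_LABEL_RE = re.compile(
    r"[\[*]*\b(?:" + "|".join(map(re.escape, LABEL_WORDS)) + r")\b[\]*]*[:\s]*",
    re.IGNORECASE,
)


def strip_labels(comment: str) -> str:
    """Remove severity-style label words so they cannot bias the classifier."""
    without_labels = _LABEL_RE.sub("", comment)
    # Collapse any double spaces left behind by the removal.
    return re.sub(r"\s{2,}", " ", without_labels).strip()


if __name__ == "__main__":
    print(strip_labels("**Security** Validate the token before use"))
```

A word-level removal like this is blunt: it also drops the words when they appear in ordinary sentences, which matches the stated goal of keeping predefined labels away from the LLM but can slightly alter the comment text.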

By open-sourcing this benchmark, we at Panto AI aim to provide complete transparency and help developers choose the best AI-powered code review tool for their needs. Let the results speak for themselves.

| Category | Panto AI | CodeRabbit |
|---|---|---|
| Critical Bugs | 12 | 10 |
| Refactoring | 14 | 8 |
| Performance Optimization | 5 | 1 |
| Validation | 0 | 8 |
| Nitpick | 3 | 13 |
| False Positive | 4 | 2 |
| Total | 38 | 42 |
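The comparisons drawn from this table are simple ratios of comment counts. The sketch below recomputes them from the table's numbers, assuming that "X% better" means "X% more comments in that category"; the helper names `percent_more`, `totals`, and `share` are hypothetical.

```python
# Comment counts copied from the results table: (Panto AI, CodeRabbit).
COUNTS = {
    "Critical Bugs": (12, 10),
    "Refactoring": (14, 8),
    "Performance Optimization": (5, 1),
    "Validation": (0, 8),
    "Nitpick": (3, 13),
    "False Positive": (4, 2),
}


def percent_more(a: int, b: int) -> float:
    """Return how many percent larger a is than b."""
    return 100 * (a - b) / b


def totals(counts):
    """Return (Panto AI total, CodeRabbit total) across all categories."""
    panto = sum(p for p, _ in counts.values())
    coderabbit = sum(c for _, c in counts.values())
    return panto, coderabbit


def share(counts, category, tool_index):
    """Fraction of one tool's comments that fall in the given category."""
    return counts[category][tool_index] / totals(counts)[tool_index]


if __name__ == "__main__":
    for category, (panto, coderabbit) in COUNTS.items():
        print(f"{category}: Panto AI {panto}, CodeRabbit {coderabbit}")
    print("Totals:", totals(COUNTS))
```

Under this reading, `percent_more(12, 10)` and `percent_more(14, 8)` give the critical-bug and refactoring figures, and `share` expresses each category as a fraction of a tool's total comments, which is useful because the two tools produced different totals.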

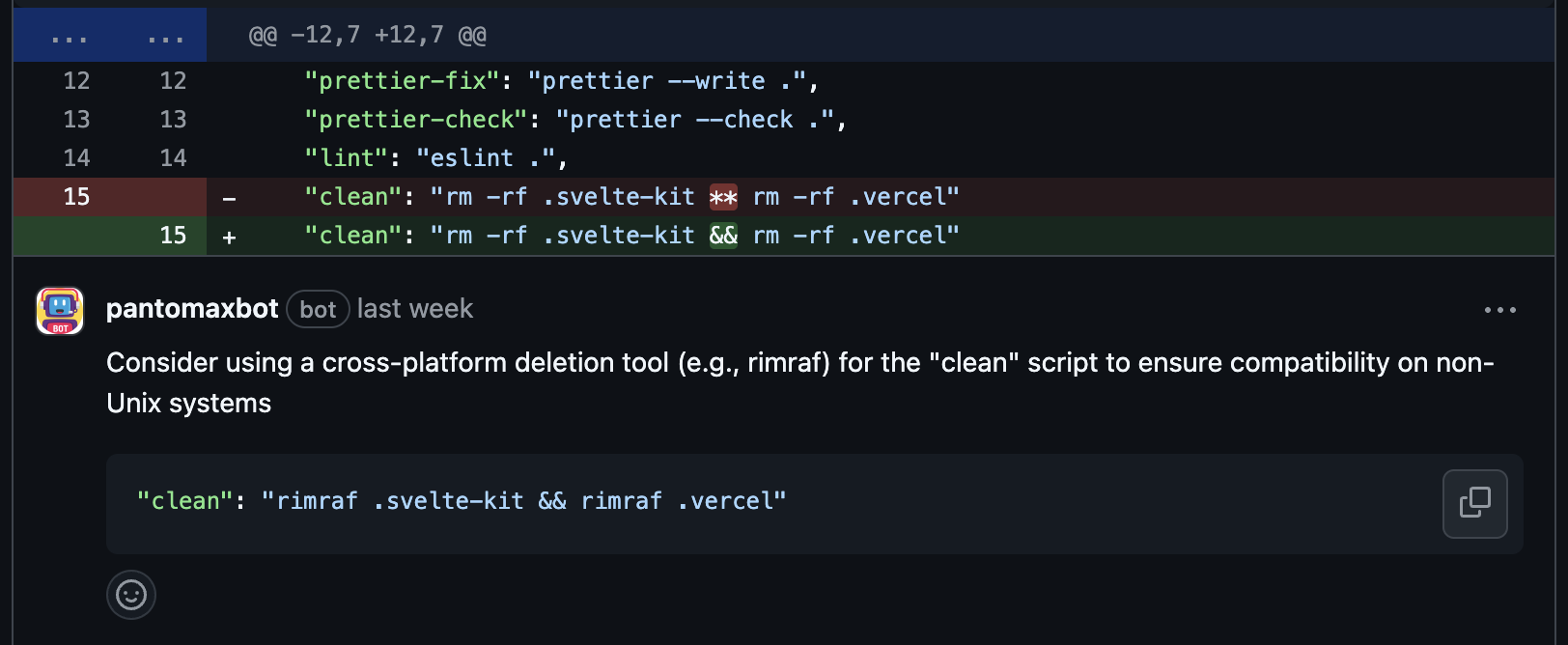

We have specialized Panto's agentic workflow to address what's most important. We don't do graphs or poems. Panto is a no-noise, no-fluff PR review agent that focuses on flagging critical bugs. Panto AI flagged 20% more critical bugs than CodeRabbit (12 vs. 10).

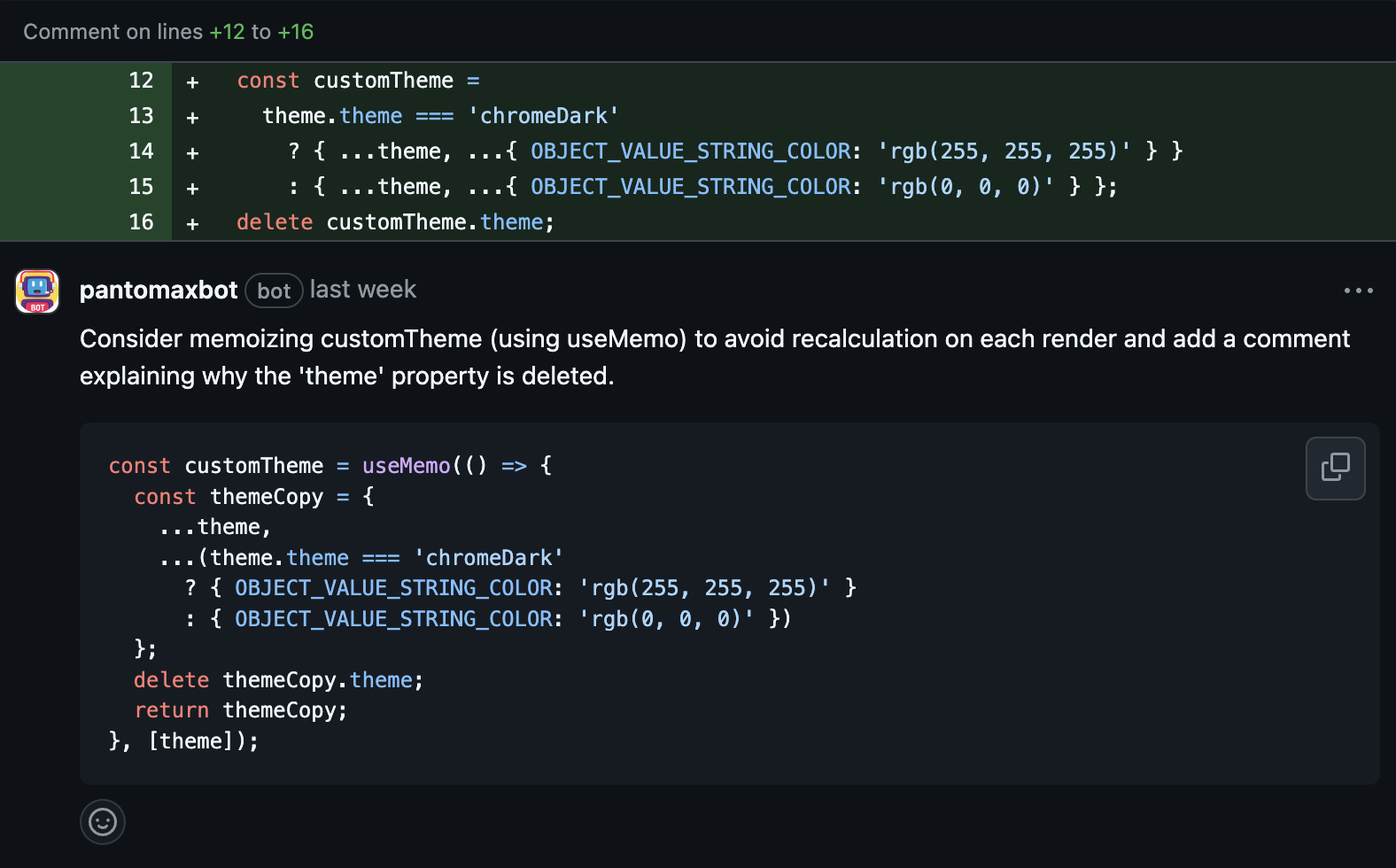

We have spent a lot of time building agentic workflows to help organizations ship quality code. Refactoring is a crucial part of that, and we help engineers identify the key parts of the code that need work to make it suitable for scale. Panto AI made 75% more refactoring suggestions than CodeRabbit (14 vs. 8).

We have optimized Panto AI to check that code built for scale consumes the right amount of resources. Panto flagged 5 times as many performance optimizations as CodeRabbit (5 vs. 1) on the same set of 17 pull requests.

Code reviews are subjective, and one of the biggest enemies of automated AI code review is the comment that nudges engineers to "ensure" or "verify" something. These usually aren't actionable. Panto has a filter layer that lets such comments through as inline comments only if they are highly critical; otherwise it confines them to the summary of changes or, more often, rejects them. In the sample set considered, Panto was very deterministic and did not produce any "good to have" (a.k.a. validation) suggestions, while CodeRabbit produced 8.

The code review comments engineers hate most, whether they come from bots or humans, are nitpicks that aren't required. Panto is a no-noise, no-fluff code review bot. CodeRabbit produced more than 4 times as many nitpicking comments (13 vs. 3) on the same set of 17 pull requests.

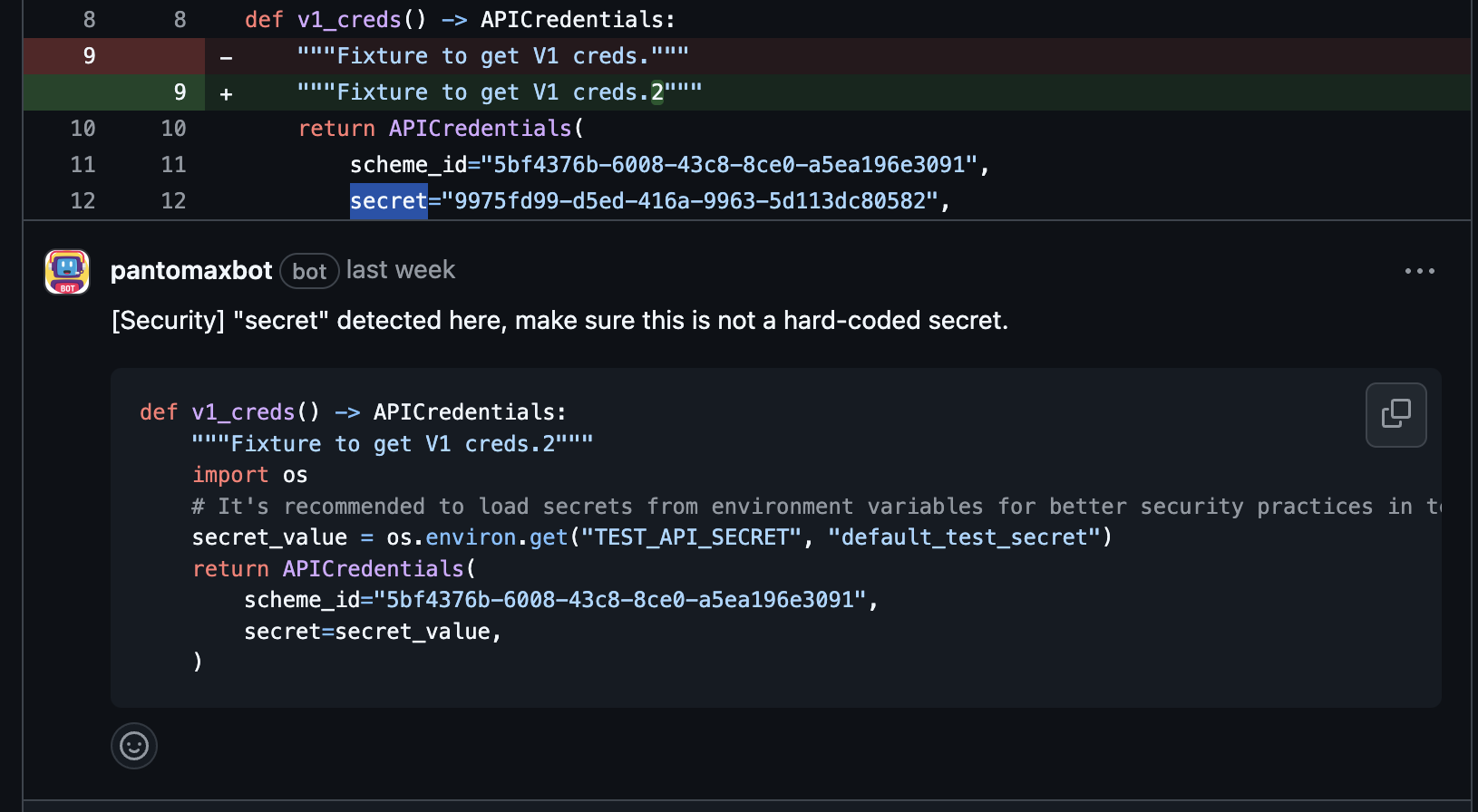

False positive comments are criminal. We have room to improve here, and we are on it: Panto produced 4 false positives to CodeRabbit's 2. CodeRabbit's filtering engine does a good job of keeping false positives out. At Panto, we are lacing up to get better at this.

Speed is a superpower. I've never met a dev who said, "I can wait." We observed that CodeRabbit can take up to 5 minutes to provide comments on a raised PR, whereas our reviews are typically delivered in less than a minute. This may seem like a small difference, but waiting 5 minutes to fix issues can be incredibly frustrating.

How Have We Reached Where We Are?

By keeping a clear focus on doing one thing well: providing actionable feedback to developers. At Panto, we never had the time or the mindspace to think about poems, graphs, or sequence diagrams. If we don't need something as devs, we won't push it on our customers either.

If you are a dev who is thinking of getting an extra pair of hands to review your code, you know who to choose. If you are an engineering manager or CTO thinking of getting an AI coding agent to save your team code review time and effort, here's a framework for making data-backed decisions.

Appendix

- Open Source Framework

- Open data - Excel Sheet

  - Sheet 1: Analysis Summary

  - Sheet 2: Data, Repo Links, Comments, Classifications
